Introduction

The Rise of AI APIs

Over the past few years, AI has rapidly transitioned from research labs to real-world applications. Thanks to AI APIs from providers like OpenAI, Google Gemini, Anthropic Claude, and others, developers can now access powerful large language models (LLMs) with just a few lines of code, making Spring Boot AI integration increasingly practical.

The New Problem: Integration Complexity

However, with that ease comes a new kind of complexity:

- Every provider has its own SDK

- Different authentication flows

- Inconsistent response formats

- Manual code duplication for switching vendors

This makes supporting multiple providers, or switching between them, tedious and error-prone, especially for those exploring Spring AI OpenAI integration.

Enter Spring AI: A Unified Abstraction

That’s where Spring AI comes in. Developed by the Spring team, this project brings the Spring Boot developer experience to LLMs by offering:

- A consistent programming model

- Unified configuration patterns

- Easy switching between providers like OpenAI and Gemini

What You'll Learn in This Blog

In this post, we’ll walk through a working Spring Boot POC that integrates both OpenAI and Google Gemini. You’ll learn how to:

- Use Spring AI’s ChatClient abstraction

- Swap providers with just a parameter change

- Avoid vendor lock-in from day one using Spring AI LLM practices

Whether you're exploring providers or aiming to build a flexible AI-powered backend, this post is for you. If you're planning to apply LLMs in real-world apps, consider AI and machine learning consulting to help architect scalable, intelligent solutions tailored to your domain.

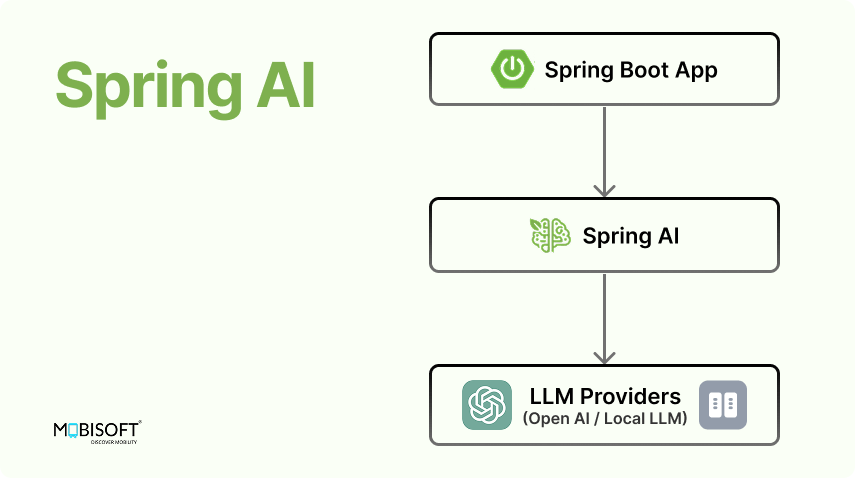

What is Spring AI?

Spring AI is a relatively new addition to the Spring ecosystem that brings AI model integration, especially large language models (LLMs), into the familiar Spring Boot world. Just like Spring abstracts database access, messaging, and security, Spring AI does the same for AI and ML capabilities.

Instead of wrestling with REST APIs, raw payloads, and provider-specific quirks, Spring AI provides clean, Spring-style abstractions for interacting with models such as GPT and Gemini, whether accessed directly or through services like Azure OpenAI.

Why Spring AI Matters

At its core, Spring AI lets you:

- Use a standardized interface (ChatClient) to interact with multiple providers

- Avoid boilerplate code

- Easily switch between LLM providers (OpenAI, Gemini, Azure, etc.) with minimal config changes using Spring AI Java LLM integration techniques

This means you focus on business logic, not SDK wiring.

Core Features

Unified ChatClient Abstraction

Spring AI provides a common ChatClient API for sending prompts and receiving responses, regardless of which model or provider is behind the scenes.

chatClient.prompt("Your input").call().content();

This enables plug-and-play flexibility when changing backends and supports the growing interest in how to integrate LLMs in Spring Boot.

Easy Switching Between Providers

You can swap between OpenAI and Google Gemini just by changing a parameter in the request. No rewrites.

Auto-Configuration for Popular Providers

Spring AI supports the following providers out of the box:

- OpenAI via spring-ai-starter-model-openai

- Google Gemini (Vertex AI) via spring-ai-starter-model-vertex-ai-gemini

The right beans and defaults are wired automatically based on your Maven dependencies.

Spring Boot Starter-Style Integration

Following the “convention over configuration” philosophy, Spring AI:

- Uses familiar Spring Boot conventions

- Registers beans automatically

- Supports externalized configuration (application.properties / application.yml)

What Makes Spring AI Shine

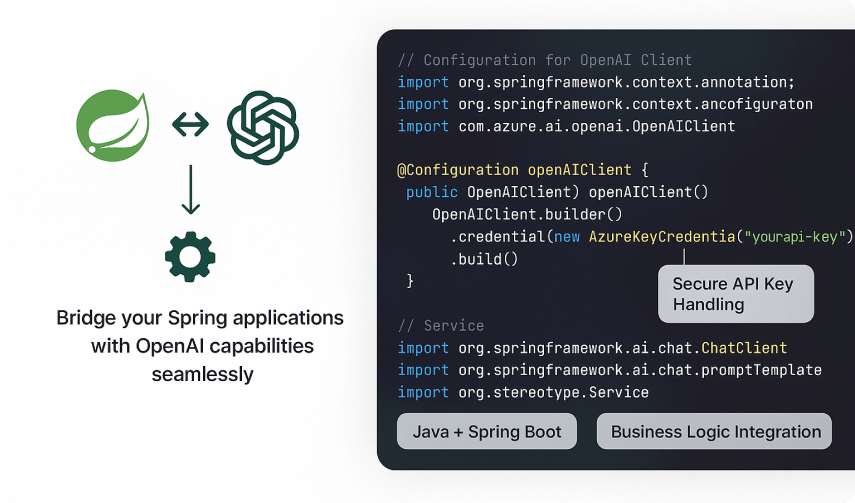

With Spring AI, integrating LLMs becomes as effortless as configuring a data source or message queue. You don’t need to deal with REST templates, auth headers, or manual JSON parsing; just drop in the dependency and start prompting.

Why Use Spring AI?

As AI APIs like OpenAI, Gemini, and Azure OpenAI continue to evolve, Java developers face a tough question:

How can we integrate LLMs flexibly without vendor lock-in or duplicated effort?

Spring AI answers this with a clean, unified, and production-ready abstraction. Here’s why it’s a great fit for modern AI apps:

Avoid Vendor Lock-In with a Consistent API

Each provider has its own SDK quirks, auth flow, and response format. That leads to tightly coupled code and headaches when switching providers.

Spring AI solves this with a common ChatClient interface:

chatClient.prompt("Generate a summary").call().content();

This makes your application vendor-agnostic, perfect for evaluating providers or complying with regional/data regulations. Examples of this flexibility can be seen in Spring AI LLM integration use cases.

Minimal Learning Curve for Spring Developers

If you already know how to build REST APIs and configure beans in Spring Boot, you already know 90% of what it takes to use Spring AI.

No need to learn the intricacies of each LLM provider’s SDK.

Spring AI fits naturally into familiar Spring concepts:

- Auto-configured models via Spring Boot starters

- Externalized config via application.yml

- Standard annotations like @Bean

This dramatically reduces time-to-POC and onboarding effort even in complex scenarios like Spring AI RAG pipeline implementations.

Clean Integration Without Boilerplate

Integrating LLMs the traditional way involves writing verbose REST client logic:

- Manual JSON payloads

- HTTP headers for API keys

- Response parsing and error handling

With Spring AI, all of that disappears behind a single clean abstraction:

String reply = chatClient.prompt("What are the features of Java?").call().content();

No need for RestTemplate, WebClient, or third-party SDKs. It’s plug-and-play, and the same abstraction extends to advanced use cases such as RAG with a vector store.
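For contrast, here is a rough sketch of the plumbing Spring AI hides, written with the JDK’s own java.net.http client (Java 11+). It only builds an OpenAI Chat Completions request (URL, bearer-token header, JSON body) and does not send it. The class name RawOpenAiRequest is hypothetical, the model name is whatever the caller passes in, and a real client would still need response parsing, error mapping, and retries on top.

```java
import java.net.URI;
import java.net.http.HttpRequest;

/**
 * For contrast only: a hand-built OpenAI Chat Completions request using the JDK HTTP client.
 * This is the plumbing (URL, auth header, JSON body) that Spring AI generates for you.
 */
final class RawOpenAiRequest {

    static HttpRequest build(String apiKey, String model, String prompt) {
        String body = "{\"model\":" + jsonString(model)
                + ",\"messages\":[{\"role\":\"user\",\"content\":" + jsonString(prompt) + "}]}";
        return HttpRequest.newBuilder()
                .uri(URI.create("https://api.openai.com/v1/chat/completions"))
                .header("Authorization", "Bearer " + apiKey)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    /** Minimal JSON string encoder: escapes quotes, backslashes and control characters. */
    static String jsonString(String s) {
        StringBuilder out = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> out.append("\\\"");
                case '\\' -> out.append("\\\\");
                case '\n' -> out.append("\\n");
                default -> {
                    if (c < 0x20) {
                        out.append(String.format("\\u%04x", (int) c)); // other control chars
                    } else {
                        out.append(c);
                    }
                }
            }
        }
        return out.append('"').toString();
    }
}
```

Sending it would take another call such as HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()), and extracting choices[0].message.content from the reply, plus handling 4xx/5xx responses, is more code again.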

Unified Error Handling Across Providers

Each LLM provider returns different error formats (e.g. HTTP 429 for rate limits, or custom model not found errors).

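For its REST-based integrations such as OpenAI, Spring AI translates HTTP error responses into exceptions from the org.springframework.ai.retry package. By default, client errors (4xx) become NonTransientAiException, while other failures become TransientAiException and are retried according to the spring.ai.retry.* settings. Integrations built on a vendor SDK, such as Vertex AI Gemini, may surface the SDK’s own exceptions instead, so consider an additional catch-all for those:

import org.springframework.ai.retry.NonTransientAiException;
import org.springframework.ai.retry.TransientAiException;

try {
    String reply = chatClient.prompt("Your Prompt").call().content();
} catch (NonTransientAiException ex) { // e.g. invalid API key or bad request: retrying won't help
    log.error("AI call rejected: {}", ex.getMessage());
} catch (TransientAiException ex) {    // still failing after Spring AI's built-in retries
    log.warn("AI call failed after retries: {}", ex.getMessage());
}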

Key Takeaways

Consistent API Across Providers

Use the same ChatClient code whether you're calling OpenAI, Gemini, or Azure; no provider-specific logic is needed.

Switch Models via a Parameter, Not Code Changes

Configure each provider once, then choose between them per request.

Minimal Learning Curve for Spring Devs

If you know Spring Boot, you know Spring AI; it reuses familiar concepts like @Bean, application.yml, and auto-config.

No Boilerplate, Just Business Logic

Forget about writing HTTP clients and response parsers. One method call does the job:

chatClient.prompt("Your question").call().content();

Unified Error Handling Out of the Box

Spring AI maps many provider errors onto a common exception model, making it easier to integrate retries, circuit breakers, and logging.
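To make the retry idea concrete, here is a dependency-free sketch of retry with exponential backoff. TransientFailure and RetrySketch are hypothetical names standing in for whatever retryable error your provider surfaces (in Spring AI’s REST-based clients, typically TransientAiException). In a real application you would more likely rely on Spring AI’s spring.ai.retry.* properties, Spring Retry, or Resilience4j than hand-roll this loop.

```java
import java.util.function.Supplier;

/** Hypothetical marker for failures worth retrying (rate limits, temporary outages). */
class TransientFailure extends RuntimeException {
    TransientFailure(String message) {
        super(message);
    }
}

final class RetrySketch {

    /**
     * Runs {@code action} up to {@code maxAttempts} times, doubling the delay after each
     * TransientFailure. Any other exception propagates immediately, without a retry.
     */
    static <T> T withRetry(Supplier<T> action, int maxAttempts, long initialDelayMillis) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        long delay = initialDelayMillis;
        for (int attempt = 1; ; attempt++) {
            try {
                return action.get();
            } catch (TransientFailure ex) {
                if (attempt >= maxAttempts) {
                    throw ex; // out of attempts: surface the last transient failure
                }
                try {
                    Thread.sleep(delay);
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt(); // preserve the interrupt flag
                    ex.addSuppressed(ie);
                    throw ex;
                }
                delay *= 2; // exponential backoff: 1x, 2x, 4x, ...
            }
        }
    }
}
```

Only the transient failure type is retried; anything else (such as an invalid API key) propagates on the first attempt, which is the usual reason to separate retryable from non-retryable errors.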

Spring AI Building Blocks (Under the Hood)

Now that you’ve seen why Spring AI helps simplify LLM integration, let’s unpack how it works. Behind its clean API, Spring AI provides a well-structured set of components that align naturally with Spring Boot development patterns.

ChatClient: The Unified Gateway

At the core of Spring AI is the ChatClient, a single interface that abstracts away all provider-specific logic. Whether you're talking to OpenAI, Google Gemini, or Azure, your code stays the same:

String reply = chatClient
        .prompt("Summarize this article")
        .call()
        .content();

This keeps Spring AI LLM integration low-friction: there are no provider SDKs or response formats to learn; you just prompt, call, and consume the result.

Provider-Specific Model Beans (For Fine-Grained Control)

When you need more control, Spring AI exposes model-specific beans for Spring AI Java LLM integration use cases:

- OpenAiChatModel

- VertexAiGeminiChatModel

- AzureOpenAiChatModel

These beans let you configure:

- Temperature

- Max tokens

- Model version

- Streaming or non-streaming responses (selected per request via stream() or call())

This layered model lets developers fine-tune behavior while still benefiting from high-level Spring AI prompt engineering abstractions.
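In Spring AI, options set per request (for example an OpenAiChatOptions instance passed through ChatClient’s options(...) method) take precedence over the defaults configured on the model bean or in spring.ai.openai.chat.options.*. The record below is a hypothetical, dependency-free stand-in, not a Spring AI type; it only illustrates that precedence rule, treating a null field as “not set”.

```java
/**
 * Hypothetical stand-in for provider chat options. A null field means "not set".
 */
record ChatSettings(String model, Double temperature, Integer maxTokens) {

    /** Returns new settings in which every non-null field of {@code runtime} wins over this default. */
    ChatSettings overrideWith(ChatSettings runtime) {
        if (runtime == null) {
            return this; // nothing to override
        }
        return new ChatSettings(
                runtime.model() != null ? runtime.model() : model,
                runtime.temperature() != null ? runtime.temperature() : temperature,
                runtime.maxTokens() != null ? runtime.maxTokens() : maxTokens);
    }
}
```

For example, defaults of model gpt-4o-mini, temperature 0.7 and 512 max tokens, combined with a request that sets only temperature 0.1, yield gpt-4o-mini at 0.1 with 512 tokens.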

Auto-Configuration via Starters

Spring AI follows Spring Boot conventions: include the right starter, and everything “just works.”

<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-starter-model-openai</artifactId>
</dependency>

No version is needed here when the Spring AI BOM (shown later) manages dependency versions. This ease of integration makes Spring AI quick to adopt, especially for projects exploring how to integrate LLMs in Spring Boot.

Seamless Integration with the Spring Ecosystem

Spring AI plugs in smoothly with other Spring modules:

- Spring Web – Build RESTful endpoints that call LLMs

- Spring Security – Secure access to your AI endpoints

- Spring Boot Actuator & Micrometer – Monitor and trace AI usage

- Spring Retry / Resilience4j – Add retry logic or circuit breakers around LLM calls

This makes Spring AI a great fit whether you're prototyping or building something for production.

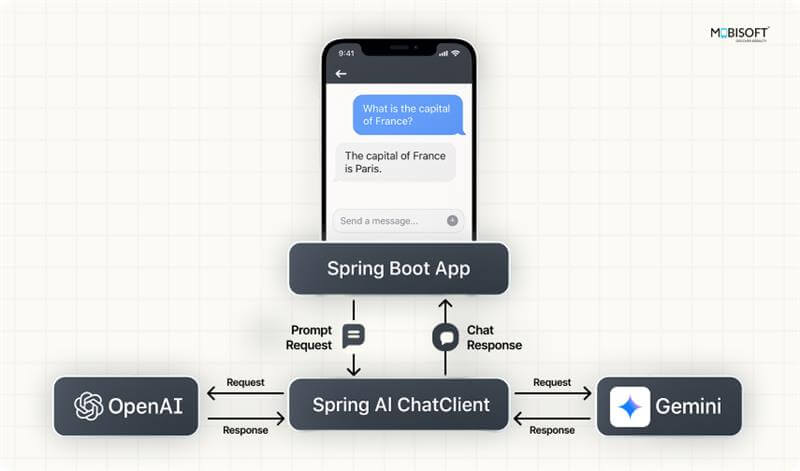

Overview of the POC App

To demonstrate the practical value of Spring AI, this Proof of Concept (POC) application showcases a simple yet flexible architecture that dynamically integrates with multiple LLM providers, including OpenAI and Google Gemini (Vertex AI), within a single Spring Boot AI application.

This example is intentionally lightweight and focused, allowing you to grasp the core mechanics of Spring AI without being distracted by unrelated boilerplate or UI elements. It's a great Spring AI tutorial for building foundational understanding.

Tech Stack

The POC leverages the latest tools in the Spring ecosystem along with standard modern Java:

- Java 17: Modern language features, better performance, and long-term support (LTS)

- Spring Boot 3.5.0: Latest version with support for Jakarta EE 10, enhanced observability, and better native compilation compatibility

- Spring AI (SNAPSHOT version): Pulling in the latest Spring AI features, including support for OpenAI, Gemini, and more.

- Maven: For dependency and build management

This stack is fully open-source, well-supported, and ready for rapid prototyping or production evolution.

What the App Does

At a high level, the application exposes a single REST API endpoint:

POST /ai with a JSON body such as {"prompt": "YourText", "model": "openai"}

You can send any natural language prompt to this endpoint along with the model to be used, and it will respond with an AI-generated reply from the requested model.

But here’s the key feature: The app dynamically switches between OpenAI and Gemini based on the request.

This means:

- No need for separate codebases or microservices per provider

- Just configure the required properties and the appropriate bean

- The correct ChatClient is selected based on the model parameter

- You’re free to test, compare, or switch models with zero logic changes
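The routing rule itself is small. The following sketch shows it without Spring: each “client” is modelled as a hypothetical UnaryOperator<String> (prompt in, reply out) rather than a real ChatClient, and the class name ModelRouter is made up for illustration. The AIController later in this post applies the same idea to the Map<String, ChatClient> that Spring injects.

```java
import java.util.Locale;
import java.util.Map;
import java.util.Optional;
import java.util.function.UnaryOperator;

/**
 * Dependency-free sketch of name-based provider routing. Map keys are expected in lower case.
 */
final class ModelRouter {
    private final Map<String, UnaryOperator<String>> clients;
    private final String defaultKey;

    ModelRouter(Map<String, UnaryOperator<String>> clients, String defaultKey) {
        this.clients = Map.copyOf(clients);
        this.defaultKey = defaultKey.toLowerCase(Locale.ROOT);
    }

    /** Resolves the requested model (case-insensitive), falling back to the default when absent. */
    Optional<UnaryOperator<String>> resolve(String requestedModel) {
        String key = (requestedModel == null || requestedModel.isBlank())
                ? defaultKey
                : requestedModel.trim().toLowerCase(Locale.ROOT);
        return Optional.ofNullable(clients.get(key));
    }
}
```

Returning an Optional lets the caller decide how to report an unknown model, for example with an error message or an HTTP 400.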

Minimal Configuration Per Provider

One of the strengths of Spring AI, and a major goal of this POC, is to demonstrate how little configuration is needed to get started with different AI providers:

- For OpenAI, you just provide your API key via application.properties

- For Gemini, the setup points to Google’s Vertex AI with project ID and credentials, but still uses a similar ChatClient interface

There’s no need to manually build HTTP requests, set headers, or parse responses; the Spring AI abstraction handles it all. You focus on the prompt design and the response, not the plumbing. This highlights how Spring AI Prompt Design Patterns can simplify implementation.

This POC is ideal for:

- Developers evaluating AI providers

- Teams building platform-agnostic AI capabilities

- Use cases where flexibility, speed, and clean separation of concerns matter, such as in Spring AI RAG pipeline development

In the next section, we'll walk through how the code is structured, including configuration files and key Java components.

Code Walkthrough

Let’s take a look under the hood of the POC and understand how Spring AI is integrated using standard Spring Boot conventions. The setup is simple, modular, and designed to make switching between LLM providers as painless as possible.

POM Configuration

The project uses Maven to manage dependencies.

Import the Spring AI BOM

The Spring AI project is still evolving, so you'll want to import the Spring AI BOM (Bill of Materials) to ensure all dependencies stay aligned and compatible:

<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-bom</artifactId>
            <version>1.0.0-SNAPSHOT</version> <!-- or latest available -->
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>

This ensures consistent versioning across all Spring AI modules.

Also, some of the model integrations may currently exist only in snapshot or milestone versions.

To resolve these, add the following repositories to your pom.xml:

<repositories>
    <repository>
        <id>spring-snapshots</id>
        <name>Spring Snapshots</name>
        <url>https://repo.spring.io/snapshot</url>
        <snapshots>
            <enabled>true</enabled>
        </snapshots>
    </repository>
    <repository>
        <id>spring-milestones</id>
        <name>Spring Milestones</name>
        <url>https://repo.spring.io/milestone</url>
        <snapshots>
            <enabled>false</enabled>
        </snapshots>
    </repository>
</repositories>

Add Provider-Specific Starters

Next, declare the dependencies for the LLM providers you want to support:

<!-- OpenAI Starter -->
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-starter-model-openai</artifactId>
</dependency>

<!-- Vertex AI Gemini Starter -->
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-starter-model-vertex-ai-gemini</artifactId>
</dependency>

These starters support Spring AI Java LLM integration and include the model classes, auto-configuration, and ChatClient support.

Now, running mvn spring-boot:run will load the configurations and beans.

(Vertex AI Gemini needs one extra step for credentials, which we cover below.)

Application Properties

Spring AI uses auto-configuration to connect to LLM providers. You just need to supply basic credentials and metadata in your application.properties or application.yml.

For OpenAI:

spring.ai.openai.api-key=YOUR_OPENAI_KEY

You can retrieve your OpenAI key from: https://platform.openai.com/account/api-keys

For Google Gemini (Vertex AI):

spring.ai.vertex.ai.gemini.api-endpoint=us-central1-aiplatform.googleapis.com
spring.ai.vertex.ai.gemini.project-id=YOUR_PROJECT_ID
spring.ai.vertex.ai.gemini.location=us-central1

If you are using an application.yaml:

spring:
  ai:
    openai:
      api-key: YOUR_OPENAI_KEY
    vertex:
      ai:
        gemini:
          api-endpoint: us-central1-aiplatform.googleapis.com
          project-id: YOUR_PROJECT_ID
          location: us-central1

In addition to these properties, make sure your application can authenticate using a service account JSON key (explained in the next section). This is required by Google Cloud SDKs.

Note on Region & Endpoint

The location and api-endpoint values in the Gemini configuration (e.g., us-central1) depend on where your Vertex AI resources are deployed.

If your GCP project is using a different region (e.g., europe-west4, asia-southeast1), update both:

spring.ai.vertex.ai.gemini.location=your-region
spring.ai.vertex.ai.gemini.api-endpoint=your-region-aiplatform.googleapis.com

You can verify your region in the Google Cloud Console under Vertex AI > Locations.
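Because the regional endpoint is simply the location followed by -aiplatform.googleapis.com, the two properties can drift apart if only one is edited. A small hypothetical helper like the one below makes that relationship explicit; it handles regional locations only (not a global endpoint) and is not part of Spring AI.

```java
import java.util.regex.Pattern;

/** Hypothetical helper: derives a regional Vertex AI endpoint from its location. */
final class VertexEndpoints {

    // Regional locations look like "us-central1", "europe-west4" or "asia-southeast1".
    private static final Pattern REGIONAL_LOCATION = Pattern.compile("[a-z]+-[a-z]+[0-9]+");

    static String forLocation(String location) {
        if (location == null || !REGIONAL_LOCATION.matcher(location).matches()) {
            throw new IllegalArgumentException("Not a regional Vertex AI location: " + location);
        }
        return location + "-aiplatform.googleapis.com";
    }
}
```

A startup check could, for instance, compare forLocation(location) with the configured api-endpoint and fail fast on a mismatch.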

Setting Up Credentials

To interact securely with OpenAI and Google Gemini (via Vertex AI), you’ll need to set up authentication credentials for both providers. This section walks through how to do that.

OpenAI API Key

Spring AI uses your OpenAI API key to authenticate requests to OpenAI’s servers.

Steps:

Create/Open an Account

Go to https://platform.openai.com and sign in or create a free account.

Generate API Key

- Navigate to your Account Settings > API Keys

- Click “+ Create new secret key”

- Copy and save the key securely (you won’t see it again)

Add to Spring Boot config

In your application.properties:

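spring.ai.openai.api-key=sk-XXXXXXXXXXXXXXXXXXXXXXXX

Note: Do not commit this key to Git. Keep it out of version control (for example with .gitignore and a local, untracked .env file), and in production supply it through an environment variable referenced by a placeholder such as spring.ai.openai.api-key=${OPENAI_API_KEY}.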

Google Gemini (Vertex AI) Setup

Gemini via Vertex AI requires more configuration due to GCP’s IAM and security model. Spring AI uses the official Google SDK under the hood, which authenticates through Google Application Default Credentials; in this setup, that means a service account JSON key file.

Steps:

Create a Google Cloud Project

- Go to https://console.cloud.google.com

- Click on “New Project”

- Give it a name (e.g., springai-demo)

- Note down the Project ID; you'll need it for application.properties

Enable Vertex AI API

- Go to APIs & Services > Library

- Search for “Vertex AI API”

- Click Enable

Create a Service Account

- Navigate to IAM & Admin > Service Accounts

- Click “Create Service Account”

- Assign it a name like springai-service

- Under "Role", choose Vertex AI > Vertex AI User, then complete creation

Download Credentials (JSON)

- After creating the account, go to the Keys tab

- Click “Add Key” > “Create New Key” > JSON

- Download and securely store this .json file

Set the Environment Variable

Spring AI (via the Google SDK) looks for the credentials file via an environment variable:

export GOOGLE_APPLICATION_CREDENTIALS=/path/to/your/credentials.json

If you're using an IDE like IntelliJ or Eclipse, ensure this environment variable is passed to the runtime configuration of your app.
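If you rely on the key-file approach above, a small fail-fast check at startup can turn a confusing authentication error into a clear message. CredentialsCheck below is a hypothetical helper, not part of Spring AI or the Google SDK. It takes the environment as a Map so it can be tested, and it would be too strict if you instead authenticate with gcloud auth application-default login.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Map;

/** Hypothetical fail-fast check for the Google credentials environment variable. */
final class CredentialsCheck {
    static final String VARIABLE = "GOOGLE_APPLICATION_CREDENTIALS";

    /** Returns the credentials path, or throws with a readable message if it is unusable. */
    static Path requireCredentialsFile(Map<String, String> env) {
        String value = env.get(VARIABLE);
        if (value == null || value.isBlank()) {
            throw new IllegalStateException(VARIABLE + " is not set");
        }
        Path path = Path.of(value);
        if (!Files.isRegularFile(path) || !Files.isReadable(path)) {
            throw new IllegalStateException(VARIABLE + " points to a missing or unreadable file: " + path);
        }
        return path;
    }
}
```

In an application you might call CredentialsCheck.requireCredentialsFile(System.getenv()) at the top of main, before SpringApplication.run(...).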

Summary

- OpenAI: Uses a simple API key

- Gemini: Requires service account authentication via a JSON key file

- Both can be seamlessly configured through Spring AI using minimal setup

This authentication setup ensures that your app can securely interact with LLMs while keeping your credentials out of the source code.

ChatClientConfig: Multi-Model Client Configuration

The ChatClientConfig class defines multiple ChatClient beans, each associated with a different AI provider. These beans are registered under specific names ("openai", "gemini"), allowing the application to dynamically select the appropriate client at runtime based on incoming API parameters.

ChatClientConfig:

import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.openai.OpenAiChatModel;
import org.springframework.ai.vertexai.gemini.VertexAiGeminiChatModel;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class ChatClientConfig {

    @Bean(name = "openai")
    ChatClient openAiChatClient(OpenAiChatModel model) {
        return ChatClient.builder(model).build();
    }

    @Bean(name = "gemini")
    ChatClient geminiChatClient(VertexAiGeminiChatModel model) {
        return ChatClient.builder(model).build();
    }
}

Explanation:

- Both ChatClient instances are registered as Spring beans, each with a unique name.

- These named beans correspond to different Spring AI LLM model implementations: OpenAI and Vertex AI Gemini.

- Instead of using conditional bean creation or environment-based selection, the application loads all supported clients during startup, supporting a multi-model Spring AI configuration.

- At runtime, the appropriate ChatClient is picked from a map of these beans (injected by Spring and keyed by bean name) using the model name supplied in the request.

Data Transfer Objects (DTOs)

Because the API uses a POST-based interaction that accepts input and returns structured output, we need two simple DTOs to represent the request and response formats.

AIRequest

This class captures the input payload sent by the client, including the prompt and the name of the AI model to use.

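public class AIRequest {
    private String prompt;
    private String model;

    public String getPrompt() { return prompt; }
    public void setPrompt(String prompt) { this.prompt = prompt; }
    public String getModel() { return model; }
    public void setModel(String model) { this.model = model; }
}

AIResponse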

This class defines the structure of the response returned by the API. It includes the name of the AI model used and the generated response content.

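public class AIResponse {
    private final String model;
    private final String response;

    public AIResponse(String model, String response) {
        this.model = model;
        this.response = response;
    }

    public String getModel() { return model; }
    public String getResponse() { return response; }
}

These DTOs keep the API logic clean and testable, and support Spring AI prompt engineering patterns by isolating input from output.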
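Since the POC targets Java 17, the same DTOs could also be written as records. This is an optional alternative, not what the POC uses: accessors become prompt() and model() instead of getPrompt() and getModel(), so the controller would need matching changes, and the defaults applied in the compact constructor (openai, Hello AI!) simply mirror the controller’s fallbacks. Jackson 2.12 and later can deserialize records, and current Spring Boot 3.x versions ship a newer Jackson than that.

```java
import java.util.Locale;

/**
 * Record alternative to AIRequest. The compact constructor normalises the model key
 * and applies the same fallbacks the controller uses.
 */
record AIRequest(String prompt, String model) {
    AIRequest {
        model = (model == null || model.isBlank()) ? "openai" : model.toLowerCase(Locale.ROOT);
        prompt = (prompt == null) ? "Hello AI!" : prompt;
    }
}

/** Immutable response payload; Jackson serialises record components as JSON properties. */
record AIResponse(String model, String response) { }
```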

REST Controller: Unified Endpoint for Both Providers

Here's the AIController, which exposes a single endpoint that routes each prompt to OpenAI or Gemini:

import com.mobisoftinfotech.springai.dto.AIRequest;
import com.mobisoftinfotech.springai.dto.AIResponse;
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.web.bind.annotation.*;

import java.util.Map;

@RestController
@RequestMapping("/ai")
public class AIController {

    // All ChatClient beans, keyed by bean name ("openai", "gemini")
    private final Map<String, ChatClient> chatClients;

    public AIController(Map<String, ChatClient> chatClients) {
        this.chatClients = chatClients;
    }

    @PostMapping
    public AIResponse getAIResponse(@RequestBody AIRequest request) {
        // Default to OpenAI when no model is specified
        String modelKey = request.getModel() != null ? request.getModel().toLowerCase() : "openai";
        ChatClient client = chatClients.get(modelKey);
        if (client == null) {
            return new AIResponse(modelKey, "Unsupported model: " + modelKey);
        }
        // Send the prompt (or a default greeting) to the selected provider
        String output = client.prompt(request.getPrompt() != null ? request.getPrompt() : "Hello AI!").call().content();
        return new AIResponse(modelKey, output);
    }
}

Explanation:

- This controller is designed to support multiple AI providers dynamically at runtime.

- Instead of being tied to a specific provider, it receives the desired model name (e.g., openai or gemini) along with the prompt as part of the request body. All available ChatClient instances are injected into the controller as a map, keyed by their bean names, showcasing how to perform Spring AI Java LLM integration.

- At runtime, the controller uses the model field from the request to look up the corresponding ChatClient and forward the prompt to it. If the requested model is not supported, the controller returns an appropriate message.

- This design decouples the controller from any specific AI provider and enables seamless switching or testing of different models through simple client-side parameters, without restarting or redeploying the application. It's an example of a Spring Boot AI setup supporting dynamic model selection.

Hit the Endpoint

Once the app is running, you can interact with the AI using a simple HTTP POST request:

curl -X POST http://localhost:8080/ai \
  -H "Content-Type: application/json" \
  -d '{
        "prompt": "What day is today?",
        "model": "gemini"
      }'

Response:

{
  "model": "gemini",
  "response": "Today is Sunday, October 15th, 2023."
}

This call sends your prompt to the selected AI model and returns its response. The same endpoint could also serve as the LLM backend for mobile apps that need real-time AI features.

Beyond the POC: Advanced Use Cases & What’s Next

Once your basic integration with OpenAI or Gemini is working, Spring AI offers further capabilities for building real-world, production-ready applications. These include advanced prompting patterns, structured output, contextual memory, and streaming, all while keeping your code clean and idiomatic for Spring Boot.

Here are some areas worth exploring:

Embeddings & Vector Databases (RAG)

Spring AI’s EmbeddingModel abstraction lets you convert text into vector representations. This is essential for:

- Semantic search

- Document similarity

- Retrieval-Augmented Generation (RAG)

You can store these embeddings in vector databases such as PGVector, Milvus, Qdrant, or Redis Vector Search. At query time, Spring AI RAG pipeline logic fetches relevant context based on vector similarity, which is then injected into the prompt for more accurate, context-aware responses.

EmbeddingModel model = ...; // provided by an embedding-capable starter, e.g. injected by Spring
float[] embedding = model.embed("Spring AI is amazing"); // Spring AI 1.0 returns float[]

This embedding step underpins the vector-store RAG scenarios used in real-world search. If your enterprise demands higher control or privacy, you can also deploy private LLMs with Spring Boot to ensure secure, on-premise AI deployments.
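To see what “fetching relevant context based on vector similarity” means mechanically, here is a toy, in-memory sketch of the retrieval and augmentation steps. It assumes the embeddings have already been computed (the tiny three-dimensional vectors in the test are made up), and ToyRetriever is a hypothetical class; in Spring AI this work is done by an EmbeddingModel plus a VectorStore implementation such as PGVector, not by code like this.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

/** Toy, in-memory illustration of the retrieval step in RAG. */
final class ToyRetriever {

    /** Cosine similarity: dot(a, b) / (|a| * |b|). */
    static double cosine(float[] a, float[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Vectors must have the same dimension");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    /** Returns the texts of the k documents whose vectors are most similar to the query vector. */
    static List<String> topK(Map<String, float[]> docs, float[] query, int k) {
        return docs.entrySet().stream()
                .sorted(Comparator.comparingDouble(
                        (Map.Entry<String, float[]> e) -> cosine(e.getValue(), query)).reversed())
                .limit(k)
                .map(Map.Entry::getKey)
                .collect(Collectors.toList());
    }

    /** Injects the retrieved context into the prompt: the "augmented" part of RAG. */
    static String augment(String question, List<String> context) {
        return "Answer using only this context:\n" + String.join("\n", context)
                + "\n\nQuestion: " + question;
    }
}
```

Cosine similarity compares direction rather than length, which is why it is a common default for text embeddings; a zero vector would make it undefined (NaN), so real stores guard against that.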

Prompt Templating & Structured Output

Hardcoding prompts quickly becomes unmanageable. Spring AI supports:

- Prompt templates (inline strings or template files loaded as resources)

- Dynamic variable injection

- Output parsers for mapping responses to Java objects

This enables safer, reusable, and testable prompt patterns and makes structured outputs (e.g., JSON into Java POJOs) easier to handle.

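import org.springframework.ai.chat.prompt.PromptTemplate;
import org.springframework.ai.converter.BeanOutputConverter;

// Spring AI's default template syntax uses single braces: {text}
PromptTemplate template = new PromptTemplate("Translate '{text}' to Spanish");
String rendered = template.render(Map.of("text", "Hello World"));

BeanOutputConverter<Person> converter = new BeanOutputConverter<>(Person.class);
Person person = converter.convert(llmResponse);
// Or let ChatClient do both steps: chatClient.prompt(...).call().entity(Person.class)

Streaming with Server-Sent Events (SSE)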

For real-time user interfaces or chatbots, token-by-token streaming creates a better user experience. Most providers support it, and Spring AI integrates this via Project Reactor:

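import org.springframework.http.MediaType;
import reactor.core.publisher.Flux;

// Assumes a single injected chatClient; adjust the path under a class-level @RequestMapping
@GetMapping(value = "/ai/stream", produces = MediaType.TEXT_EVENT_STREAM_VALUE)
public Flux<String> streamResponse(@RequestParam String prompt) {
    return chatClient.prompt()
            .user(prompt)
            .stream()    // streaming counterpart of call()
            .content();  // Flux<String> emitting text chunks as they arrive
}

To ensure visibility and performance tracking in production, monitoring your Spring Boot-based AI services is recommended to capture streaming response times, token generation delays, and error trends.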

Tool Use & Agent-Like Workflows (Experimental)

Spring AI is evolving toward LLM agents that can decide when to:

- Call APIs

- Perform calculations

- Search databases or trigger downstream actions

This is similar to LangChain’s tool calling or OpenAI’s function calling. While still experimental, it lays the foundation for agent-like systems with multi-step reasoning and dynamic tool orchestration.
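Recent Spring AI releases expose tool calling through methods annotated with @Tool and registered per request with ChatClient’s tools(...) method, with Spring AI handling the round trip to the model. The dependency-free toy below only illustrates the application side of that loop: look up the tool the model asked for, run it, and hand back the result. ToolCall and ToolDispatcher are hypothetical names, not Spring AI types. A date tool is a natural first example, since a model on its own has no reliable way to know today’s date, as the “What day is today?” prompt earlier in this post suggests.

```java
import java.time.LocalDate;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical tool request: which tool the model wants to run, and with what argument. */
record ToolCall(String name, String argument) { }

/** Toy registry that executes tools by name; real providers exchange structured JSON instead. */
final class ToolDispatcher {
    private final Map<String, Function<String, String>> tools;

    ToolDispatcher(Map<String, Function<String, String>> tools) {
        this.tools = Map.copyOf(tools);
    }

    /** Example registry with one tool the model cannot answer on its own: today's date. */
    static ToolDispatcher withDateTool() {
        Map<String, Function<String, String>> registry =
                Map.of("currentDate", ignored -> LocalDate.now().toString());
        return new ToolDispatcher(registry);
    }

    /** Runs the requested tool; the result would then be sent back to the model as context. */
    String execute(ToolCall call) {
        Function<String, String> tool = tools.get(call.name());
        if (tool == null) {
            return "error: unknown tool '" + call.name() + "'";
        }
        return tool.apply(call.argument());
    }
}
```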

When orchestrating long-running or complex LLM processes, consider distributed workflow management using sagas or event-driven patterns.

Lessons Learned from the POC

During this project, a few consistent takeaways emerged:

Rapid productivity with model-agnostic design

The ChatClient abstraction allows integration with multiple AI providers (like OpenAI and Gemini) with minimal configuration. By injecting named beans and selecting the appropriate one at runtime, we can switch providers without altering core business logic.

Spring idioms = low friction

If you're comfortable with Spring Boot, working with Spring AI feels natural. From @Bean definitions to structured configuration, everything follows familiar Spring patterns.

Focus on business logic, not infrastructure

We didn’t have to write custom HTTP clients, serialization code, or error handling layers. Spring AI handles communication with underlying AI services, letting us focus on delivering user-facing features. For production-grade stability, incorporate retry patterns and circuit breakers for handling failures in AI workflows.

Conclusion

As the landscape of generative AI evolves rapidly, the tools we use to integrate with it must be just as agile. Spring AI offers a powerful abstraction that brings the strengths of the Spring ecosystem (modularity, configurability, and developer productivity) to the world of large language models.

Even with just a few classes and configuration files, you get a fully functional AI-backed API that’s modular, extensible, and a solid foundation for production.

But this is only the beginning. Spring AI is actively evolving to support features like:

- Streaming responses for real-time interactivity

- Structured output parsing

- Prompt templating

- Retrieval-augmented generation (RAG)

- Tool-using agents and orchestration

Whether you're building an intelligent chatbot, a document summarizer, or integrating AI into business workflows, Spring AI lets you focus on what your app should do, not how to wire up every model-specific detail.

If you're a Java or Spring developer and you're starting to explore AI in your projects, Spring AI is one of the fastest and cleanest ways to get started. Try this POC structure, plug in your preferred AI provider, and build something useful fast.

You can integrate these components into custom enterprise software development projects tailored to your specific business needs.

You can find the complete source code for the POC on GitHub here:

Clone it, experiment with prompts, switch providers, and see how easily you can add Spring AI integration to your Java applications.

August 1, 2025
