Introduction

Microservices architecture has become the go-to approach for building scalable, modular, and independently deployable systems. By breaking down a system into smaller, loosely coupled services, teams can innovate faster and scale components independently.

However, while microservices offer clear architectural benefits, they also introduce complexity, particularly around communication between services. These systems depend heavily on network interactions, making them susceptible to latency issues and partial failures.

This is where resilience in microservices becomes essential. Building fault tolerant microservices means anticipating failures and mitigating their impact to ensure system stability and a smooth user experience.

To address these concerns, libraries such as Resilience4j have emerged. Resilience4j is a lightweight fault-tolerance library for Java, designed around functional programming, that integrates with Spring Boot. It offers several resilience patterns that improve service-to-service reliability, including Circuit Breaker, Retry, and Bulkhead.

Tools like Resilience4j align well with efforts focused on modernizing applications with digital transformation, enabling fault-tolerant service design in cloud environments.

For a broader introduction to microservices and their role in modern software architecture, it's essential to understand how they evolved from traditional monolithic systems.

Challenges in Microservices

While monolithic applications operate largely within a single process, microservices rely on distributed communication, usually over HTTP or messaging systems. This introduces new classes of failure that must be accounted for:

Latency Overhead

In a monolith, method calls are in-process and virtually instantaneous. In microservices, these calls occur over the network, leading to increased latency. Even a small delay in a downstream service can ripple through the system, resulting in cascading latency and degraded user experience.

Dependency Failures

Each microservice typically depends on one or more downstream services. If a dependent service is slow or unavailable, it can trigger timeouts or exceptions. Left unchecked, this can lead to resource exhaustion, where threads or connections are consumed waiting on failed services, resulting in cascading failures that impact the whole system.

These challenges underscore the need for resilience patterns in Java-based microservices. By integrating techniques such as the Resilience4j Circuit Breaker, Retry pattern in microservices, and Bulkhead pattern, we can significantly enhance the fault tolerance and robustness of our Spring Boot applications.

These practices align closely with core Spring Boot microservices principles that guide scalable, reliable, and maintainable service development.

Key Benefits of Resilience Patterns

1. Prevent Cascading Failures

The Circuit Breaker resilience4j implementation stops repeated calls to failing services, protecting the system from overload and minimizing downstream impact.

For example, if a payment service in an e-commerce app is slow, the circuit breaker stops further calls to it temporarily, ensuring the frontend remains responsive and other services aren't affected. This allows the system to degrade gracefully while the failing service recovers.

2. Isolate Resource Contention

The bulkhead pattern in microservices ensures that one service or thread pool doesn't monopolize resources, allowing other parts of the system to continue functioning.

For example, if a reporting module starts consuming too many threads, a bulkhead can limit its concurrency, ensuring that core features like order placement in an e-commerce app remain unaffected and responsive.

3. Automatically Recover from Transient Failures

The retry pattern in microservices automatically retries failed calls a configured number of times before giving up. It handles transient faults like temporary network issues or backend unavailability.

For example, if a backend service intermittently fails due to a brief timeout or momentary unavailability, retrying the request can often succeed without impacting the user experience. This reduces error rates and improves system reliability without requiring manual intervention, allowing services to self-heal from transient problems.

Combining resilience patterns with DevOps automation and monitoring ensures better observability and proactive failure management across microservices.

Key Resilience Patterns

Modern microservices must anticipate and gracefully handle failure scenarios. Resilience4j examples showcase how the library enables services to recover from latency, failures, and resource bottlenecks using focused resilience patterns in microservices.

The three core patterns, Circuit Breaker, Retry, and Bulkhead, work together to build highly resilient microservices in Java.

Circuit Breaker

What is a Circuit Breaker?

The Circuit Breaker pattern in microservices prevents a service from repeatedly calling a failing downstream service. Instead of overwhelming the system with retries or blocking threads, it opens the circuit after a failure threshold is reached. This fail-fast mechanism helps avoid cascading failures and gives failing services time to recover.

Why It’s Important

- Prevents cascading failures by stopping calls to unhealthy services.

- Helps recover gracefully from transient issues.

- Reduces system load during failure periods.

Circuit Breaker States

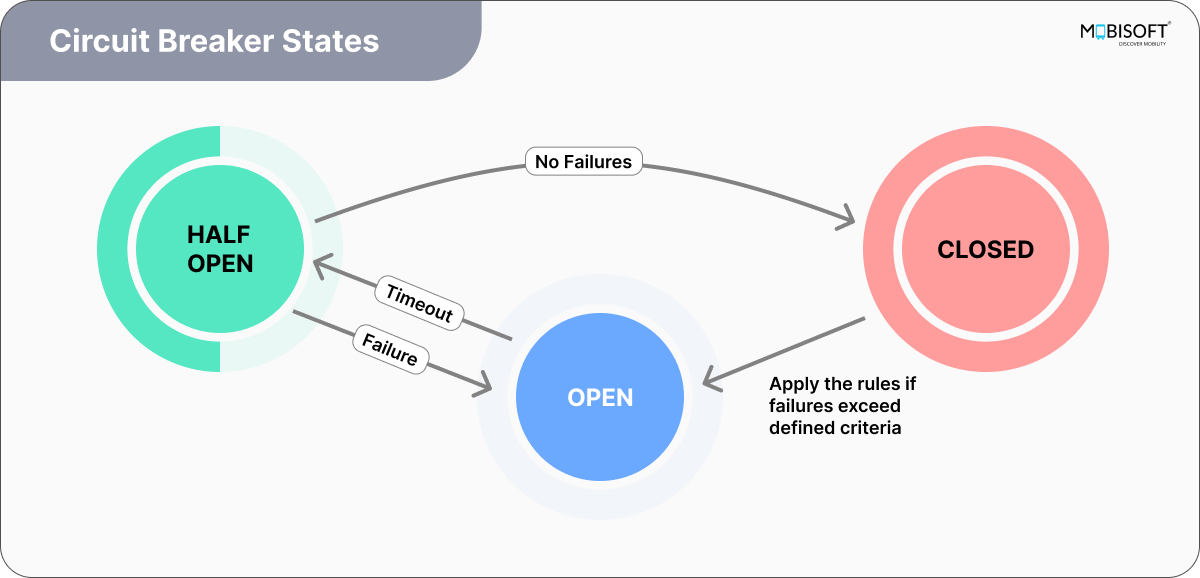

A Circuit Breaker in Resilience4j transitions through three states to manage backend failures:

- Closed: All requests are allowed through. The circuit breaker monitors failures. If the failure rate exceeds the configured threshold, it opens the circuit.

- Open: All requests are rejected immediately. This state prevents the system from sending load to a failing service. The circuit remains open for the configured wait duration.

- Half-Open: After the wait period, a limited number of requests are allowed through. If they succeed, the circuit closes. If failures continue, the circuit returns to the open state and waits again for the configured duration.

This Resilience4j configuration ensures unstable services don’t degrade the system and enables automatic recovery without manual intervention.
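The three states can be illustrated with a small, library-free state machine. This is a minimal sketch, not Resilience4j's implementation: the class name `SimpleCircuitBreaker` is hypothetical, it counts consecutive failures instead of a failure rate over a sliding window, and it takes an injectable clock so the transitions can be exercised without real waiting.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

/** Illustrative three-state breaker (hypothetical class, not part of Resilience4j). */
final class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold;  // consecutive failures that open the circuit (a simplification)
    private final long waitMillis;       // how long to stay OPEN before allowing probe calls
    private final int halfOpenCalls;     // successful probe calls needed to close the circuit again
    private final LongSupplier clock;    // time source in milliseconds, injectable for testing

    private State state = State.CLOSED;
    private int failures = 0;
    private int probeSuccesses = 0;
    private long openedAt = 0L;

    SimpleCircuitBreaker(int failureThreshold, long waitMillis, int halfOpenCalls, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.waitMillis = waitMillis;
        this.halfOpenCalls = halfOpenCalls;
        this.clock = clock;
    }

    synchronized State state() {
        return state;
    }

    /** Runs the action unless the circuit is open; synchronized for simplicity, so calls are serialized. */
    synchronized <T> T call(Supplier<T> action, Supplier<T> fallback) {
        if (state == State.OPEN) {
            if (clock.getAsLong() - openedAt < waitMillis) {
                return fallback.get();   // fail fast: the action is not invoked at all
            }
            state = State.HALF_OPEN;     // wait period elapsed: let probe calls through
            probeSuccesses = 0;
        }
        try {
            T result = action.get();
            onSuccess();
            return result;
        } catch (RuntimeException e) {
            onFailure();
            return fallback.get();
        }
    }

    private void onSuccess() {
        if (state == State.HALF_OPEN) {
            if (++probeSuccesses >= halfOpenCalls) {
                state = State.CLOSED;
                failures = 0;
            }
        } else {
            failures = 0;                // a success resets the consecutive-failure count
        }
    }

    private void onFailure() {
        // Any failure while probing, or too many consecutive failures while closed, opens the circuit.
        if (state == State.HALF_OPEN || ++failures >= failureThreshold) {
            state = State.OPEN;
            openedAt = clock.getAsLong();
            failures = 0;
        }
    }
}
```

With a threshold of 2, two failing calls in a row open the circuit; further calls return the fallback without touching the action until the wait period has passed.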

Key Circuit Breaker Configuration Properties in Resilience4j

- slidingWindowSize

Defines the number of recent calls considered for failure rate calculation.

Default: 100

Example: slidingWindowSize=5

Smaller values (e.g., 5) make the circuit breaker more sensitive to recent changes.

- failureRateThreshold

Sets the percentage of failed calls required to open the circuit breaker.

Default: 50%

Example: failureRateThreshold=50

If 50% of recent calls fail, the circuit breaker opens. A higher threshold makes it more lenient.

- waitDurationInOpenState

Specifies how long the circuit breaker stays open before transitioning to half-open state.

Default: 60 seconds

Example: waitDurationInOpenState=10s

After opening, it remains open for the specified time before testing with a limited number of requests.

- permittedNumberOfCallsInHalfOpenState

Defines how many calls are allowed in the half-open state to test backend recovery.

Default: 10

Example: permittedNumberOfCallsInHalfOpenState=2

Fewer calls (e.g., 2) help evaluate if the backend has recovered before returning to a closed state.

- minimumNumberOfCalls

Specifies the minimum number of calls before evaluating whether the circuit breaker should open.

Default: 100

Example: minimumNumberOfCalls=5

Prevents premature opening when there aren't enough calls to assess failure rate.

- automaticTransitionFromOpenToHalfOpenEnabled

If true, the circuit breaker automatically transitions from open to half-open state after the wait duration.

Default: false

Example: automaticTransitionFromOpenToHalfOpenEnabled=true

When this is false, the circuit breaker moves to half-open only when a call arrives after the wait duration has elapsed.
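To see how slidingWindowSize, minimumNumberOfCalls, and failureRateThreshold interact, the following is a minimal sketch of a count-based window in plain Java. The class `CountBasedWindow` is hypothetical and only mirrors the rules described above (no decision before the minimum number of calls; open when the failure rate reaches the threshold); it is not the library's internal data structure.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Hypothetical count-based sliding window that tracks the failure rate of the most recent calls. */
final class CountBasedWindow {
    private final int windowSize;           // corresponds to slidingWindowSize
    private final int minimumCalls;         // corresponds to minimumNumberOfCalls
    private final double thresholdPercent;  // corresponds to failureRateThreshold
    private final Deque<Boolean> outcomes = new ArrayDeque<>(); // true = failed call
    private int failures = 0;

    CountBasedWindow(int windowSize, int minimumCalls, double thresholdPercent) {
        this.windowSize = windowSize;
        this.minimumCalls = minimumCalls;
        this.thresholdPercent = thresholdPercent;
    }

    /** Records one call outcome and reports whether the circuit should open. */
    boolean recordAndCheck(boolean failed) {
        outcomes.addLast(failed);
        if (failed) {
            failures++;
        }
        // Evict the oldest outcome once the window is full.
        if (outcomes.size() > windowSize && outcomes.removeFirst()) {
            failures--;
        }
        if (outcomes.size() < minimumCalls) {
            return false;                   // not enough calls yet to judge the failure rate
        }
        return failureRatePercent() >= thresholdPercent;
    }

    double failureRatePercent() {
        return outcomes.isEmpty() ? 0.0 : 100.0 * failures / outcomes.size();
    }
}
```

With the tutorial's values (window 5, minimum 5, threshold 50), two failures in the first two calls do not open the circuit; the decision is only made once five calls have been recorded.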

Retry

What is a Retry Pattern?

Retry is a resilience pattern in microservices used to handle transient faults: temporary glitches such as network hiccups or a temporarily overloaded service. Instead of failing on the first attempt, it automatically re-attempts the call after a delay, increasing the likelihood of success. This retry pattern in microservices is often implemented using libraries like Resilience4j in Spring Boot.

Why It’s Important

- Handles intermittent issues that may resolve quickly.

- Improves system robustness without manual retries.

- Helps smooth out temporary spikes in failure rates.

Key Retry Configuration Properties in Resilience4j

- maxAttempts

Defines the maximum number of attempts for a call, including the initial call plus retries.

Default: 3

Example: maxAttempts=3

This means the call will be tried up to 3 times before giving up. If the first attempt fails (due to an exception or specific failure conditions), Resilience4j will automatically retry according to the configured policy.

- waitDuration

Specifies the fixed wait time between retry attempts.

Default: 500ms

Example: waitDuration=2s

After a failed call, Resilience4j waits for this duration before trying again. Increasing this delay can help reduce the load on a struggling downstream service and give it time to recover.

To further improve fault tolerance, resilience4j retry supports backoff strategies like exponential or random delays, helping avoid synchronized retries from multiple services that could lead to spikes in load.
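The retry loop and an exponential backoff schedule can be sketched without the library. This is an illustrative assumption of how such a loop might look, not Resilience4j's code: `SimpleRetry`, `execute`, and `backoffMillis` are hypothetical names, and a multiplier of 1.0 gives the fixed wait described above.

```java
import java.util.function.Supplier;

/** Hypothetical retry helper with fixed or exponential waits between attempts. */
final class SimpleRetry {
    private SimpleRetry() { }

    /** Wait before retry number retryNumber (1-based): initialWaitMillis * multiplier^(retryNumber - 1). */
    static long backoffMillis(long initialWaitMillis, double multiplier, int retryNumber) {
        return Math.round(initialWaitMillis * Math.pow(multiplier, retryNumber - 1));
    }

    /** Tries the action up to maxAttempts times in total (initial call included). */
    static <T> T execute(Supplier<T> action, int maxAttempts, long initialWaitMillis, double multiplier) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        RuntimeException lastFailure = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.get();
            } catch (RuntimeException e) {
                lastFailure = e;
                if (attempt == maxAttempts) {
                    break;                               // attempts exhausted: give up
                }
                try {
                    Thread.sleep(backoffMillis(initialWaitMillis, multiplier, attempt));
                } catch (InterruptedException interrupted) {
                    Thread.currentThread().interrupt();  // preserve the interrupt and stop retrying
                    break;
                }
            }
        }
        throw lastFailure;                               // surface the last failure to the caller
    }
}
```

With maxAttempts=3 and a fixed 2s wait, a call that never succeeds waits twice, so the caller sees the failure after roughly 4 seconds plus the time of the three attempts themselves.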

Best Practice: Combine Retry with Circuit Breaker. Retry alone may exacerbate failures by increasing load; Circuit Breaker can guard against retry storms.

Bulkhead

What is a Bulkhead Pattern?

Inspired by ship design, the bulkhead pattern in microservices limits the number of concurrent requests to a service or resource. Just as watertight compartments prevent an entire ship from sinking, resilience patterns in Java, like bulkheads, prevent failures in one part of a system from cascading.

Why it's important

Without bulkheads, a slow or failing dependency can monopolize system resources like threads or database connections. This often results in a complete system outage. The Resilience4j bulkhead pattern helps isolate failures and maintain system responsiveness by limiting the scope of impact.

Key Bulkhead Configuration Properties in Resilience4j

- maxConcurrentCalls

Defines the maximum number of concurrent calls allowed at any given time.

Default: 25

Example: maxConcurrentCalls=3

This limit ensures that only the specified number of calls (e.g., 3) are processed concurrently. Additional calls are either rejected immediately (if maxWaitDuration=0) or wait for a free spot.

- maxWaitDuration

Specifies how long a call should wait to acquire a permit before being rejected.

Default: 0 (no wait)

Example: maxWaitDuration=500ms

When maxWaitDuration > 0, excess calls will wait for an available spot, up to the specified duration. This reduces immediate rejections but may increase latency when the system is under heavy load.
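The interplay of maxConcurrentCalls and maxWaitDuration maps naturally onto a counting semaphore. The sketch below is a simplified illustration using `java.util.concurrent.Semaphore`; the class `SemaphoreBulkhead` is hypothetical and returns a fallback value on rejection instead of throwing, which is an assumption made to keep the example short.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

/** Hypothetical semaphore-based bulkhead limiting the number of concurrent calls. */
final class SemaphoreBulkhead {
    private final Semaphore permits;
    private final long maxWaitMillis;

    SemaphoreBulkhead(int maxConcurrentCalls, long maxWaitMillis) {
        this.permits = new Semaphore(maxConcurrentCalls, true); // fair: waiting callers are served in order
        this.maxWaitMillis = maxWaitMillis;
    }

    /** Runs the action if a permit is free (or frees up within maxWaitMillis); otherwise uses the fallback. */
    <T> T call(Supplier<T> action, Supplier<T> fallback) {
        boolean acquired;
        try {
            // With a timeout of 0 this does not wait at all: the call is rejected immediately when full.
            acquired = permits.tryAcquire(maxWaitMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            acquired = false;
        }
        if (!acquired) {
            return fallback.get();
        }
        try {
            return action.get();
        } finally {
            permits.release();   // always return the permit, even if the action throws
        }
    }

    int availablePermits() {
        return permits.availablePermits();
    }
}
```

A bulkhead created with `new SemaphoreBulkhead(3, 0)` would behave like the configuration used later in this tutorial: a fourth simultaneous call is rejected without waiting.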

Integrating Resilience4j in a Spring Boot Application

To demonstrate this, we’ll create two Spring Web applications: one serving as the frontend aggregator and the other as a fragile backend service.

The frontend aggregator will be responsible for making HTTP calls to the backend, and it will incorporate Resilience4j to showcase the use of Circuit Breaker, Retry and Bulkhead patterns along with optional fallback methods to demonstrate graceful handling of the scenarios.

The backend service, for simplicity, will expose a basic API endpoint that fails at random so that we can test the resilience mechanisms in action.

Frontend Application Setup :

Dependencies and configuration

First, include the necessary dependencies in your `pom.xml` if you’re using Maven.

The Resilience4j annotations rely on Spring AOP, so include the Spring AOP starter as well.

    <!-- Spring Boot Web -->
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>

    <!-- Resilience4j integration for Spring Boot 3 -->
    <dependency>
        <groupId>io.github.resilience4j</groupId>
        <artifactId>resilience4j-spring-boot3</artifactId>
        <version>2.1.0</version> <!-- Or the latest stable -->
    </dependency>

    <!-- Required for annotations like @CircuitBreaker, @Bulkhead -->
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-aop</artifactId>
    </dependency>

And for Gradle:

    // Spring Boot Web
    implementation 'org.springframework.boot:spring-boot-starter-web'

    // Resilience4j Spring Boot 3 support
    implementation 'io.github.resilience4j:resilience4j-spring-boot3:2.1.0'

    // Required for annotations like @CircuitBreaker, @Bulkhead
    implementation 'org.springframework.boot:spring-boot-starter-aop'

In the application.properties file, we define the configuration for the circuit breaker, retry, and bulkhead instances.

    spring.application.name=aggregator
    server.port=8080
    backend.service.url=http://localhost:8081/data

    # Resilience4j - Circuit Breaker with name demoCircuitBreaker
    resilience4j.circuitbreaker.instances.demoCircuitBreaker.slidingWindowSize=5
    resilience4j.circuitbreaker.instances.demoCircuitBreaker.failureRateThreshold=50
    resilience4j.circuitbreaker.instances.demoCircuitBreaker.waitDurationInOpenState=10s
    resilience4j.circuitbreaker.instances.demoCircuitBreaker.permittedNumberOfCallsInHalfOpenState=2
    resilience4j.circuitbreaker.instances.demoCircuitBreaker.minimumNumberOfCalls=5
    resilience4j.circuitbreaker.instances.demoCircuitBreaker.automaticTransitionFromOpenToHalfOpenEnabled=true

    # Resilience4j - Retry with name demoRetry
    resilience4j.retry.instances.demoRetry.maxAttempts=3
    resilience4j.retry.instances.demoRetry.waitDuration=2s

    # Resilience4j - Bulkhead with name demoBulkhead
    # Only 3 concurrent calls are allowed.
    # Any 4th+ call that comes in at the same time will be immediately rejected.
    resilience4j.bulkhead.instances.demoBulkhead.maxConcurrentCalls=3
    resilience4j.bulkhead.instances.demoBulkhead.maxWaitDuration=0

If you are using application.yml:

    spring:
      application:
        name: aggregator

    server:
      port: 8080

    backend:
      service:
        url: http://localhost:8081/data

    resilience4j:
      circuitbreaker:
        instances:
          demoCircuitBreaker:
            slidingWindowSize: 5
            failureRateThreshold: 50
            waitDurationInOpenState: 10s
            permittedNumberOfCallsInHalfOpenState: 2
            minimumNumberOfCalls: 5
            automaticTransitionFromOpenToHalfOpenEnabled: true
      retry:
        instances:
          demoRetry:
            maxAttempts: 3
            waitDuration: 2s
      bulkhead:
        instances:
          demoBulkhead:
            maxConcurrentCalls: 3
            maxWaitDuration: 0

Controller implementation with resilience annotations

Define a controller with endpoints that invoke the backend service API, incorporating resilience mechanisms such as CircuitBreaker, Retry and Bulkhead as configured in the application properties, to handle potential failures gracefully with fallback methods. We have used RestTemplate to make the API call to the backend service.

To make concurrent calls overlap in the bulkhead test, the bulkhead endpoint sleeps for 5 seconds before calling the backend.

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;
    import org.springframework.web.client.RestTemplate;

    import io.github.resilience4j.bulkhead.annotation.Bulkhead;
    import io.github.resilience4j.circuitbreaker.annotation.CircuitBreaker;
    import io.github.resilience4j.retry.annotation.Retry;

    @RestController
    public class FrontendController {

        private static final Logger LOGGER = LoggerFactory.getLogger(FrontendController.class);

        private final RestTemplate restTemplate;

        @Value("${backend.service.url}")
        private String backendServiceUrl;

        public FrontendController(RestTemplate restTemplate) {
            this.restTemplate = restTemplate;
        }

        @GetMapping("/api/circuit-breaker")
        @CircuitBreaker(name = "demoCircuitBreaker", fallbackMethod = "circuitBreakerFallback")
        public String getCircuitBreakerResponse() {
            return getResponseFromBackend();
        }

        // The name must match the instance configured under resilience4j.retry.instances.
        @GetMapping("/api/retry")
        @Retry(name = "demoRetry", fallbackMethod = "retryFallback")
        public String getRetryResponse() {
            LOGGER.info("Trying call in thread: {}", Thread.currentThread().getName());
            return getResponseFromBackend();
        }

        @GetMapping("/api/bulkhead")
        @Bulkhead(name = "demoBulkhead", type = Bulkhead.Type.SEMAPHORE, fallbackMethod = "bulkheadFallback")
        public String getResponse() {
            try {
                LOGGER.info("Processing request in thread: {}", Thread.currentThread().getName());
                // Hold the permit for 5 seconds so that parallel requests overlap.
                Thread.sleep(5000);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            return getResponseFromBackend();
        }

        private String circuitBreakerFallback(Throwable throwable) {
            LOGGER.info("Circuit breaker fallback triggered: {}", throwable.getClass().getSimpleName());
            return "Fallback: Circuit breaker is open - " + throwable.getMessage();
        }

        private String retryFallback(Throwable throwable) {
            LOGGER.info("Retry fallback triggered: {}", throwable.getClass().getSimpleName());
            return "Retry Fallback: Failed after retries - " + throwable.getMessage();
        }

        private String bulkheadFallback(Throwable throwable) {
            LOGGER.info("Bulkhead fallback triggered: {}", throwable.getClass().getSimpleName());
            return "Bulkhead Fallback: Too many concurrent calls";
        }

        private String getResponseFromBackend() {
            return restTemplate.getForObject(backendServiceUrl, String.class);
        }
    }

What This Does:

1. @CircuitBreaker(name = "demoCircuitBreaker", fallbackMethod = "circuitBreakerFallback"):

- Protects against failing calls to the backend. If the backend fails repeatedly, it "opens" the circuit and returns a fallback response.

- Executes the fallback method circuitBreakerFallback whenever the call fails or the circuit is open.

2. @Retry(name = "demoRetry", fallbackMethod = "retryFallback"):

- Retries failed backend calls a configurable number of times before ultimately failing and returning a fallback response.

- Executes the fallback method retryFallback once all attempts have failed.

3. @Bulkhead(name = "demoBulkhead", type = Bulkhead.Type.SEMAPHORE, fallbackMethod = "bulkheadFallback"):

- Limits the number of concurrent calls to the backend. If the limit is reached, the request waits for up to maxWaitDuration and is then rejected; with maxWaitDuration=0 it is rejected immediately.

- Executes the fallback method bulkheadFallback when a call is rejected by the bulkhead.

Also, we need to define the RestTemplate bean in the application class.

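    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.EnableAspectJAutoProxy;
    import org.springframework.web.client.RestTemplate;

    @SpringBootApplication
    @EnableAspectJAutoProxy
    public class FrontendApplication {

        public static void main(String[] args) {
            SpringApplication.run(FrontendApplication.class, args);
        }

        // Shared RestTemplate used by FrontendController to call the backend.
        @Bean
        RestTemplate restTemplate() {
            return new RestTemplate();
        }
    }

The frontend application is now ready to run on port 8080.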

Backend Application Setup :

Dependencies and configuration

Now to build a simple Spring web application with an endpoint simulating different failure scenarios, we need to include the following dependencies. If you're also planning to implement asynchronous communication, integrating Spring Boot with Apache Kafka can enhance fault isolation and event-driven resilience.

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>

The application.properties file needs only the basic configuration below.

    spring.application.name=backend
    server.port=8081

If you are using application.yml:

    spring:
      application:
        name: backend

    server:
      port: 8081

Simulating failures in the backend service

Create a BackendController class to handle incoming requests from the frontend service with an endpoint that generates random failures.

    import java.util.Random;

    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RequestMapping("/data")
    @RestController
    public class BackendController {

        private final Random random = new Random();

        @GetMapping
        public String getData() {
            // Fail roughly half of the requests to simulate an unstable backend.
            if (random.nextBoolean()) {
                throw new RuntimeException("Simulated backend failure");
            }
            return "Backend: Success!";
        }
    }

The backend application is now ready to run on port 8081.

Testing Resilience Patterns

In order to check the resilience patterns in action, run both applications on defined ports and try accessing the frontend APIs.

Testing the scenarios:

Once both frontend (port 8080) and backend (port 8081) services are running, use the approaches below to simulate failures and observe how each Resilience4j pattern behaves.

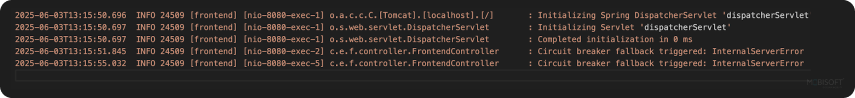

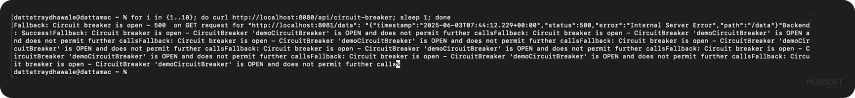

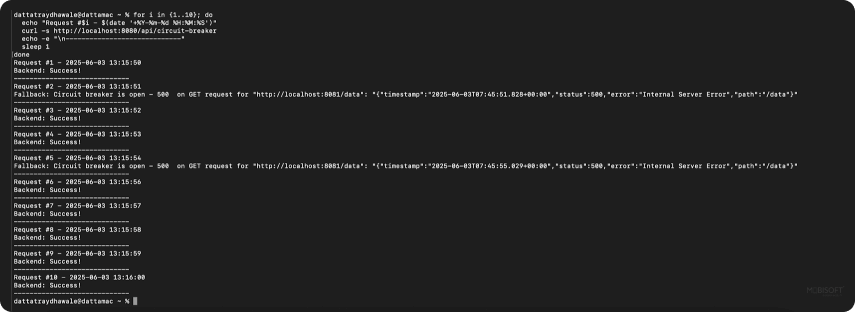

1. Circuit Breaker

Endpoint: http://localhost:8080/api/circuit-breaker

Test Objective: Simulate repeated backend failures to trip the circuit breaker.

Steps:

- Call the endpoint multiple times (at least 5 times, since slidingWindowSize=5 and minimumNumberOfCalls=5).

- Ensure the backend returns a failure for at least 50% of calls (failureRateThreshold=50).

- Observe the fallback response for failed calls, for example:

Fallback: Circuit breaker is open - 500 on GET request for "http://localhost:8081/data"

- Once the threshold is reached, the circuit opens and calls return the fallback immediately without reaching the backend.

Verify Logs:

- Fallback method gets triggered, logs are visible in the terminal.

Curl test loop:

for i in {1..10}; do curl http://localhost:8080/api/circuit-breaker; sleep 1; done

For more readable output in the terminal:

    for i in {1..10}; do
      echo "Request #$i - $(date '+%Y-%m-%d %H:%M:%S')"
      curl -s http://localhost:8080/api/circuit-breaker
      echo -e "\n-----------------------------"
      sleep 1
    done

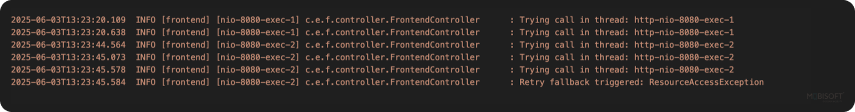

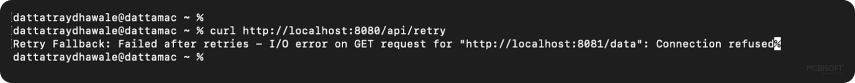

2. Retry

Endpoint: http://localhost:8080/api/retry

Test Objective: Confirm that failed calls are retried automatically.

Steps:

- Call the endpoint.

- If the backend randomly fails, the frontend will try the call up to 3 times in total (maxAttempts=3).

- After 3 failed attempts, the fallback is triggered with a response that starts with:

"Retry Fallback: Failed after retries"

- If you cannot trigger the fallback, stop the backend service running on port 8081. Each attempt then appears in the logs, up to the configured maximum (3 in this case):

"Trying call in thread: http-nio-8080-exec-1"

Verify Logs:

- API calls are logged

- The fallback method is only called after all retries are exhausted.

Curl test:

curl http://localhost:8080/api/retry

Run multiple times to observe retry and fallback behavior on failure.

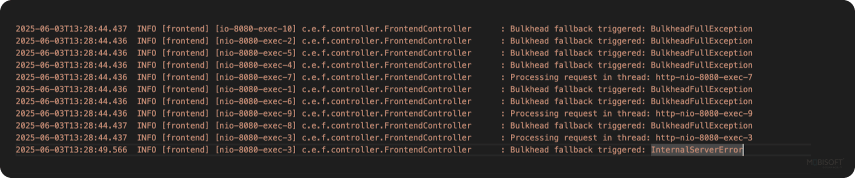

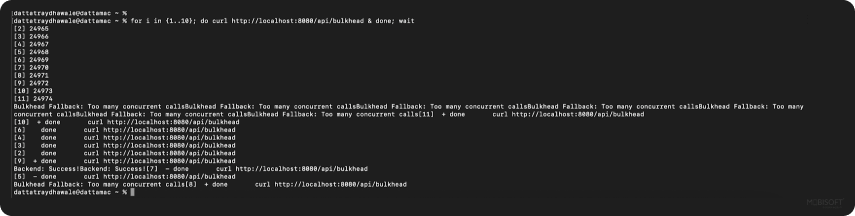

3. Bulkhead

Endpoint: http://localhost:8080/api/bulkhead

Test Objective: Simulate concurrent load to trigger bulkhead limits.

Steps:

- The frontend API sleeps for 5 seconds before calling the backend.

- Bulkhead allows only 3 concurrent calls (maxConcurrentCalls=3).

- Fire 10 parallel requests to simulate load.

Expected Behavior:

- The first 3 requests are processed.

- Remaining calls are rejected immediately because maxWaitDuration=0.

- Rejected requests return:

"Bulkhead Fallback: Too many concurrent calls"

Curl load test:

for i in {1..10}; do curl http://localhost:8080/api/bulkhead & done; wait

Verify Logs:

- Log should show "Bulkhead fallback triggered" for rejected requests.

- The backend should only receive up to 3 requests at once.

Recap of Resilience Patterns Used

In a microservices architecture, resilience patterns in Java help mitigate risks from downstream failures. Below are the three core Resilience4j patterns used in this tutorial:

- Circuit Breaker: Prevents repeated calls to a failing backend by "opening" the circuit after a failure threshold is reached, allowing the system to recover without additional load.

- Retry: Automatically re-attempts failed backend calls a configured number of times, helping recover from transient issues like network blips or temporary service unavailability.

- Bulkhead: Limits the number of concurrent backend calls to prevent resource exhaustion and contain failures within specific service boundaries.

Real-World Benefits of These Patterns

These microservices resilience patterns don’t just sound good in theory; they translate into practical, operational benefits:

- System Stability: Prevents one failing component from cascading into widespread failure across services.

- Better Resource Utilization: Bulkhead, Circuit Breaker, and Retry help optimize thread usage and reduce unnecessary strain on downstream services.

- Improved User Experience: Users see graceful fallbacks instead of raw exceptions or long timeouts, leading to a more resilient front-end experience.

- Self-Healing Capabilities: Retry allows services to recover from temporary failures without requiring manual intervention.

- Operational Visibility: With built-in metrics and Spring Boot Actuator integration, these patterns offer valuable insight into service health and performance.

- Scalability: These resilience techniques help microservices remain robust under high load, improving fault isolation and supporting dynamic scaling in production.

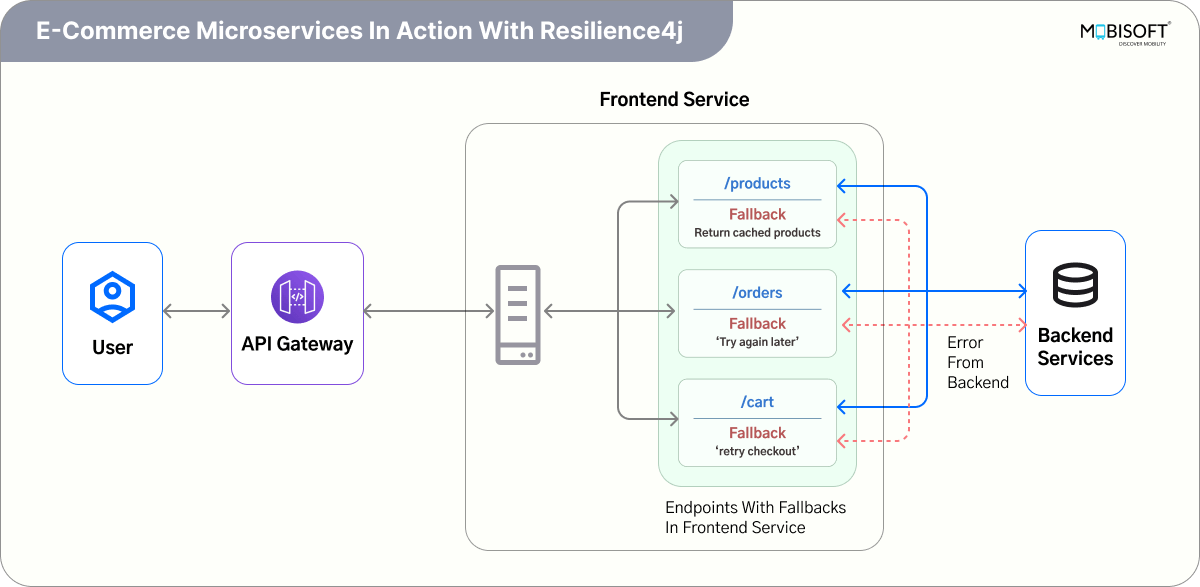

E-commerce Microservices in Action with Resilience4j

Above is a simple diagram of an E-commerce application with different services like products, orders, and carts. These services work together to handle user interactions such as browsing products, managing the shopping cart, and placing orders. To make the system more resilient and user-friendly, we apply different Resilience4j patterns. Let’s look at three of them in the context of this application:

Product Service – Circuit Breaker

Your Product Service is down due to a database outage. If your Frontend Service keeps trying to call it, every request takes time to fail, and wastes system resources.

With Circuit Breaker:

After a threshold of failures, the circuit opens. All future calls are blocked immediately (and optionally routed to a fallback), avoiding further strain and speeding up failure responses.

Example fallback response: “Products are temporarily unavailable. Showing top-rated items from cache.”

Benefit: Prevents cascading failures and keeps the user experience responsive even when services are down.
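The "serve from cache" fallback mentioned above could be sketched as a small wrapper that remembers the last successful result. This is an illustration only: `LastKnownGood` and `getOrFallback` are hypothetical names, and a real product service would likely use a proper cache with expiry rather than a single field.

```java
import java.util.function.Supplier;

/** Hypothetical helper: remembers the last successful result and serves it when the primary call fails. */
final class LastKnownGood<T> {
    private volatile T cached;   // last successful result, or null if there has been none yet

    T getOrFallback(Supplier<T> primary, T defaultValue) {
        try {
            T fresh = primary.get();
            cached = fresh;      // refresh the cached copy on every success
            return fresh;
        } catch (RuntimeException e) {
            T snapshot = cached;
            // Prefer stale data over an error; fall back to the default if nothing was ever cached.
            return snapshot != null ? snapshot : defaultValue;
        }
    }
}
```

In the Spring Boot setup from this tutorial, the same idea would live inside the method named by fallbackMethod.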

Order Service – Retry

Sometimes, placing an order fails due to a temporary network glitch or a short-lived backend issue. If the request is not retried, the order fails even though the service may recover in a second.

With Retry:

The system automatically re-invokes the failed call a defined number of times with optional delays. If the issue is transient, the retry succeeds and the order is placed without the user noticing any problem.

Benefit: Helps recover from momentary errors and improves the overall success rate of operations.

Cart Service – Bulkhead

Let’s say the Cart Service becomes slow due to a spike in user activity or a backend bottleneck. Without proper isolation, it can consume too many threads and degrade the performance of the entire system.

With Bulkhead:

Each service is allocated a fixed number of threads or resources. If the Cart Service gets overloaded, only its share of resources is affected; other services like Products and Orders remain unaffected and continue working smoothly.

Benefit: Limits the blast radius of failures and ensures system stability under load.
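Giving each downstream service its own bounded thread pool is one way to realize this isolation. The following sketch uses only `java.util.concurrent`; the class `ServicePools`, its method names, and the pool sizes are assumptions for illustration and do not reflect how Resilience4j's thread-pool bulkhead is implemented.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Hypothetical helper that gives each downstream service its own bounded thread pool. */
final class ServicePools {
    private ServicePools() { }

    /** Fixed number of threads plus a bounded queue; extra work is rejected instead of piling up. */
    static ExecutorService boundedPool(int threads, int queueCapacity) {
        return new ThreadPoolExecutor(threads, threads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(queueCapacity), new ThreadPoolExecutor.AbortPolicy());
    }

    /** Runs the task on the given pool and returns the fallback if the pool is saturated, slow, or failing. */
    static String callOrFallback(ExecutorService pool, Callable<String> task, String fallback, long timeoutMillis) {
        try {
            return pool.submit(task).get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (RejectedExecutionException | ExecutionException | TimeoutException e) {
            return fallback;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return fallback;
        }
    }
}
```

If the cart pool's single thread and queue slot are both occupied by slow calls, further cart requests get the fallback at once, while a separate products pool keeps serving normally.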

These Resilience4j patterns help ensure that even when something goes wrong in one part of the system, the rest of the application continues to function reliably, delivering a smoother experience for your customers.

Conclusion

In this comprehensive tutorial, we demonstrated how to apply Resilience4j Circuit Breaker, Retry, and Bulkhead patterns within a Spring Boot microservices architecture. Using a frontend-backend simulation, we introduced real failure scenarios and implemented robust resilience mechanisms to handle them.

Building resilient microservices is no longer optional; it's a necessity in modern distributed systems where partial failures, latency spikes, and overloaded services are everyday realities. Patterns like Circuit Breaker, Retry, and Bulkhead provide time-tested strategies to handle these issues gracefully, and Resilience4j brings these patterns to your Java applications in a lightweight, modular, and easy-to-integrate form.

For teams building cloud-native microservices, Resilience4j simplifies the integration of failure handling mechanisms without adding complexity.

By proactively designing your microservices to handle failure scenarios, you improve system availability, protect critical resources, and deliver a better user experience even under duress.

If you're building microservices with Java or Spring Boot, adopting Resilience4j is a smart step toward making your services more robust, self-healing, and production-ready. It's easy to configure, well-documented, and aligns perfectly with modern resilience engineering practices.

Start small, maybe with a Circuit Breaker on one critical dependency, and evolve your resilience strategy as your system grows.

You can explore the complete source code and implementation details on our official GitHub repository. See the official Resilience4j documentation for more configuration options for all resilience patterns.

June 13, 2025
