Vibe-coded apps are fast, flashy, and often built as MVPs in just days or weeks using AI coding assistants, no-code platforms, or low-code tools. That speed is very helpful when you need a working app to take to market.

AI now produces 41% of all code, with 256 billion lines written in 2024 alone. Recent surveys indicate that 44% of non-technical founders now build their initial prototypes with AI coding assistants rather than outsourcing to developers. But this speed has its downsides. Features start breaking when you make even small changes. You can't remember where specific logic lives in the code. Users report bugs that you cannot reliably reproduce. Worst of all, you become afraid to touch the AI-generated codebase.

If this sounds familiar, welcome to the world of vibe-coded bug chaos. This guide will help you find a way out by showing how to approach debugging AI-generated code and stabilizing these fragile applications. For a more structured approach, explore our AI development services for expert guidance on AI code optimization.

What Makes Vibe-Coded Apps So Bug-Prone?

Vibe-coded applications are often built in a rush. With AI-based coding platforms and no-code builders, teams can build and test apps in just weeks. That is very useful for showing a working AI-generated prototype in investor meetings. But the accelerated pace often hides deep structural risks that surface later.

The real challenge surfaces when these apps move beyond early prototypes. Features start breaking without clear reasons. Developers hesitate to touch the code for fear of creating new issues. The result is a fragile product that cannot grow with users or scale with demand. Let’s look at why these applications are especially prone to bugs.

Lack of Clear Architecture

Most vibe-coded projects skip structured planning. Code lands wherever it fits: inside UI components, in small scripts, or in glue code wired to third-party services. Without separation of concerns, it becomes difficult to trace how logic flows. Over time, this scattered design causes small changes in one place to ripple unpredictably into another, a major challenge for bug fixing in AI-generated code.

AI-Generated Guesswork

AI coding assistants are built to predict "what code looks right." They have no built-in knowledge of your system's specific context. Research from Georgetown's CSET found that nearly 50% of code snippets generated by top-tier models contained bugs, many with security implications. Flaws such as inadequate input checking and poorly optimized loops often require bug fixing in AI-generated code, since they can cause subtle memory leaks, silent performance degradation, or broken functionality that appears only as the app grows.
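As a hypothetical illustration of a "poorly optimized loop" (not an example taken from the CSET study), here is a deduplication pattern assistants often produce, next to a linear-time rewrite. Both return the same result; only the cost changes as the data grows, which is why this kind of flaw stays invisible in a demo.

```python
def dedupe_emails_slow(emails: list[str]) -> list[str]:
    # Typical generated pattern: `in` on a list scans every element,
    # so the whole loop is O(n^2). Fine for 50 users, painful for 500,000.
    seen: list[str] = []
    for email in emails:
        if email not in seen:
            seen.append(email)
    return seen


def dedupe_emails(emails: list[str]) -> list[str]:
    # Same behavior (first occurrence wins, order preserved), but set
    # lookups are O(1) on average, so the loop is O(n) overall.
    seen: set[str] = set()
    result: list[str] = []
    for email in emails:
        if email not in seen:
            seen.add(email)
            result.append(email)
    return result


if __name__ == "__main__":
    sample = ["a@x.io", "b@x.io", "a@x.io"]
    print(dedupe_emails(sample))  # ['a@x.io', 'b@x.io']
```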

Rapid Iterations Without Testing

Early prototypes focus on validating ideas. Testing feels like a slowdown. So teams often skip writing unit tests or integration suites. This is workable in the short term, but creates issues when multiple features land on top of one another. Without even minimal tests, developers rely on manual checks or user complaints to find problems. This lack of structured testing complicates automated code debugging later when the app starts scaling.

Copy-Paste and Black Box Logic

Many AI and low-code flows feel like “black boxes.” Developers copy a snippet, paste it, and move forward—without understanding how it handles input or manages state. Over time, this creates common bugs in AI-written code that are difficult to trace. The lack of comprehension grows dangerous as more code accumulates, making debugging code written by AI far more complex.

Tightly Coupled Systems

Another recurring weakness is tightly coupled components. UI, data models, and services are often linked without clear contracts. Change a small detail in the login form, and the dashboard stops rendering. Modify a payment handler, and email notifications fail. This tight coupling makes error handling in generated code harder and slows down the work of stabilizing AI-generated applications.
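One way to loosen the payment-and-email coupling described above is a small publish/subscribe contract. The sketch below is a minimal in-process example; `EventBus`, the `"payment.succeeded"` event name, and the handler functions are all hypothetical. The payment code only announces what happened, and a crashing subscriber is logged instead of breaking the payment flow.

```python
import logging
from collections import defaultdict
from typing import Callable

logger = logging.getLogger("events")
Handler = Callable[[dict], None]


class EventBus:
    """Minimal in-process publish/subscribe bus (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event: str, handler: Handler) -> None:
        self._handlers[event].append(handler)

    def publish(self, event: str, payload: dict) -> None:
        for handler in self._handlers[event]:
            try:
                handler(payload)
            except Exception:
                # A failing subscriber is logged, not propagated, so a broken
                # side effect (email) cannot crash the publisher (payment).
                name = getattr(handler, "__name__", repr(handler))
                logger.exception("handler %s failed for %s", name, event)


bus = EventBus()
sent_emails: list[str] = []


def record_payment(user_email: str, amount_cents: int) -> dict:
    # The payment handler knows nothing about email; it only publishes
    # an agreed contract: the event name plus the payload keys.
    receipt = {"email": user_email, "amount_cents": amount_cents}
    bus.publish("payment.succeeded", receipt)
    return receipt


def send_receipt_email(payload: dict) -> None:
    # Stand-in for a real email call; records the recipient instead.
    sent_emails.append(payload["email"])


bus.subscribe("payment.succeeded", send_receipt_email)
```

The trade-off is that swallowed subscriber errors only stay visible if the log is monitored; a production setup would usually move side effects onto a queue with retries.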

Learn more about generative AI solutions for AI code generation best practices.

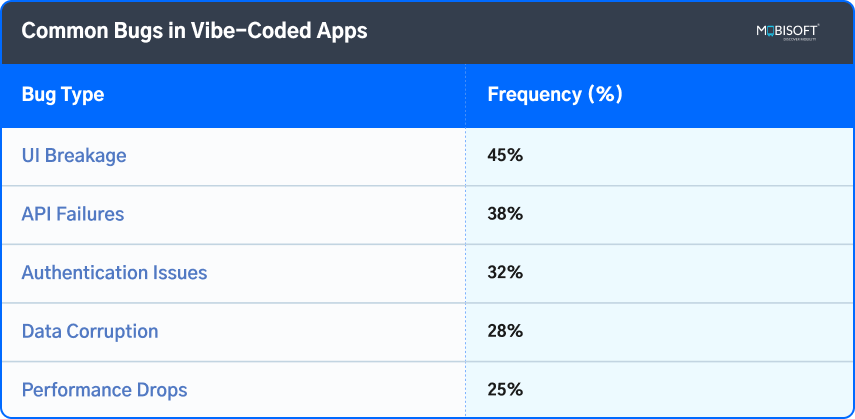

Common Bugs in Vibe-Coded Apps

For continuous stability, consider implementing DevOps for AI applications, including automated bug fixing in AI development.

Step-by-Step: How to Fix Bugs in a Vibe-Coded App

You do not need to throw away your entire application. What you need is structure. A phased process helps you expose weak points, isolate the AI-generated areas that are hardest to debug, and rebuild confidence. Each stage protects the work you already have while laying foundations for stronger releases.

Step 1: Stabilize What You Have

The job starts with visibility. Right now, you may be responding to bug reports as they arrive without patterns or structure. That creates panic. To break that cycle, you must collect, categorize, and monitor.

Create a Bug Tracker

Even a basic bug board is better than scattered notes. It can be a Notion database, a GitHub board, or even a Google Sheet. The goal is clarity. Each time a bug appears, log:

- What the bug is

- Where it shows up

- How often it repeats

- Whether it blocks the user from moving forward

- Whether it can be reproduced step by step
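If the tracker is a spreadsheet, keeping each entry in a fixed shape makes it easy to sort and filter later. Here is a minimal sketch, assuming a hypothetical `BugReport` record whose fields mirror the checklist above and a CSV export that could be pasted into Google Sheets.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class BugReport:
    # One row in the tracker; fields mirror the checklist above.
    title: str               # What the bug is
    location: str            # Where it shows up (page, endpoint, component)
    occurrences: int         # How often it repeats
    blocks_user: bool        # Whether it stops the user from moving forward
    repro_steps: list[str]   # Empty list means "not reproducible yet"

    @property
    def reproducible(self) -> bool:
        return len(self.repro_steps) > 0


def to_csv(bugs: list[BugReport]) -> str:
    # Export for a spreadsheet; steps are joined with " > " in one cell.
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["title", "location", "occurrences", "blocks_user",
                     "reproducible", "repro_steps"])
    for bug in bugs:
        writer.writerow([bug.title, bug.location, bug.occurrences,
                         bug.blocks_user, bug.reproducible,
                         " > ".join(bug.repro_steps)])
    return buffer.getvalue()
```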

Categorize Bugs Into Priority Levels

Once logged, split them into categories:

- Critical: these stop the app from functioning or block core actions.

- Disruptive: these interrupt, frustrate, or confuse users but do not cause total failure.

- Cosmetic: visible issues that do not impact core behavior.

This prioritization helps with bug fixing in AI-generated code, so your team tackles the most damaging problems first.
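The three levels can be encoded as a simple rule so triage is consistent from week to week. The rule set and the `Bug` fields below are hypothetical; the sketch sorts by priority first and, within a level, by how often the bug occurs.

```python
from dataclasses import dataclass
from enum import IntEnum


class Priority(IntEnum):
    # Lower value = fix sooner, so an ascending sort puts critical first.
    CRITICAL = 1
    DISRUPTIVE = 2
    COSMETIC = 3


@dataclass
class Bug:
    title: str
    breaks_core_flow: bool   # app unusable or a core action blocked
    affects_behavior: bool   # interrupts, frustrates, or confuses users
    occurrences: int


def classify(bug: Bug) -> Priority:
    # Hypothetical rules matching the three levels described above.
    if bug.breaks_core_flow:
        return Priority.CRITICAL
    if bug.affects_behavior:
        return Priority.DISRUPTIVE
    return Priority.COSMETIC


def triage(bugs: list[Bug]) -> list[tuple[Priority, Bug]]:
    # Sort by priority, then by frequency (most frequent first).
    ranked = [(classify(b), b) for b in bugs]
    return sorted(ranked, key=lambda pair: (pair[0], -pair[1].occurrences))
```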

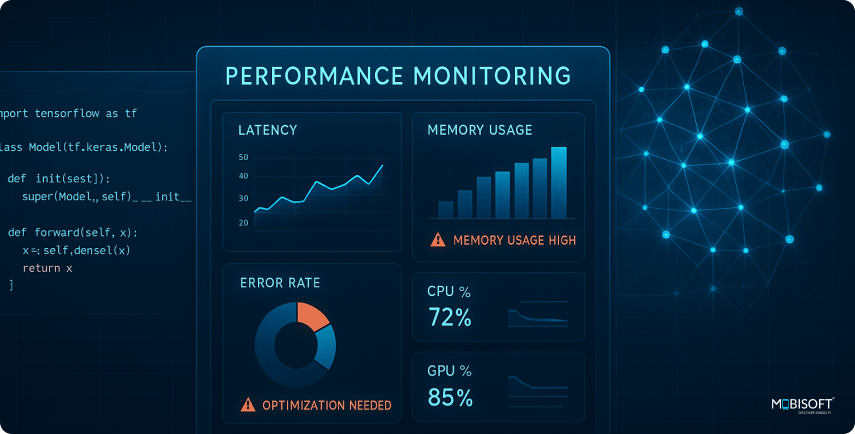

Add Basic Monitoring

Tracking only user reports leaves large blind spots. Implement monitoring tools where possible:

- Sentry for error tracking

- LogRocket or FullStory for seeing real user sessions

- Simple console logs or server logs where advanced tools cannot be used

Monitoring reduces guesswork and supports automated code debugging by showing issues as they occur.
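Where a tool like Sentry is not set up yet, even standard-library logging can capture failures with context. This is a minimal sketch of that fallback: a hypothetical `log_errors` decorator that records the traceback and arguments, then re-raises so nothing is silently swallowed.

```python
import functools
import logging

# Configure once at the app entry point; format is an arbitrary choice.
logging.basicConfig(level=logging.INFO,
                    format="%(asctime)s %(levelname)s %(name)s %(message)s")
logger = logging.getLogger("app")


def log_errors(func):
    """Log any exception with the function name and arguments, then re-raise."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        try:
            return func(*args, **kwargs)
        except Exception:
            # logger.exception logs at ERROR level and includes the traceback.
            logger.exception("%s failed args=%r kwargs=%r",
                             func.__name__, args, kwargs)
            raise  # callers and any monitoring still see the failure
    return wrapper


@log_errors
def apply_discount(price_cents: int, percent: int) -> int:
    # Hypothetical business function wrapped for illustration.
    return price_cents - price_cents * percent // 100
```

Logging raw arguments is convenient but can leak personal data, so sensitive fields should be masked before this goes near production.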

Step 2: Identify the Root Causes of Chaos

Once you are logging bugs, the next task is finding out why they keep appearing. That means examining the structure of your AI-generated codebase.

Questions to ask:

- Are your components too complex or doing multiple jobs at once?

- Is business logic mixed directly into UI code rather than separated?

- Are third-party tools or plugins creating dependencies you cannot control?

- Is data being moved through fragile assumptions that fail in real cases?

Use Tools for Early Discovery

Static analysis platforms like SonarCloud or Codacy can reveal weak spots. If you’re using AI coding assistants, run repo-level audits. This will flag duplicate logic, risky patterns, or dead code. This is a crucial step in debugging code written by AI before issues escalate.

The purpose of this step is diagnosis, not refactoring. Once you see the causes clearly, you will know where to act.

Step 3: Wrap Critical Paths with Tests

A vibe-coded app usually has no tests or has incomplete ones. You cannot cover every single function instantly, but you can put guardrails around the most important flows.

What to Test First

- Sign up and log in

- Payment workflows

- Dashboard data and reporting

- Notifications and webhook callbacks

Even minimal smoke tests catch failures in generated code, including broken error handling, before users do.

Tools to Use

- Cypress or Playwright for web flow testing

- Postman or Hoppscotch for API request and response checks

- Jest with Testing Library for unit tests in React or similar frameworks

Why Even Small Tests Matter

Minimal smoke tests ensure your application still runs after each change. They turn risk into routine. Developers become less afraid to push new code since tests will highlight errors instantly.
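The shape of a smoke suite is the same whether you use Jest, Playwright, or something else: a handful of fast checks on the critical paths. Here is a minimal sketch using Python's built-in `unittest`; the `login` and `checkout_total` functions are hypothetical stand-ins for your app's real code.

```python
import unittest

# Hypothetical stand-ins for the app's real login and checkout logic.
USERS = {"ana@example.com": "correct-horse"}


def login(email: str, password: str) -> bool:
    return USERS.get(email.strip().lower()) == password


def checkout_total(prices_cents: list[int]) -> int:
    if any(p < 0 for p in prices_cents):
        raise ValueError("negative price")
    return sum(prices_cents)


class SmokeTests(unittest.TestCase):
    # One test per critical path; the goal is "does it still run",
    # not exhaustive coverage.

    def test_login_accepts_valid_user(self):
        self.assertTrue(login(" Ana@Example.com ", "correct-horse"))

    def test_login_rejects_wrong_password(self):
        self.assertFalse(login("ana@example.com", "wrong"))

    def test_checkout_sums_cart(self):
        self.assertEqual(checkout_total([1999, 500]), 2499)

    def test_checkout_rejects_bad_input(self):
        with self.assertRaises(ValueError):
            checkout_total([1000, -1])
```

Run it with `python -m unittest` after every change, or wire it into CI so a failing smoke test blocks the merge.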

Step 4: Refactor High-Risk Areas First

You cannot fix everything at once, but you must address recurring failures in AI-generated code.

Where to Start

- Remove logic from the UI and place it into service or domain layers

- Break huge functions into smaller units that are easier to test

- Rewrite unclear AI-generated blocks into code that the team fully understands

- Remove redundant plugins, libraries, or unused dependencies
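The first item, moving logic out of the UI, might look like the sketch below. All names (`Order`, `order_total_cents`, the coupon and tier tables) are hypothetical. The pricing rule moves into a small domain function with typed input and integer cents, and the handler only translates between the form and that function, so the rule can be unit tested without a UI.

```python
from dataclasses import dataclass
from typing import Optional


# Before: pricing rules buried inside a UI event handler.
def on_checkout_click_before(form: dict) -> str:
    total = form["base_price"]
    if form.get("coupon") == "LAUNCH20":
        total = total * 0.8
    if form.get("tier") == "pro":
        total += 10
    return f"Pay ${total:.2f}"


# After: the rule lives in a small domain function with typed inputs...
@dataclass(frozen=True)
class Order:
    base_price_cents: int
    tier: str = "basic"
    coupon: Optional[str] = None


TIER_SURCHARGE_CENTS = {"basic": 0, "pro": 1000}
COUPON_PERCENT_OFF = {"LAUNCH20": 20}


def order_total_cents(order: Order) -> int:
    # Integer cents avoid float rounding; an unknown tier raises KeyError
    # instead of silently producing a wrong price.
    total = order.base_price_cents
    total -= total * COUPON_PERCENT_OFF.get(order.coupon or "", 0) // 100
    return total + TIER_SURCHARGE_CENTS[order.tier]


# ...and the handler only translates between the form and the domain.
def on_checkout_click(form: dict) -> str:
    order = Order(form["base_price_cents"], form.get("tier", "basic"),
                  form.get("coupon"))
    return f"Pay ${order_total_cents(order) / 100:.2f}"
```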

How to Release Safely

Feature flags are practical here. Instead of releasing risky changes to everyone, you deploy them to a targeted user group. If things hold up, you widen rollout. This prevents mass outages and builds confidence.
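A hosted feature-flag service would normally handle this, but the core idea fits in a few lines: hash the user ID into a stable bucket and compare it with the rollout percentage. This sketch uses hypothetical names (`in_rollout`, the `"new-checkout"` flag).

```python
import hashlib


def in_rollout(user_id: str, flag: str, percent: int) -> bool:
    """Return True if this user falls inside the flag's rollout percentage.

    Hashing flag + user_id gives each user a stable bucket from 0 to 99,
    so the same user always sees the same variant, and raising `percent`
    only adds users (nobody who already had the feature loses it).
    """
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) % 100
    return bucket < percent


def render_checkout(user_id: str) -> str:
    # Hypothetical flag; start small and widen while error rates stay flat.
    if in_rollout(user_id, "new-checkout", percent=5):
        return "new checkout"
    return "old checkout"
```

Including the flag name in the hash means each flag gets an independent sample of users, so the same 5% are not the guinea pigs for every experiment.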

Step 5: Triage AI-Generated Code

If your MVP was written largely with tools like Copilot or Claude, approach it as a review exercise. Treat AI-generated areas the way you would treat externally supplied code of unknown trustworthiness.

What to Look For

- Exception handling: Are errors caught or ignored?

- Input safety: What happens when null, undefined, or empty values appear?

- Performance overhead: Are loops inefficient or prone to exceeding limits?

- Quota and rate checks: Does the code risk hitting third-party limits?
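The first two questions often point at the same kind of function. The sketch below contrasts a hypothetical generated parser, which handles only the happy path and hides every failure behind a bare `except`, with a reviewed version that defines what happens for missing, malformed, or out-of-range input and surfaces errors instead of returning a misleading default.

```python
import logging

logger = logging.getLogger("orders")


# Hypothetical generated version: happy path only, and a bare except
# that turns every failure (None, "abc", "2.5") into a silent 0.
def parse_quantity_generated(raw):
    try:
        return int(raw)
    except:
        return 0


# Reviewed version: explicit contract for None/empty input, bounds
# checking, and errors surfaced instead of silently returning 0.
def parse_quantity(raw: object, max_qty: int = 100) -> int:
    if raw is None or (isinstance(raw, str) and not raw.strip()):
        raise ValueError("quantity is required")
    if isinstance(raw, bool):
        # bool is a subclass of int, so True would otherwise become 1.
        raise ValueError("quantity must be a number, not a boolean")
    try:
        qty = int(str(raw).strip())
    except ValueError:
        logger.warning("rejected quantity %r", raw)
        raise ValueError(f"quantity must be a whole number, got {raw!r}") from None
    if not 1 <= qty <= max_qty:
        raise ValueError(f"quantity must be between 1 and {max_qty}")
    return qty
```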

How to Audit with AI

Instead of generating new code, make AI explain what you already have. Useful prompts include:

- “Explain what this function does, and where it might break.”

- “What happens if this block receives invalid data?”

- “Suggest a safer, more standard way to write this logic.”

By moving AI into the role of auditor rather than author, you keep mysterious behavior from hiding in production.

Step 6: Rebuild the Worst Offenders

Some parts of the codebase will simply resist repair. These sections will crash, fail, or break repeatedly, no matter how many patches you apply.

When Rebuild is the Right Choice

- If a module is fragile and creates the same bugs again and again

- If it has been built using obsolete or unsupported frameworks

- If its design actively prevents meaningful testing

- If new developers cannot understand it even after a long explanation

Leaving such sections in place is risky. They will consume growing time and effort with little payoff.

How to Rebuild Safely

Do not replace everything at once. Use a targeted rebuild. Develop the new module in parallel. Keep the old one running. When the new one is ready, place it behind a feature toggle and gradually route real users to it.
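While the new module runs in parallel, one common technique (often called a shadow or parallel run) is to call both versions, keep serving the old result, and log any disagreement. This is a minimal sketch with hypothetical names; it only suits side-effect-free code, since you would not want to charge a card twice.

```python
import logging
from typing import Callable, TypeVar

T = TypeVar("T")
logger = logging.getLogger("shadow")


def shadow_call(old: Callable[..., T], new: Callable[..., T], *args, **kwargs) -> T:
    """Serve the old module's result while exercising the new one in parallel.

    Mismatches and crashes in the new code are logged for review but never
    reach the user.
    """
    result = old(*args, **kwargs)
    name = getattr(new, "__name__", repr(new))
    try:
        candidate = new(*args, **kwargs)
        if candidate != result:
            logger.warning("shadow mismatch in %s args=%r old=%r new=%r",
                           name, args, result, candidate)
    except Exception:
        logger.exception("shadow crash in %s", name)
    return result


def legacy_tax_cents(amount_cents: int) -> int:
    return round(amount_cents * 0.2)   # float math from the original module


def rebuilt_tax_cents(amount_cents: int) -> int:
    return amount_cents * 20 // 100    # integer math in the rebuilt module
```

Here the two tax functions agree on most amounts but round differently on some, which is exactly the kind of discrepancy a shadow run surfaces before any user is routed to the new code.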

Step 7: Establish a Bug-Fixing Workflow

At the prototype stage, bug fixing is informal. In production, that approach fails. A structured workflow ensures you are not repeating the same fire drills endlessly.

Formalize the Process

- Schedule bug-fix sprints or “technical debt days” to specifically address recurring issues

- Hold weekly triage sessions where the team reviews new and ongoing bugs

- Require clear version control, with feature branches and pull request templates

- Use reviews to check both the clarity of code and the presence of basic test coverage

Promote Culture Over Quick Patches

Encourage developers to log root causes instead of just patching symptoms. Document lessons learned from each incident. This simple discipline builds institutional knowledge.

Pro Tips to Fix Bugs in AI-Assisted / Vibe-Coded Systems

The following tips will help teams handle the nuances of AI-generated or rapidly prototyped codebases, making bug fixing in AI-generated code more efficient and less error-prone.

Use Git Blame to Trace AI-Created Bugs

One effective practice is to use Git blame to trace which parts of the codebase came from AI assistants such as Copilot or Claude. Git blame only records which commit last changed each line, so this works when AI-assisted commits are labeled, for example with a consistent marker in the commit message. By pinpointing these sections, your team can focus testing and review where the risk is highest and catch AI coding assistant bugs earlier.
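Here is a minimal sketch of that idea. It assumes your team adopts a convention of putting a marker in AI-assisted commit messages (for example a `Co-authored-by:` trailer naming the assistant); the marker text and function names are hypothetical. It finds matching commits with `git log --grep`, then parses `git blame --line-porcelain` to list the lines those commits last touched.

```python
import re
import subprocess

# Header line in --line-porcelain output: "<sha> <orig_line> <final_line> [count]".
SHA_LINE = re.compile(r"^([0-9a-f]{40}|[0-9a-f]{64}) \d+ (\d+)")


def ai_commit_shas(marker: str, repo: str = ".") -> set[str]:
    """Commits whose message matches `marker` (case-insensitive regex)."""
    out = subprocess.run(
        ["git", "-C", repo, "log", "--all", "-i", f"--grep={marker}", "--format=%H"],
        capture_output=True, text=True, check=True,
    ).stdout
    return set(out.split())


def flag_ai_lines(porcelain: str, ai_shas: set[str]) -> list[tuple[int, str]]:
    """Return (line_no, text) for each line last changed by one of `ai_shas`."""
    flagged: list[tuple[int, str]] = []
    sha, line_no = "", 0
    for line in porcelain.splitlines():
        header = SHA_LINE.match(line)
        if header:
            sha, line_no = header.group(1), int(header.group(2))
        elif line.startswith("\t"):  # the source line itself follows a tab
            if sha in ai_shas:
                flagged.append((line_no, line[1:]))
    return flagged


def report(path: str, marker: str, repo: str = ".") -> None:
    # Example: report("src/billing.py", "Co-authored-by: <assistant>")
    blame = subprocess.run(
        ["git", "-C", repo, "blame", "--line-porcelain", path],
        capture_output=True, text=True, check=True,
    ).stdout
    for line_no, text in flag_ai_lines(blame, ai_commit_shas(marker, repo)):
        print(f"{path}:{line_no}: {text}")
```

Because blame shows only the last commit per line, a later human reformat hides the AI origin; treat the output as a starting list for review, not a complete inventory.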

Ask AI for Safer Alternatives

Instead of treating AI tools just as code generators, use them as reviewers and advisors. When you encounter complex or unclear code, ask the AI for safer or more idiomatic ways to write that logic. For example, you can prompt AI with questions like, “What is a more reliable way to handle this input validation?” or “How can this loop be optimized to prevent performance issues?” This helps in debugging AI-generated code effectively.

Make Debugging Reproducible

A common challenge with vibe-coded apps is inconsistent bug reproduction. Teams often struggle because they lack the exact test data, environment conditions, or user scenarios that caused the issue. To tackle this, build reproducible test cases for each bug. This step is key to automated code debugging.
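Reproducibility usually means removing hidden inputs such as the current time, random values, or the server's time zone. This sketch uses a hypothetical trial-expiry bug: the function receives `now` as a parameter instead of reading the clock, so the regression test can replay the exact moment from the report.

```python
import unittest
from datetime import datetime, timedelta, timezone


def trial_active(signup: datetime, now: datetime, trial_days: int = 14) -> bool:
    # `now` is passed in instead of calling datetime.now() inside, so a
    # test can replay the exact moment a user hit the bug.
    return now < signup + timedelta(days=trial_days)


class TrialExpiryRegression(unittest.TestCase):
    # Hypothetical report: "Trial ended a day early for a user in UTC+10."
    # The test pins the exact data from the report, time zones included.
    SIGNUP = datetime(2025, 3, 1, 23, 30, tzinfo=timezone.utc)

    def test_still_active_one_minute_before_expiry(self):
        now = self.SIGNUP + timedelta(days=14, minutes=-1)
        self.assertTrue(trial_active(self.SIGNUP, now))

    def test_expired_at_exact_boundary(self):
        now = self.SIGNUP + timedelta(days=14)
        self.assertFalse(trial_active(self.SIGNUP, now))

    def test_same_instant_in_another_time_zone(self):
        # 09:29 on Mar 16 in UTC+10 is 23:29 on Mar 15 in UTC: still active.
        tz = timezone(timedelta(hours=10))
        now = datetime(2025, 3, 16, 9, 29, tzinfo=tz)
        self.assertTrue(trial_active(self.SIGNUP, now))
```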

Document Known Issues in Code Comments

Fragile or shortcut implementations often keep causing problems because nobody remembers why they were written that way. Clear comments in the codebase that record assumptions, the reasons for workarounds, and known limitations help future developers avoid repeating mistakes.
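A short, hypothetical example of the comment style: it states the assumption, the known consequence, and what not to change, so the next developer does not "fix" it blindly.

```python
# KNOWN LIMITATION (hypothetical example):
# Shipping is a flat rate because carrier rates were not integrated in the MVP.
# Assumption: all orders ship domestically, so international orders are
# undercharged. Check the tracker entry for international shipping before
# changing this value.
FLAT_SHIPPING_CENTS = 599


def shipping_cents(country_code: str) -> int:
    # WORKAROUND: country_code is accepted but ignored until carrier rates
    # exist. Keep the parameter; the checkout form already sends it.
    return FLAT_SHIPPING_CENTS
```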

Don’t Refactor Without Tests

Refactoring code without tests is dangerous, especially in vibe-coded systems where the original logic might be unclear or incomplete. Even minimal test coverage around critical flows can prevent unintended regressions during cleanup work and support stabilizing AI-generated applications.
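When the original logic is unclear, one way to get minimal coverage quickly is a characterization (or "golden master") test: record what the current code does on many inputs and require the refactored version to match exactly. Both functions below are hypothetical; the random inputs use a fixed seed so the test is reproducible.

```python
import random
from itertools import groupby


def legacy_slugify(title):
    # Stand-in for unclear AI-generated code you want to refactor.
    s = ""
    for ch in title.lower():
        if ch.isalnum():
            s += ch
        elif s and s[-1] != "-":
            s += "-"
    return s.strip("-")


def slugify(title: str) -> str:
    # Refactored: keep runs of alphanumeric characters, join them with "-".
    words = ("".join(g) for is_word, g in groupby(title.lower(), key=str.isalnum) if is_word)
    return "-".join(words)


def test_refactor_matches_legacy() -> None:
    rng = random.Random(42)  # fixed seed keeps the comparison reproducible
    alphabet = "abcXYZ019 -_.!\u00e9\U0001F600"
    for _ in range(2000):
        title = "".join(rng.choice(alphabet) for _ in range(rng.randint(0, 20)))
        assert slugify(title) == legacy_slugify(title), repr(title)
```

The characterization test says nothing about whether the old behavior was right; it only guarantees the refactor did not change it. Behavior fixes come afterwards, as separate, deliberate changes.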

Common Mistakes to Stay Away From

Many teams rush fixes without proper planning, which often leads to frustration and wasted effort. Following best practices matters, but it is just as important to avoid common pitfalls.

- One frequent mistake is attempting to rewrite the entire AI codebase at once. This approach creates huge risks and often introduces more bugs. Instead, fixes should be gradual and focused on critical areas.

- Another error is neglecting documentation. Vibe-coded projects often start without clear notes, and skipping documentation during cleanup only compounds knowledge gaps. Clear, concise comments and records save time in the long run.

- Blaming AI-generated code exclusively is also counterproductive. While AI can introduce fragile logic, human oversight, testing, and architecture decisions have a greater effect on stability.

By steering clear of these mistakes, teams reduce technical debt and build a stronger foundation for debugging code written by AI.

When to Consider Rebuilding Entirely

Sometimes incremental fixes are not enough. A full rebuild may become necessary to restore stability and allow future growth.

Signs You Need a Full Rewrite

- The technology stack is outdated or no longer supported.

- The codebase is so tangled that testing or making changes is nearly impossible.

- Core user flows are flawed at a fundamental level, causing repeated failures.

- Performance issues persist even after multiple optimizations.

- New developers cannot be onboarded without extensive training or explanations.

How to Approach a Rebuild

Rebuilding does not have to mean starting over all at once. Use a phased strategy such as the strangler fig pattern. Create new modules one at a time, running alongside the old system. Gradually switch users to the new code. This reduces risk and avoids long downtime while stabilizing AI-generated applications.
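The heart of the strangler fig pattern is a routing layer in front of both systems. This is a minimal sketch with hypothetical handlers and paths: migrated prefixes go to new modules, everything else still reaches the legacy code, so migrating a feature means adding a route and rolling back means removing it.

```python
from typing import Callable

Handler = Callable[[dict], dict]


def legacy_app(request: dict) -> dict:
    return {"served_by": "legacy", "path": request["path"]}


def new_billing(request: dict) -> dict:
    return {"served_by": "new-billing", "path": request["path"]}


class StranglerRouter:
    """Facade in front of old and new code (hypothetical sketch)."""

    def __init__(self, legacy: Handler) -> None:
        self.legacy = legacy
        self.routes: dict[str, Handler] = {}

    def migrate(self, prefix: str, handler: Handler) -> None:
        self.routes[prefix] = handler

    def rollback(self, prefix: str) -> None:
        self.routes.pop(prefix, None)

    def handle(self, request: dict) -> dict:
        path = request["path"]
        # Longest matching prefix wins, so "/billing/invoices" can move
        # separately from "/billing". "/billingx" does not match "/billing".
        for prefix in sorted(self.routes, key=len, reverse=True):
            if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
                return self.routes[prefix](request)
        return self.legacy(request)
```

In a web app the same role is usually played by a reverse proxy or the framework's router; the point is that the switch happens in one place.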

Real-World Example

ProductHuntX was a SaaS prototype built quickly with tools like Copilot, Firebase, and Vercel. It impressed early users and investors during demo week. However, after it scaled up, several bugs started causing problems.

Some of the issues they faced included users being unable to log in if their names contained emojis. The pricing logic failed whenever new pricing tiers were added. Additionally, button click logic relied heavily on brittle DOM IDs, which caused it to break frequently.

To address these, the team took several steps. They wrapped their login and billing flows with Cypress tests to catch errors early. They audited AI-generated code thoroughly to identify risky assumptions. The pricing engine was rebuilt as a standalone, cleaner module. They also moved input validation from the frontend to the backend for greater reliability.
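The source does not show ProductHuntX's validation code, so the following is a hypothetical reconstruction of the emoji login bug and of a backend check that avoids it. An ASCII-only pattern rejects names like "Zoë 🚀"; the backend version normalizes Unicode, trims, limits length, and rejects only control characters and lone surrogates.

```python
import re
import unicodedata

# The kind of check that locks out "Zoë 🚀": ASCII letters and spaces only.
ASCII_NAME = re.compile(r"^[A-Za-z ]+$")


def validate_display_name(raw: str, max_len: int = 50) -> str:
    """Backend check that accepts printable Unicode, including emoji."""
    # NFC makes "e" + combining diaeresis and the precomposed "ë" compare equal.
    name = unicodedata.normalize("NFC", raw).strip()
    if not name:
        raise ValueError("name is required")
    if len(name) > max_len:  # counts code points, not bytes or pixels
        raise ValueError(f"name must be at most {max_len} characters")
    for ch in name:
        # Reject control characters (Cc: newlines, tabs, NUL) and lone
        # surrogates (Cs). Letters, marks, symbols (most emoji are So) and
        # joiners used in emoji sequences are allowed.
        if unicodedata.category(ch) in {"Cc", "Cs"}:
            raise ValueError("name contains invalid characters")
    return name
```

Frontend validation can stay for quick feedback, but the backend check is the one that decides, because clients can be bypassed.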

Outcome:

Bug reports decreased by around 40%. Feature development doubled in pace. Onboarding new engineers became smoother because the AI codebase was better organized and tested. For startups looking to scale quickly, check out our AI product roadmap for startups to plan stable growth.

ProductHuntX, a SaaS prototype built using Copilot + Firebase + Vercel, started breaking after demo week.

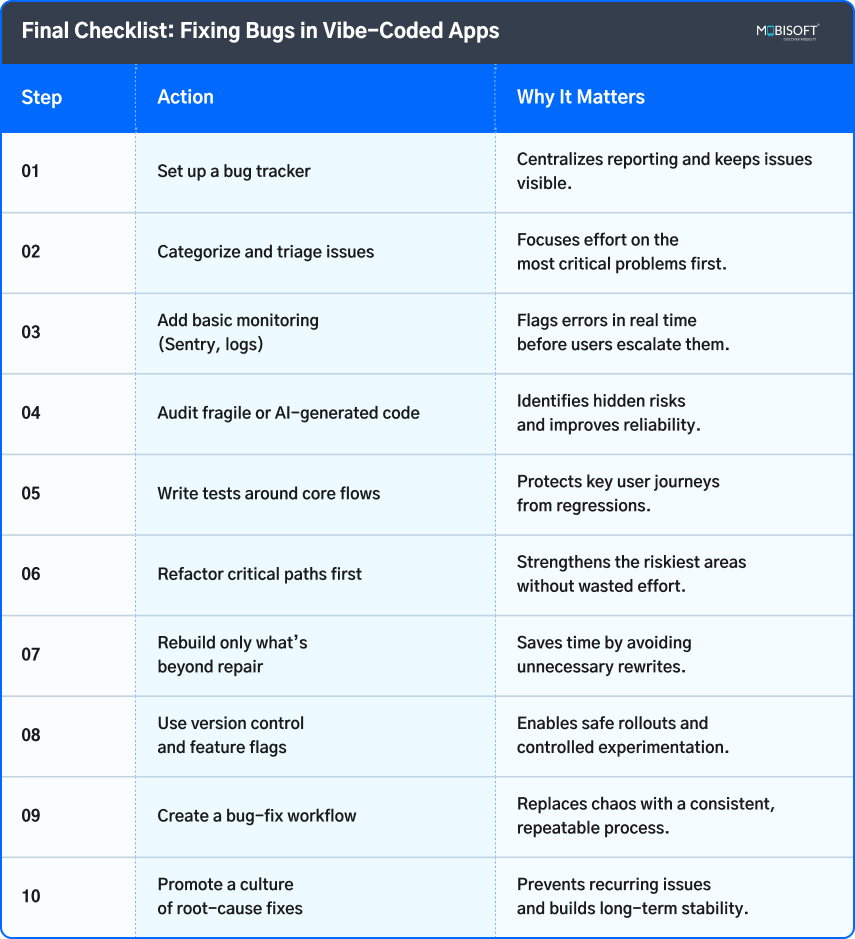

Final Checklist: Fixing Bugs in Vibe-Coded Apps

Teams can also use Generative AI optimization to fine-tune AI-generated code for performance and reliability.

Turning Chaos into Confidence: Your Roadmap to Stability

Building a vibe-coded app fast is a great way to get your idea out to market quickly. But moving from prototype to a reliable product requires careful cleanup and discipline. The speed that helped you launch can become a source of bugs if left unmanaged.

Gradually rebuilding the most fragile parts restores stability without putting live users at risk. Create a structured workflow for fixing bugs in AI-generated code, and foster a team culture that values root-cause analysis. Treat AI-generated code as something to audit and understand, not something to accept blindly.

By implementing these practices and avoiding the common mistakes, teams can stabilize their vibe-coded apps. This approach supports faster feature delivery and easier onboarding of new engineers, setting your product up for success beyond the demo phase. Similarly, leveraging AI agent optimization ensures that AI-driven modules like chatbots or automated workflows run efficiently and predictably.

Key takeaways:

- Speed comes at a cost: Vibe-coded apps use no-code platforms and AI-based environments to build quickly. That speed usually comes from skipping architecture and testing, which leaves a weak codebase that breaks as the app scales.

- Architecture matters: After scaling, even slight code changes can ripple across the app. This usually stems from poor structure and business logic mixed into UI code.

- AI is not infallible: AI-generated snippets often pass normal test cases but fail on edge cases and unexpected input.

- Testing is the safety net: Without tests around critical flows, errors in important pages like payment and signup go unnoticed until users hit them.

- Black-box logic is risky: Copy-paste code and opaque AI/low-code flows weaken developer understanding, making debugging and scaling harder.

- Stabilization requires structure: Monitoring tools, bug trackers, and clear prioritization turn firefighting into predictable workflows.

- Targeted fixes beat rewrites: Refactor high-risk areas first, wrap core flows with tests, and only rebuild modules that resist repair.

- Use AI wisely: AI is more dependable as a reviewer than as an unsupervised author. Treat it like an auditor: ask questions, weigh its suggestions, and improve the existing logic.

- Avoid common traps: Don’t refactor without tests, skip documentation, or attempt a full rewrite at once. These moves create more chaos.

- Long-term stability is cultural: Create well-defined workflows, practice analyzing the root cause, and document fragile areas for efficient and seamless scaling.

August 29, 2025
