The Apple WWDC event continues to show developers how the new integrations can help them create an amazing experience. On day 3, much of the focus was on creating App Shortcuts experiences, designing apps, the camera capture features in iOS, and building layouts and views with SwiftUI. These features give app developers a wide range of opportunities to build applications for mobile and other Apple products with less effort.

Let’s take a look at the recap from day 3 of the Apple WWDC Event 2022.

Apple WWDC Event 2022 Day 3 Recap

As we mentioned earlier, day 3 was all about designing and creating app experiences with different toolkits. The day featured several explanatory videos on how Apple developers can improve their app development processes.

Here’s a look at the sessions from day 3 of the worldwide developers’ conference.

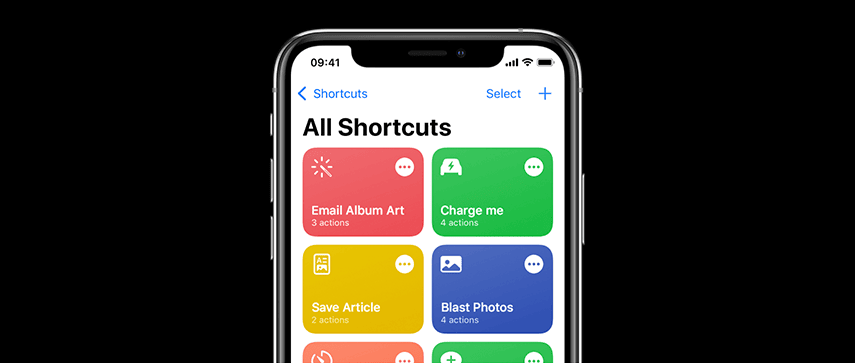

App Shortcuts Experience with Actions

As we continue to explore the new Apple update features, the App Shortcuts experience helps users by suggesting shortcuts. Users can combine these suggestions with Siri, Spotlight, or other app actions to build the shortcuts they need. When a user performs specific actions in an application, developers can create or donate shortcuts that speed up those actions. Siri uses donated interactions to suggest shortcuts in places like Spotlight search, the Siri watch face, and the Lock Screen. An app may also offer actions that users have not tried yet, and suggesting these as shortcuts can spark their interest in the application. Developers can offer shortcut suggestions for actions that are specific to a user. These suggestions can change over time as features are added to or removed from an app and as the way users interact with it changes.
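As a rough illustration of exposing an app action to Siri and Spotlight, the sketch below uses the App Intents framework introduced alongside iOS 16. It is a minimal sketch, not the approach shown in any particular session: the intent name `StartWorkoutIntent`, its title, and the spoken phrase are hypothetical placeholders.

```swift
import AppIntents

// Hypothetical intent: the name, title, and behavior are illustrative only.
@available(iOS 16.0, *)
struct StartWorkoutIntent: AppIntent {
    static let title: LocalizedStringResource = "Start Workout"

    func perform() async throws -> some IntentResult {
        // App-specific work (for example, starting a session) would go here.
        return .result()
    }
}

// Declares the shortcuts the app makes available without any user setup.
@available(iOS 16.0, *)
struct SampleAppShortcuts: AppShortcutsProvider {
    static var appShortcuts: [AppShortcut] {
        AppShortcut(
            intent: StartWorkoutIntent(),
            // Each phrase must include the application name token.
            phrases: ["Start a workout in \(.applicationName)"]
        )
    }
}
```

With a provider like this in the app target, the system can surface the action in the Shortcuts app, Spotlight, and Siri once the app is installed.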

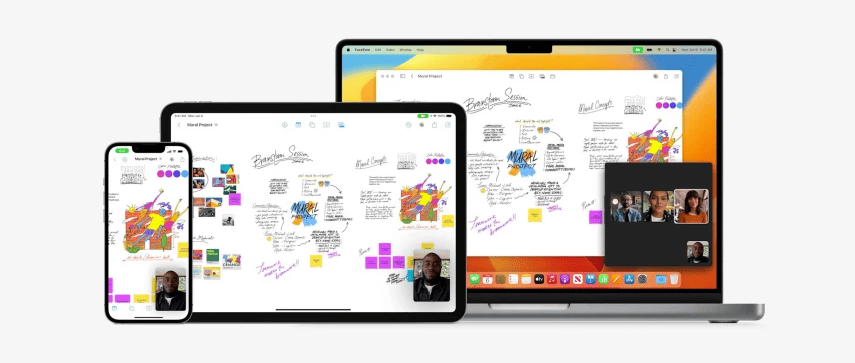

Desktop-Class iPad Applications

With desktop-class iPad applications, users can be productive on an iPad with a Magic Keyboard and an external display. Developers can take advantage of iPadOS 16 features to build applications that increase efficiency, let users customize existing workflows, and complete tasks quickly. After creating a desktop-class iPad application, developers can bring it to the Mac with little effort by using Mac Catalyst. Building a desktop-class iPad application ensures:

- Enhanced experience with multitasking: iPad apps are known for boosting productivity by allowing quick and complex workflows to run seamlessly across multiple applications. With iPadOS 16, people can use Stage Manager to switch between multiple applications and to dynamically resize and organize app windows on the iPad and on an external display.

- Supporting document-editing workflows: Documents are at the core of many iPad applications, and iPadOS 16 enhances the document-editing experience. Navigation-based applications can adopt the editor navigation style, which brings important document-editing features to the forefront.

- Simplification of the text-editing procedures: When using an iPad with an external keyboard, users expect complete support for keyboard input, including familiar keyboard shortcuts and interactions. Developers can provide a seamless text-editing experience by leveraging the standard system capabilities and tailoring the experience to both keyboard and touch input.

- Supporting the inline searches combined with suggestions: iPadOS 16 introduces an inline search UI that leaves more space for the surrounding content. With the new operating system, developers can improve content discovery through the search capabilities on the iPad and offer search suggestions that help users navigate more quickly.
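The document-editing and inline search points above can be sketched in UIKit roughly as follows. This is a minimal sketch under assumptions: `DocumentViewController` is a hypothetical class, and it is assumed to be embedded in a navigation controller.

```swift
import UIKit

// Hypothetical view controller for a document-editing screen.
final class DocumentViewController: UIViewController {
    override func viewDidLoad() {
        super.viewDidLoad()

        if #available(iOS 16.0, *) {
            // The editor style is intended for document-editing interfaces.
            navigationItem.style = .editor

            // Ask the system to place the search bar inline in the navigation bar.
            navigationItem.searchController = UISearchController(searchResultsController: nil)
            navigationItem.preferredSearchBarPlacement = .inline
        }
    }
}
```

The placement is a preference, so the system may still choose a different search bar position depending on the available space.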

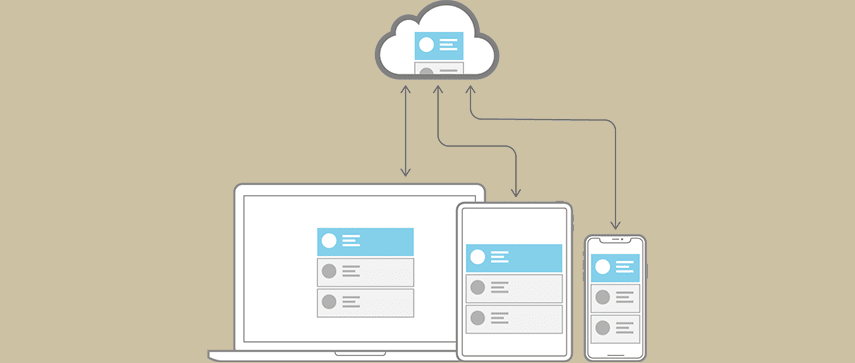

Core Data Optimization

Core Data can save an app's data permanently for offline use, cache temporary data, and add undo functionality to an app on a single device. To sync data across multiple devices in a single iCloud account, Core Data can automatically mirror the app's schema to a CloudKit container. With Core Data's Data Model editor, developers can define data types and relationships and generate the respective class definitions. Core Data can then manage object instances at runtime to provide features like:

- Persistence: Core Data abstracts the details of mapping objects to a store, making it simple to save data from Swift and Objective-C without administering a database directly.

- Redo and Undo of Batched or Individual Changes: Core Data's undo manager tracks changes and can roll them back individually, in batches, or all together. This makes it easy to support undo and redo in apps.

- Background Data Tasks: Developers can perform potentially UI-blocking data tasks in the background, then cache or store the results to reduce server roundtrips.

- View Synchronization: Core Data helps keep views and data in sync by offering data sources for both table and collection views.

- Migration and Versioning: Core Data includes mechanisms for versioning the data model and migrating user data as an app is upgraded.
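Several of the features listed above can be sketched in a few lines. The following is a minimal sketch, assuming a data model file named "Model" (a hypothetical name) and a project that already has the iCloud and CloudKit capabilities configured. `PersistenceController` is an illustrative wrapper, not an Apple type.

```swift
import CoreData

// Illustrative wrapper around a CloudKit-mirrored Core Data stack.
final class PersistenceController {
    let container: NSPersistentCloudKitContainer

    init() {
        // "Model" is a hypothetical data model name.
        container = NSPersistentCloudKitContainer(name: "Model")
        container.loadPersistentStores { _, error in
            if let error = error {
                print("Failed to load persistent store: \(error)")
            }
        }

        // Track changes on the main context so they can be undone and redone.
        container.viewContext.undoManager = UndoManager()

        // Keep the main context in sync with changes saved in the background.
        container.viewContext.automaticallyMergesChangesFromParent = true
    }

    // Runs potentially UI-blocking work on a private background context.
    func importInBackground(_ work: @escaping (NSManagedObjectContext) -> Void) {
        container.performBackgroundTask { context in
            work(context)
            do {
                if context.hasChanges {
                    try context.save()
                }
            } catch {
                print("Background save failed: \(error)")
            }
        }
    }
}
```

A caller would create managed objects inside the closure passed to `importInBackground`, for example after parsing a server response.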

Core ML Usage Optimization

Apple encourages iOS developers to integrate machine learning into their applications. A Core ML model is the result of applying a machine learning algorithm to a set of training data. The model is used to make predictions based on new input data in the application. With Core ML models, iOS app developers can accomplish a wide array of tasks that would be difficult or impractical to write in code. Once the model is created, it can be integrated into the application and deployed on user devices. The app then uses Core ML APIs to make predictions and to train or fine-tune the model.
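Making a prediction with an integrated model can look roughly like the sketch below. It assumes a compiled model (`.mlmodelc`) is available at a known URL and that the input feature names match the model. The `predict` function and its parameters are hypothetical helpers for illustration.

```swift
import CoreML

// Loads a compiled Core ML model and runs a single prediction.
// `modelURL` is assumed to point at a compiled .mlmodelc bundle, and the
// keys of `inputs` are assumed to match the model's input feature names.
func predict(modelURL: URL, inputs: [String: Any]) throws -> MLFeatureProvider {
    let model = try MLModel(contentsOf: modelURL, configuration: MLModelConfiguration())
    let provider = try MLDictionaryFeatureProvider(dictionary: inputs)
    return try model.prediction(from: provider)
}
```

In practice, Xcode also generates a typed Swift class for each model added to a project, which avoids the string-keyed dictionary used here.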

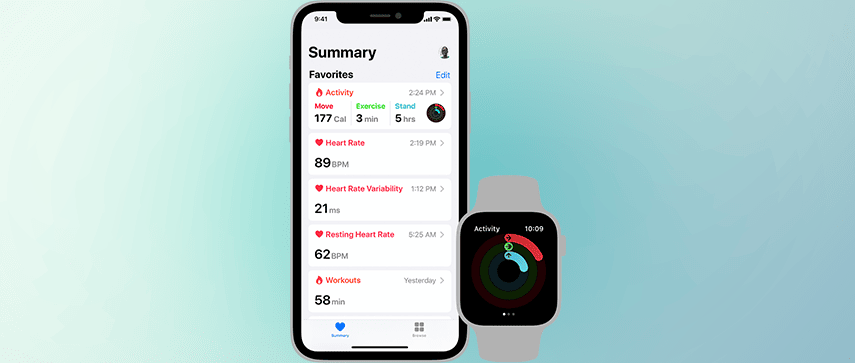

HealthKit

HealthKit offers a central repository for health and fitness data on Apple Watch and iPhone. With permission from users, applications can communicate with HealthKit to access and share this data. Creating a complete, personalized health and fitness experience involves a variety of tasks. These tasks include:

- Storing and collecting data for health and fitness

- Visualization and analysis of data

- Enabling social interaction via the app

HealthKit offers a collaborative approach to building the user experience. An application doesn’t need to provide every feature. Developers can focus on the subset of tasks that interests them most. HealthKit is also designed to manage and merge data from various sources. Users can view and manage their health data in the Health app, where they can add or delete data and change app permissions. Hence, a health app needs to handle these changes gracefully, even when they happen outside the app itself.
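Because access depends on user permission, an app typically starts by requesting authorization. The sketch below is a minimal example that asks to read and write step counts. The helper name `requestStepCountAccess` is hypothetical, and the app is assumed to have the HealthKit capability and the required usage descriptions in its Info.plist.

```swift
import HealthKit

// Requests permission to read and write step counts.
// The completion value reports whether the request was processed,
// not whether the user actually granted access.
func requestStepCountAccess(using store: HKHealthStore,
                            completion: @escaping (Bool) -> Void) {
    guard HKHealthStore.isHealthDataAvailable(),
          let stepType = HKObjectType.quantityType(forIdentifier: .stepCount) else {
        completion(false)
        return
    }

    store.requestAuthorization(toShare: [stepType], read: [stepType]) { success, error in
        if let error = error {
            print("HealthKit authorization failed: \(error)")
        }
        completion(success)
    }
}
```

Since users can change permissions later in the Health app, an app should be prepared for queries to return no data at any time.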

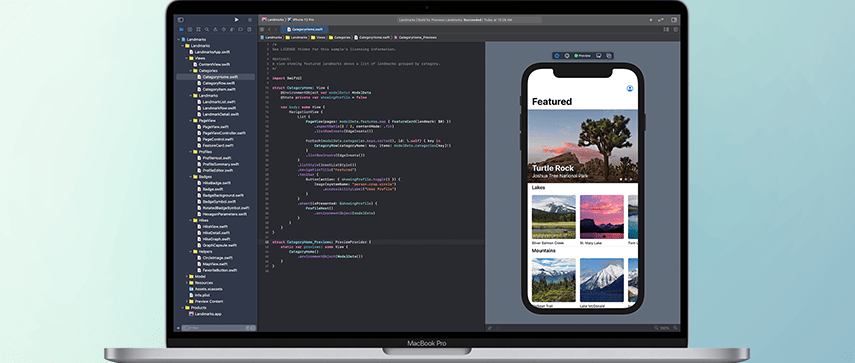

Custom Layouts and Views for Applications with SwiftUI

SwiftUI layout tools help developers arrange the app interface. This WWDC session demonstrated the various tools SwiftUI provides using a sample application. With the custom layouts and views that SwiftUI enables for application development, a few things are worth keeping in mind:

- Developers can arrange the views in two dimensions with a grid layout

- They can create a custom equal-width layout

- Select the view that best fits the space available in the layout

- Improve the efficiency of layout with a cache

- Create a custom radial layout with assistance from an offset

- Animate transitions in between layouts
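Two of the points above, the grid layout and animated transitions between layouts, can be sketched as follows. This is a minimal sketch rather than the session's sample app: `ScoreboardView` and its placeholder text are hypothetical.

```swift
import SwiftUI

@available(iOS 16.0, macOS 13.0, *)
struct ScoreboardView: View {
    @State private var isVertical = false

    var body: some View {
        // AnyLayout lets the view switch layouts while keeping view identity,
        // so the change can be animated.
        let layout = isVertical ? AnyLayout(VStackLayout()) : AnyLayout(HStackLayout())

        VStack(spacing: 16) {
            // Grid aligns cells across rows and columns.
            Grid(alignment: .leading) {
                GridRow {
                    Text("Cat")
                    Text("12 votes")
                }
                GridRow {
                    Text("Dog")
                    Text("9 votes")
                }
            }

            layout {
                Text("One")
                Text("Two")
                Text("Three")
            }

            Button("Toggle layout") {
                withAnimation {
                    isVertical.toggle()
                }
            }
        }
    }
}
```

Custom equal-width or radial arrangements would go further by conforming a type to the `Layout` protocol, which is not shown here.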

Camera Capture Advancements in iOS Camera

The Apple WWDC event focused heavily on camera capture advancements. Camera capture has usually been limited to applications running in full-screen mode. When an app enters a multitasking mode such as Split View, the camera system is automatically disabled. With the iOS 16 update, the camera can be used even while multitasking with other applications. This is enabled by setting the `isMultitaskingCameraAccessEnabled` property to `true` on supported devices. With a LiDAR scanner, users can now create video and photo effects and perform accurate depth measurements.
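Enabling the property described above can be sketched as follows. This is a minimal sketch assuming an iOS target and an already created `AVCaptureSession`. The helper name `enableMultitaskingCamera` is hypothetical.

```swift
import AVFoundation

#if os(iOS)
// Turns on camera access while multitasking, where the device supports it.
func enableMultitaskingCamera(on session: AVCaptureSession) {
    if #available(iOS 16.0, *) {
        // Not every device supports camera use while multitasking.
        guard session.isMultitaskingCameraAccessSupported else { return }

        session.beginConfiguration()
        session.isMultitaskingCameraAccessEnabled = true
        session.commitConfiguration()
    }
}
#endif
```

Checking `isMultitaskingCameraAccessSupported` first keeps the call safe on devices where the feature is unavailable.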

Wrapping Up

By the end of day 3, a wealth of information had been shared on implementing the Apple software updates. The Developers Conference is proving to be an excellent source of input that can help businesses upgrade their app development services. By enhancing the quality of those services, companies can deliver a seamless user experience. Let’s stay tuned for day 4!

Check out the announcements related to Apple software and hardware here:

Apple WWDC Event 2022 Keynote Highlights Day 1

Apple WWDC Event 2022 Keynote Highlights Day 2

Author's Bio:

Pritam Barhate, with 14+ years of experience in technology, heads Technology Innovation at Mobisoft Infotech. He has rich experience in design and development and has been a consultant for a variety of industries and startups. At Mobisoft Infotech, he primarily focuses on technology resources and develops the most advanced solutions.