Introduction to the topic

If you want to broadcast video captured by an iOS device's camera to web or mobile clients, you will find two major protocols:

- Apple’s HTTP Live Streaming (HLS): an HTTP-based media streaming protocol developed by Apple. It has its own limitations if you want to implement it across multiple platforms.

- Adobe’s RTMP: many RTMP libraries that support streaming from iOS have commercial licenses, and you may need to use the vendor's P2P infrastructure.

To avoid these constraints, you can develop your own protocol and use the iOS VideoToolbox framework, which gives you direct access to the hardware H264 encoder and decoder. H264 is a widely used format for recording, compressing and distributing video content, so encoding camera frames as H264, sending them, and decoding them on the receiving side is a practical way to implement video broadcasting.

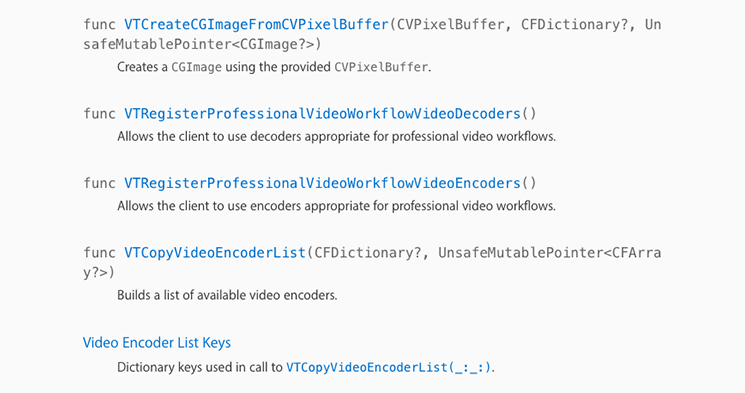

Video Toolbox Framework

The Video Toolbox framework (VideoToolbox.framework) includes direct access to hardware video encoding and decoding.

Before you start encoding and decoding H264, you need to know the two H264 elementary bitstream formats.

1. Annex B

Annex B is widely used in live streaming because of its simple format: every NALU is preceded by a start code. In this format, it’s common to repeat the SPS and PPS periodically, before every IDR frame. This creates random access points for the decoder, which gives a client the ability to join a stream that is already in progress.
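To make the layout concrete, here is a minimal sketch in plain C (which also compiles as Objective-C) that appends NAL units to an Annex B buffer. The helper name `annexb_append` and the sample bytes in the usage note are illustrative assumptions, not part of VideoToolbox; the sketch always writes the 4-byte start code.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Appends one NAL unit to an Annex B buffer: a 4-byte start code
 * followed by the NALU bytes (NAL header byte first). Returns the new
 * write position, or 0 if dst does not have enough room. */
static size_t annexb_append(uint8_t *dst, size_t cap, size_t pos,
                            const uint8_t *nalu, size_t nalu_len)
{
    static const uint8_t start_code[4] = {0x00, 0x00, 0x00, 0x01};

    if (pos > cap || cap - pos < sizeof start_code + nalu_len) {
        return 0; /* not enough room */
    }
    memcpy(dst + pos, start_code, sizeof start_code);
    memcpy(dst + pos + sizeof start_code, nalu, nalu_len);
    return pos + sizeof start_code + nalu_len;
}
```

Appending an SPS, then a PPS, then an IDR slice with three calls produces the kind of random access point described above.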

2. AVCC

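AVCC is the common method to store an H264 stream (for example in MP4 and MOV files). In this format, each NALU is preceded by its length as a big-endian integer, usually 4 bytes long, and the SPS and PPS are kept separately rather than in the stream itself (on iOS, in the CMFormatDescription). The VideoToolbox encoder outputs AVCC, which is why the encoding code below converts it to Annex B.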
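A matching sketch for AVCC, again in plain C with hypothetical helper names (`put_be32`, `get_be32`, `avcc_append`). It writes the length byte by byte, so the result is big-endian on any host, and it assumes the common 4-byte length field.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Writes a 32-bit value in big-endian (network) byte order,
 * independent of the host's endianness. */
static void put_be32(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v >> 24);
    p[1] = (uint8_t)(v >> 16);
    p[2] = (uint8_t)(v >> 8);
    p[3] = (uint8_t)v;
}

/* Reads a 32-bit big-endian value. */
static uint32_t get_be32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Appends one NAL unit in AVCC form: a 4-byte big-endian length, then
 * the NALU bytes. Returns the new write position, or 0 if dst is too
 * small. Assumes nalu_len fits in 32 bits. */
static size_t avcc_append(uint8_t *dst, size_t cap, size_t pos,
                          const uint8_t *nalu, size_t nalu_len)
{
    if (pos > cap || cap - pos < 4 + nalu_len) {
        return 0; /* not enough room */
    }
    put_be32(dst + pos, (uint32_t)nalu_len);
    memcpy(dst + pos + 4, nalu, nalu_len);
    return pos + 4 + nalu_len;
}
```

A reader walks the buffer with `get_be32`, skipping 4 + length bytes per NALU; there is no start code to search for.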

Introductory image for SPS, PPS, IDR and non-IDR frames.

Introduction / Concept

1. NALU

NALU stands for Network Abstraction Layer Unit, and each NALU can be parsed individually. In an Annex B stream, each NALU is preceded by a start code: either the 3-byte sequence 0x00 00 01 or the 4-byte sequence 0x00 00 00 01. The byte right after the start code is the NALU header, whose low 5 bits give the NALU type. NALU types are divided into VCL (video coding layer, i.e. coded picture data) and non-VCL units, as follows:

```objc
// Human-readable names for the 5-bit NALU type values (header byte & 0x1F).
// Types 24-29 are not defined by H.264 itself: they are RTP packetization
// types from RFC 6184, listed here for completeness.
NSString * const naluTypesStrings[] = {
    @"0: Unspecified (non-VCL)",
    @"1: Coded slice of a non-IDR picture (VCL)",    // P frame
    @"2: Coded slice data partition A (VCL)",
    @"3: Coded slice data partition B (VCL)",
    @"4: Coded slice data partition C (VCL)",
    @"5: Coded slice of an IDR picture (VCL)",       // I frame
    @"6: Supplemental enhancement information (SEI) (non-VCL)",
    @"7: Sequence parameter set (non-VCL)",          // SPS parameter
    @"8: Picture parameter set (non-VCL)",           // PPS parameter
    @"9: Access unit delimiter (non-VCL)",
    @"10: End of sequence (non-VCL)",
    @"11: End of stream (non-VCL)",
    @"12: Filler data (non-VCL)",
    @"13: Sequence parameter set extension (non-VCL)",
    @"14: Prefix NAL unit (non-VCL)",
    @"15: Subset sequence parameter set (non-VCL)",
    @"16: Reserved (non-VCL)",
    @"17: Reserved (non-VCL)",
    @"18: Reserved (non-VCL)",
    @"19: Coded slice of an auxiliary coded picture without partitioning (non-VCL)",
    @"20: Coded slice extension (non-VCL)",
    @"21: Coded slice extension for depth view components (non-VCL)",
    @"22: Reserved (non-VCL)",
    @"23: Reserved (non-VCL)",
    @"24: STAP-A Single-time aggregation packet (non-VCL)",
    @"25: STAP-B Single-time aggregation packet (non-VCL)",
    @"26: MTAP16 Multi-time aggregation packet (non-VCL)",
    @"27: MTAP24 Multi-time aggregation packet (non-VCL)",
    @"28: FU-A Fragmentation unit (non-VCL)",
    @"29: FU-B Fragmentation unit (non-VCL)",
    @"30: Unspecified (non-VCL)",
    @"31: Unspecified (non-VCL)",
};
```
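Whatever the container, the byte right after the start code (or length prefix) is the NAL unit header. This plain C sketch, with a hypothetical `parse_nal_header` helper, splits that byte into its three fields; the decoder later in this article uses the same `& 0x1F` mask to index `naluTypesStrings`.

```c
#include <stdint.h>

/* Fields of the one-byte H.264 NAL unit header. */
typedef struct {
    unsigned forbidden_zero_bit; /* must be 0 in a valid stream */
    unsigned nal_ref_idc;        /* 0 means not used as a reference */
    unsigned nal_unit_type;      /* index into naluTypesStrings */
} NalHeader;

static NalHeader parse_nal_header(uint8_t b)
{
    NalHeader h;
    h.forbidden_zero_bit = (b >> 7) & 0x01; /* top bit */
    h.nal_ref_idc = (b >> 5) & 0x03;        /* next two bits */
    h.nal_unit_type = b & 0x1F;             /* low five bits */
    return h;
}
```

For example, 0x67 decodes to type 7 (SPS), 0x68 to type 8 (PPS) and 0x65 to type 5 (IDR), each with nal_ref_idc 3, while 0x41 is a type 1 (non-IDR) slice with nal_ref_idc 2.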

2. SPS

The sequence parameter set (SPS) is a non-VCL NALU that contains the information required to configure the decoder, such as profile, level, resolution and frame rate.
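The first three bytes after the SPS NAL header are fixed-length fields (profile_idc, the constraint flags and level_idc), so they can be read without a bit parser. A sketch in plain C, assuming the SPS is passed without its start code or length prefix; `sps_profile_level` is a hypothetical helper name.

```c
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint8_t profile_idc;      /* e.g. 66 = Baseline, 77 = Main, 100 = High */
    uint8_t constraint_flags; /* constraint_set0..5 flags + reserved bits */
    uint8_t level_idc;        /* usually level x 10, e.g. 31 = level 3.1 */
} SpsProfileLevel;

/* sps[0] must be the NAL header byte (type 7). Returns 0 on success,
 * -1 if the buffer is too short or is not an SPS. */
static int sps_profile_level(const uint8_t *sps, size_t len,
                             SpsProfileLevel *out)
{
    if (len < 4 || (sps[0] & 0x1F) != 7) {
        return -1;
    }
    out->profile_idc = sps[1];
    out->constraint_flags = sps[2];
    out->level_idc = sps[3];
    return 0;
}
```

These are the same three bytes that appear in an `avc1.PPCCLL` codec string, e.g. `avc1.42001f` for Baseline profile, level 3.1.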

3. PPS

The picture parameter set (PPS) is also a non-VCL NALU. It contains information such as the entropy coding mode, slice groups, motion prediction and deblocking filter settings.
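Unlike the first SPS fields, the PPS begins with Exp-Golomb codes, so reading the entropy coding mode needs a small bit reader. This plain C sketch assumes the emulation-prevention bytes (0x03 inserted after 0x00 00) have already been removed from the payload; `BitReader`, `read_ue` and `pps_entropy_mode` are hypothetical names.

```c
#include <stddef.h>
#include <stdint.h>

/* Minimal MSB-first bit reader over an RBSP. */
typedef struct {
    const uint8_t *data;
    size_t len;
    size_t bit; /* index of the next bit to read */
} BitReader;

/* Returns the next bit, or -1 past the end of the buffer. */
static int read_bit(BitReader *br)
{
    if (br->bit >= br->len * 8) {
        return -1;
    }
    int b = (br->data[br->bit / 8] >> (7 - br->bit % 8)) & 1;
    br->bit++;
    return b;
}

/* Unsigned Exp-Golomb ue(v): count n leading zero bits, skip the 1,
 * then value = 2^n - 1 + next n bits. Returns -1 on bad input. */
static long read_ue(BitReader *br)
{
    int zeros = 0;
    int b;
    while ((b = read_bit(br)) == 0) {
        if (++zeros > 30) {
            return -1; /* longer than any PPS field needs */
        }
    }
    if (b < 0) {
        return -1; /* ran out of bits */
    }
    long value = 0;
    for (int i = 0; i < zeros; i++) {
        b = read_bit(br);
        if (b < 0) {
            return -1;
        }
        value = (value << 1) | b;
    }
    return (1L << zeros) - 1 + value;
}

/* pps[0] is the NAL header (type 8). Reads pic_parameter_set_id and
 * seq_parameter_set_id, then returns entropy_coding_mode_flag
 * (0 = CAVLC, 1 = CABAC), or -1 on error. */
static int pps_entropy_mode(const uint8_t *pps, size_t len,
                            long *pps_id, long *sps_id)
{
    if (len < 2 || (pps[0] & 0x1F) != 8) {
        return -1;
    }
    BitReader br = {pps + 1, len - 1, 0};
    *pps_id = read_ue(&br);
    *sps_id = read_ue(&br);
    if (*pps_id < 0 || *sps_id < 0) {
        return -1;
    }
    return read_bit(&br);
}
```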

4. IDR

An IDR (instantaneous decoding refresh) NALU is a VCL NALU containing a self-contained image slice. That is, an IDR can be decoded and displayed without referencing any NALU other than the SPS and PPS.
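Because an IDR preceded by SPS and PPS is the safe place to start decoding, a client joining a live stream has to discard everything before that point. The decoder later in this article does a simpler version of this by dropping frames while its format description is NULL. A hypothetical "join gate" sketched in plain C; the struct and function names are illustrative only.

```c
#include <stdbool.h>

/* Tracks whether a client joining a live stream has seen what it
 * needs (SPS, PPS, then an IDR) before it starts decoding. */
typedef struct {
    bool have_sps;
    bool have_pps;
    bool started; /* true once an IDR arrived after SPS and PPS */
} JoinGate;

/* Feed NALU types in stream order. Returns true if this NALU should be
 * passed to the decoder, false if it must be dropped. */
static bool join_gate_accept(JoinGate *g, int nal_unit_type)
{
    switch (nal_unit_type) {
    case 7: /* SPS: always keep, it configures the decoder */
        g->have_sps = true;
        return true;
    case 8: /* PPS */
        g->have_pps = true;
        return true;
    case 5: /* IDR slice: decoding can start here */
        if (g->have_sps && g->have_pps) {
            g->started = true;
        }
        return g->started;
    default: /* non-IDR slices, SEI, ...: only after a start */
        return g->started;
    }
}
```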

Encode H264

Once you have set up an AVCaptureSession to capture video frames from the iOS camera, you receive each frame as a CMSampleBufferRef in the captureOutput:didOutputSampleBuffer:fromConnection: method of AVCaptureVideoDataOutputSampleBufferDelegate. Now we will focus on how to encode H264 data using VTCompressionSessionRef.

VTCompressionSessionRef

A compression session supports the compression of a sequence of video frames. To create a compression session, call VTCompressionSessionCreate; then you can optionally configure the session using VTSessionSetProperty; then, to encode frames, call VTCompressionSessionEncodeFrame. To force completion of some or all pending frames, call VTCompressionSessionCompleteFrames. When you are done with the session, call VTCompressionSessionInvalidate to tear it down and CFRelease to release your object reference. In this example, the session is created inside the AVCaptureVideoDataOutputSampleBufferDelegate method:

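```objc
// Inside captureOutput:didOutputSampleBuffer:fromConnection:
CVImageBufferRef imageBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
size_t width = CVPixelBufferGetWidth(imageBuffer);
size_t height = CVPixelBufferGetHeight(imageBuffer);

// For simplicity this creates a new session for every frame, so each frame
// starts a new stream and is encoded as a keyframe. A real app would usually
// create the session once, keep it in a property and reuse it.
VTCompressionSessionRef session = NULL;
OSStatus ret = VTCompressionSessionCreate(NULL, (int32_t)width, (int32_t)height,
                                          kCMVideoCodecType_H264, NULL, NULL, NULL,
                                          OutputCallback, NULL, &session);
if (ret == noErr) {
    VTSessionSetProperty(session, kVTCompressionPropertyKey_RealTime, kCFBooleanTrue);

    // Use the capture timestamp instead of a constant value
    CMTime presentationTimestamp = CMSampleBufferGetPresentationTimeStamp(sampleBuffer);
    VTCompressionSessionEncodeFrame(session, imageBuffer, presentationTimestamp,
                                    kCMTimeInvalid, NULL, NULL, NULL);

    // Flush pending frames so OutputCallback runs before the session is torn down
    VTCompressionSessionCompleteFrames(session, kCMTimeInvalid);
}
if (session) {
    VTCompressionSessionInvalidate(session);
    CFRelease(session);
}
```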

In the OutputCallback function, you receive the compressed sample buffer. Check whether it contains an I-frame; if it does, write the SPS and PPS NAL units to the elementary stream first. Finally, write the elementary stream to an .h264 file. If the file plays in VLC, the compression was successful.

Follow these steps to write the elementary stream into an H264 file:

- Initialize an NSMutableData object to store the elementary stream

- Determine whether the sample buffer contains an I-frame

- Initialize the start code and start code length to write before every NAL unit

- Write the SPS and PPS NAL units to the elementary stream before every I-frame

- Loop through all the NAL units in the block buffer and write them to the elementary stream with start codes instead of AVCC length headers

- Write the elementary stream to a .h264 file in the Documents directory

```objc
void OutputCallback(void *outputCallbackRefCon,
                    void *sourceFrameRefCon,
                    OSStatus status,
                    VTEncodeInfoFlags infoFlags,
                    CMSampleBufferRef sampleBuffer) {
    // Check if there were any errors encoding
    if (status != noErr) {
        NSLog(@"Error encoding video, err=%lld", (int64_t)status);
        return;
    }
    // The encoder may drop a frame, in which case there is no data to write
    if (sampleBuffer == NULL || !CMSampleBufferDataIsReady(sampleBuffer)) {
        return;
    }

    // In this example we will use a NSMutableData object to store the
    // elementary stream.
    NSMutableData *elementaryStream = [NSMutableData data];

    // Find out if the sample buffer contains an I-Frame.
    // If so we will write the SPS and PPS NAL units to the elementary stream.
    BOOL isIFrame = NO;
    CFArrayRef attachmentsArray = CMSampleBufferGetSampleAttachmentsArray(sampleBuffer, false);
    if (attachmentsArray != NULL && CFArrayGetCount(attachmentsArray) > 0) {
        CFBooleanRef notSync = NULL;
        CFDictionaryRef dict = CFArrayGetValueAtIndex(attachmentsArray, 0);
        BOOL keyExists = CFDictionaryGetValueIfPresent(dict,
                                                       kCMSampleAttachmentKey_NotSync,
                                                       (const void **)&notSync);
        // An I-Frame is a sync frame
        isIFrame = !keyExists || !CFBooleanGetValue(notSync);
    }

    // This is the start code that we will write to
    // the elementary stream before every NAL unit
    static const size_t startCodeLength = 4;
    static const uint8_t startCode[] = {0x00, 0x00, 0x00, 0x01};

    // Write the SPS and PPS NAL units to the elementary stream before every I-Frame
    if (isIFrame) {
        CMFormatDescriptionRef description = CMSampleBufferGetFormatDescription(sampleBuffer);

        // Find out how many parameter sets there are
        size_t numberOfParameterSets = 0;
        CMVideoFormatDescriptionGetH264ParameterSetAtIndex(description,
                                                           0, NULL, NULL,
                                                           &numberOfParameterSets,
                                                           NULL);

        // Write each parameter set to the elementary stream
        for (size_t i = 0; i < numberOfParameterSets; i++) {
            const uint8_t *parameterSetPointer = NULL;
            size_t parameterSetLength = 0;
            CMVideoFormatDescriptionGetH264ParameterSetAtIndex(description,
                                                               i,
                                                               &parameterSetPointer,
                                                               &parameterSetLength,
                                                               NULL, NULL);
            // Write the parameter set to the elementary stream
            [elementaryStream appendBytes:startCode length:startCodeLength];
            [elementaryStream appendBytes:parameterSetPointer length:parameterSetLength];
        }
    }

    // Get a pointer to the raw AVCC NAL unit data in the sample buffer
    size_t blockBufferLength = 0;
    uint8_t *bufferDataPointer = NULL;
    CMBlockBufferGetDataPointer(CMSampleBufferGetDataBuffer(sampleBuffer),
                                0,
                                NULL,
                                &blockBufferLength,
                                (char **)&bufferDataPointer);

    // Loop through all the NAL units in the block buffer
    // and write them to the elementary stream with
    // start codes instead of AVCC length headers
    size_t bufferOffset = 0;
    static const int AVCCHeaderLength = 4;
    while (bufferOffset + AVCCHeaderLength < blockBufferLength) {
        // Read the NAL unit length
        uint32_t NALUnitLength = 0;
        memcpy(&NALUnitLength, bufferDataPointer + bufferOffset, AVCCHeaderLength);
        // Convert the length value from big-endian to host byte order
        NALUnitLength = CFSwapInt32BigToHost(NALUnitLength);
        // Stop if the length field points past the end of the buffer
        if (bufferOffset + AVCCHeaderLength + NALUnitLength > blockBufferLength) {
            break;
        }
        // Write start code to the elementary stream
        [elementaryStream appendBytes:startCode length:startCodeLength];
        // Write the NAL unit without the AVCC length header to the elementary stream
        [elementaryStream appendBytes:bufferDataPointer + bufferOffset + AVCCHeaderLength
                               length:NALUnitLength];
        // Move to the next NAL unit in the block buffer
        bufferOffset += AVCCHeaderLength + NALUnitLength;
    }

    // Before decompressing, we write the elementary data to a .h264 file in the Documents directory.
    // You can get that file using iTunes => Apps => File Sharing => AVEncoderDemo
    [FileLogger logToFile:elementaryStream];

    // Send the data to be decompressed
    uint8_t *bytes = (uint8_t *)[elementaryStream bytes];
    int size = (int)[elementaryStream length];
    [[CameraSource source] receivedRawVideoFrame:bytes withSize:size];
}
```
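The length-prefix-to-start-code loop in OutputCallback can be isolated from Core Media and tested on its own. This plain C sketch (hypothetical name `avcc_to_annexb`) takes the AVCC bytes plus an optional SPS and PPS and produces the same Annex B layout; it also rejects a length field that runs past the end of the input instead of reading out of bounds.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Converts AVCC data (4-byte big-endian length + NALU, repeated) into
 * Annex B (4-byte start code + NALU). If sps/pps are non-NULL they are
 * written first, as the encoder callback does for I-frames.
 * Returns the number of bytes written, or 0 on malformed input or if
 * dst is too small. */
static size_t avcc_to_annexb(const uint8_t *src, size_t src_len,
                             const uint8_t *sps, size_t sps_len,
                             const uint8_t *pps, size_t pps_len,
                             uint8_t *dst, size_t cap)
{
    static const uint8_t sc[4] = {0x00, 0x00, 0x00, 0x01};
    const uint8_t *params[2] = {sps, pps};
    const size_t param_lens[2] = {sps_len, pps_len};
    size_t out = 0;

    /* Parameter sets first, each with its own start code. */
    for (int i = 0; i < 2; i++) {
        if (params[i] == NULL) {
            continue;
        }
        if (out + 4 + param_lens[i] > cap) {
            return 0;
        }
        memcpy(dst + out, sc, 4);
        memcpy(dst + out + 4, params[i], param_lens[i]);
        out += 4 + param_lens[i];
    }

    /* Then every length-prefixed NALU, with the prefix swapped for a start code. */
    size_t off = 0;
    while (off + 4 <= src_len) {
        uint32_t n = ((uint32_t)src[off] << 24) | ((uint32_t)src[off + 1] << 16) |
                     ((uint32_t)src[off + 2] << 8) | (uint32_t)src[off + 3];
        if (n > src_len - off - 4) {
            return 0; /* length runs past the end of the input */
        }
        if (out + 4 + n > cap) {
            return 0;
        }
        memcpy(dst + out, sc, 4);
        memcpy(dst + out + 4, src + off + 4, n);
        out += 4 + n;
        off += 4 + n;
    }
    return off == src_len ? out : 0; /* leftover bytes mean malformed input */
}
```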

Decode H264

Now we will focus on how to decode H264 data using VTDecompressionSessionRef.

VTDecompressionSessionRef

A decompression session supports the decompression of a sequence of video frames. The session reference is a reference-counted CF object. To create a decompression session, call VTDecompressionSessionCreate; then you can optionally configure the session using VTSessionSetProperty; then, to decode frames, call VTDecompressionSessionDecodeFrame. When you are done with the session, call VTDecompressionSessionInvalidate to tear it down and CFRelease to release your object reference.

We will decode the same elementary stream that we just compressed.

First, declare some properties:

```objc
@property (nonatomic, assign) CMVideoFormatDescriptionRef formatDesc;
@property (nonatomic, assign) VTDecompressionSessionRef decompressionSession;
@property (nonatomic, strong) AVSampleBufferDisplayLayer *videoLayer;
@property (nonatomic, assign) int spsSize;
@property (nonatomic, assign) int ppsSize;
```

At the end of OutputCallback, the encoder hands each chunk of the elementary stream to the decoder:

```objc
// send data to decompress
uint8_t *bytes = (uint8_t *)[elementaryStream bytes];
int size = (int)[elementaryStream length];
[[CameraSource source] receivedRawVideoFrame:bytes withSize:size];
```

Follow the steps to decompress the received raw video frames:

- Find the 2nd and 3rd start codes in the H264 data

- Read the NALU type: type 7 is the SPS NALU

- Type 8 is the PPS NALU

- Allocate enough memory to hold the SPS and PPS parameters

- Create the format description from the H264 parameter sets

- Type 5 is an IDR frame NALU. The SPS and PPS NALUs should always be followed by an IDR (or I-frame) NALU

- Replace the start code with the NALU length, as the AVCC format requires

- NALU type 1 is a non-IDR (or P-frame) picture

- Non-IDR frames are not preceded by an SPS and PPS, so there is no offset; otherwise the approach is the same as for IDR frames

- Finally, send the sample buffer to a VTDecompressionSession or to an AVSampleBufferDisplayLayer

- The render: method in this example shows how to render the sample buffer
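The decoder method relies on what the author knows about their own stream (start code index 0 and short, fixed search windows for the SPS and PPS). A more general approach, sketched here in plain C with a hypothetical `annexb_split` function, walks the whole buffer and records each NALU's offset, size and type, accepting both 3- and 4-byte start codes. As a simplification, a zero byte immediately before 00 00 01 is treated as part of a 4-byte start code.

```c
#include <stddef.h>
#include <stdint.h>

typedef struct {
    size_t offset; /* index of the NAL header byte (after the start code) */
    size_t size;   /* NALU size in bytes, excluding the start code */
    int type;      /* nal_unit_type, header & 0x1F */
} NaluInfo;

/* Splits an Annex B buffer into NAL units. Fills at most max entries
 * of out and returns how many NALUs were recorded. */
static size_t annexb_split(const uint8_t *buf, size_t len,
                           NaluInfo *out, size_t max)
{
    size_t count = 0;
    size_t start = 0; /* first byte of the current NALU */
    int in_nalu = 0;  /* have we passed at least one start code? */
    size_t i = 0;

    while (i + 3 <= len && count < max) {
        if (buf[i] == 0x00 && buf[i + 1] == 0x00 && buf[i + 2] == 0x01) {
            /* A zero byte just before 00 00 01 belongs to a 4-byte start code. */
            size_t sc_begin = (i > 0 && buf[i - 1] == 0x00) ? i - 1 : i;
            if (in_nalu && sc_begin > start) {
                out[count].offset = start;
                out[count].size = sc_begin - start;
                out[count].type = buf[start] & 0x1F;
                count++;
            }
            start = i + 3;
            in_nalu = 1;
            i += 3;
        } else {
            i++;
        }
    }
    /* The last NALU runs to the end of the buffer. */
    if (in_nalu && start < len && count < max) {
        out[count].offset = start;
        out[count].size = len - start;
        out[count].type = buf[start] & 0x1F;
        count++;
    }
    return count;
}
```

With the NALU list in hand, the SPS, PPS and IDR can be located by type instead of by fixed position.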

```objc
-(void) receivedRawVideoFrame:(uint8_t *)frame withSize:(uint32_t)frameSize
{
    OSStatus status = noErr;   // initialized: frames without SPS/PPS skip the code that sets it

    uint8_t *data = NULL;
    uint8_t *pps = NULL;
    uint8_t *sps = NULL;

    // I know how my H.264 data source's NALUs look, so I know the start code index is always 0.
    // If you don't know where it starts, you can use a for loop similar to how I find the 2nd and 3rd start codes
    int startCodeIndex = 0;
    int secondStartCodeIndex = 0;
    int thirdStartCodeIndex = 0;
    int offset = 0;            // where the IDR NALU begins; 0 if this frame carries no SPS/PPS

    long blockLength = 0;

    CMSampleBufferRef sampleBuffer = NULL;
    CMBlockBufferRef blockBuffer = NULL;

    // We need at least a 4-byte start code plus the 1-byte NALU header
    if (frameSize < 5) return;

    int nalu_type = (frame[startCodeIndex + 4] & 0x1F);
    NSLog(@"~~~~~~~ Received NALU Type \"%@\" ~~~~~~~~", naluTypesStrings[nalu_type]);

    // if we haven't already set up our format description with our SPS PPS parameters, we
    // can't process any frames except type 7 that has our parameters
    if (nalu_type != 7 && _formatDesc == NULL)
    {
        NSLog(@"Video error: Frame is not an I Frame and format description is null");
        return;
    }

    // NALU type 7 is the SPS parameter NALU
    if (nalu_type == 7)
    {
        // find where the second (PPS) start code begins (the 0x00 00 00 01 code),
        // from which we also get the length of the first SPS code
        for (int i = startCodeIndex + 4; i < startCodeIndex + 40 && i + 4 < (int)frameSize; i++)
        {
            if (frame[i] == 0x00 && frame[i+1] == 0x00 && frame[i+2] == 0x00 && frame[i+3] == 0x01)
            {
                secondStartCodeIndex = i;
                _spsSize = secondStartCodeIndex;   // includes the header in the size
                break;
            }
        }
        if (secondStartCodeIndex == 0) return;    // no start code found after the SPS

        // find what the second NALU type is
        nalu_type = (frame[secondStartCodeIndex + 4] & 0x1F);
        NSLog(@"~~~~~~~ Received NALU Type \"%@\" ~~~~~~~~", naluTypesStrings[nalu_type]);
    }

    // type 8 is the PPS parameter NALU
    if (nalu_type == 8)
    {
        // find where the NALU after this one starts so we know how long the PPS parameter is.
        // Start right after the PPS start code, because a PPS can be only a few bytes long.
        for (int i = _spsSize + 4; i < _spsSize + 50 && i + 4 < (int)frameSize; i++)
        {
            if (frame[i] == 0x00 && frame[i+1] == 0x00 && frame[i+2] == 0x00 && frame[i+3] == 0x01)
            {
                thirdStartCodeIndex = i;
                _ppsSize = thirdStartCodeIndex - _spsSize;
                break;
            }
        }
        if (thirdStartCodeIndex == 0) return;     // no start code found after the PPS
        offset = thirdStartCodeIndex;

        // allocate enough data to fit the SPS and PPS parameters into our data objects.
        // VTD doesn't want you to include the start code header (4 bytes long) so we subtract 4 here
        sps = malloc(_spsSize - 4);
        pps = malloc(_ppsSize - 4);

        // copy in the actual sps and pps values, again ignoring the 4 byte header
        memcpy(sps, &frame[4], _spsSize - 4);
        memcpy(pps, &frame[_spsSize + 4], _ppsSize - 4);

        // now we set our H264 parameters
        uint8_t *parameterSetPointers[2] = {sps, pps};
        size_t parameterSetSizes[2] = {_spsSize - 4, _ppsSize - 4};

        // release the previous format description (if any) before replacing it
        if (_formatDesc != NULL) {
            CFRelease(_formatDesc);
            _formatDesc = NULL;
        }
        status = CMVideoFormatDescriptionCreateFromH264ParameterSets(kCFAllocatorDefault, 2,
                                                                     (const uint8_t *const *)parameterSetPointers,
                                                                     parameterSetSizes, 4,
                                                                     &_formatDesc);
        NSLog(@"\t\t Creation of CMVideoFormatDescription: %@", (status == noErr) ? @"successful!" : @"failed...");
        if (status != noErr) NSLog(@"\t\t Format Description ERROR type: %d", (int)status);

        // See if decomp session can convert from previous format description
        // to the new one, if not we need to remake the decomp session.
        // This snippet was not necessary for my applications but it could be for yours
        /*BOOL needNewDecompSession = (VTDecompressionSessionCanAcceptFormatDescription(_decompressionSession, _formatDesc) == NO);
        if (needNewDecompSession)
        {
            [self createDecompSession];
        }*/

        // now let's handle the IDR frame that (should) come after the parameter sets
        // I say "should" because that's how I expect my H264 stream to work, YMMV
        nalu_type = (frame[thirdStartCodeIndex + 4] & 0x1F);
        NSLog(@"~~~~~~~ Received NALU Type \"%@\" ~~~~~~~~", naluTypesStrings[nalu_type]);
    }

    // create our VTDecompressionSession. This isn't necessary if you choose to use AVSampleBufferDisplayLayer
    if ((status == noErr) && (_decompressionSession == NULL))
    {
        [self createDecompSession];
    }

    // type 5 is an IDR frame NALU. The SPS and PPS NALUs should always be followed by an IDR (or IFrame) NALU, as far as I know
    if (nalu_type == 5)
    {
        // offset is where the SPS and PPS NALUs end and the IDR frame NALU begins
        blockLength = frameSize - offset;
        data = malloc(blockLength);
        memcpy(data, &frame[offset], blockLength);

        // replace the start code header on this NALU with its size.
        // AVCC format requires that you do this.
        // htonl converts the unsigned int from host to network byte order
        uint32_t dataLength32 = htonl((uint32_t)(blockLength - 4));
        memcpy(data, &dataLength32, sizeof(uint32_t));

        // create a block buffer from the IDR NALU.
        // kCFAllocatorMalloc hands ownership of `data` to the block buffer, which free()s it
        status = CMBlockBufferCreateWithMemoryBlock(NULL, data,   // memoryBlock to hold buffered data
                                                    blockLength,  // block length of the mem block in bytes
                                                    kCFAllocatorMalloc, NULL,
                                                    0,            // offsetToData
                                                    blockLength,  // dataLength of relevant bytes, starting at offsetToData
                                                    0, &blockBuffer);
        if (status == kCMBlockBufferNoErr) data = NULL;   // now owned by blockBuffer
        NSLog(@"\t\t BlockBufferCreation: \t %@", (status == kCMBlockBufferNoErr) ? @"successful!" : @"failed...");
    }

    // NALU type 1 is non-IDR (or PFrame) picture
    if (nalu_type == 1)
    {
        // non-IDR frames are not preceded by an SPS and PPS, so the approach
        // is similar to the IDR frames, just without the offset
        blockLength = frameSize;
        data = malloc(blockLength);
        memcpy(data, &frame[0], blockLength);

        // again, replace the start header with the size of the NALU
        uint32_t dataLength32 = htonl((uint32_t)(blockLength - 4));
        memcpy(data, &dataLength32, sizeof(uint32_t));

        status = CMBlockBufferCreateWithMemoryBlock(NULL, data,   // memoryBlock to hold data
                                                    blockLength,  // overall length of the mem block in bytes
                                                    kCFAllocatorMalloc, NULL,
                                                    0,            // offsetToData
                                                    blockLength,  // dataLength of relevant data bytes, starting at offsetToData
                                                    0, &blockBuffer);
        if (status == kCMBlockBufferNoErr) data = NULL;   // now owned by blockBuffer
        NSLog(@"\t\t BlockBufferCreation: \t %@", (status == kCMBlockBufferNoErr) ? @"successful!" : @"failed...");
    }

    // now create our sample buffer from the block buffer
    if (status == noErr && blockBuffer != NULL)
    {
        // here I'm not bothering with any timing specifics since in my case we displayed all frames immediately
        const size_t sampleSize = blockLength;
        status = CMSampleBufferCreate(kCFAllocatorDefault,
                                      blockBuffer, true, NULL, NULL,
                                      _formatDesc, 1, 0, NULL, 1,
                                      &sampleSize, &sampleBuffer);
        NSLog(@"\t\t SampleBufferCreate: \t %@", (status == noErr) ? @"successful!" : @"failed...");
    }

    if (status == noErr && sampleBuffer != NULL)
    {
        // set some values of the sample buffer's attachments
        CFArrayRef attachments = CMSampleBufferGetSampleAttachmentsArray(sampleBuffer, YES);
        CFMutableDictionaryRef dict = (CFMutableDictionaryRef)CFArrayGetValueAtIndex(attachments, 0);
        CFDictionarySetValue(dict, kCMSampleAttachmentKey_DisplayImmediately, kCFBooleanTrue);

        // either send the samplebuffer to a VTDecompressionSession or to an AVSampleBufferDisplayLayer
        [self render:sampleBuffer];
    }

    // free memory to avoid leaks. The display layer or decompression session
    // retains the sample buffer itself if it still needs it.
    if (sampleBuffer != NULL) CFRelease(sampleBuffer);
    if (blockBuffer != NULL) CFRelease(blockBuffer);
    free(data);   // non-NULL only if block buffer creation failed
    free(sps);
    free(pps);
}
```
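The method above replaces one start code per NALU with that NALU's length. If a frame carries several NALUs (for example several slices), the whole buffer can be converted the same way. This plain C sketch assumes every start code is the 4-byte form, as written by the encoder callback in this article, which is what makes an in-place rewrite possible; `annexb_to_avcc_inplace` is a hypothetical name.

```c
#include <stddef.h>
#include <stdint.h>

/* True if p points at a 4-byte start code 00 00 00 01. */
static int is_sc4(const uint8_t *p)
{
    return p[0] == 0x00 && p[1] == 0x00 && p[2] == 0x00 && p[3] == 0x01;
}

/* Rewrites, in place, every 4-byte start code in an Annex B buffer with
 * the big-endian length of the NALU that follows, producing AVCC.
 * Returns the number of NALUs converted, or -1 if the buffer does not
 * begin with a 4-byte start code. */
static int annexb_to_avcc_inplace(uint8_t *buf, size_t len)
{
    if (len < 4 || !is_sc4(buf)) {
        return -1;
    }
    int count = 0;
    size_t cur = 0; /* position of the current start code */
    while (cur < len) {
        /* Find the next start code before overwriting the current one:
         * a written length of 1 would itself look like a start code. */
        size_t next = cur + 4;
        while (next + 4 <= len && !is_sc4(buf + next)) {
            next++;
        }
        if (next + 4 > len) {
            next = len; /* the last NALU runs to the end */
        }
        uint32_t n = (uint32_t)(next - cur - 4);
        buf[cur] = (uint8_t)(n >> 24);
        buf[cur + 1] = (uint8_t)(n >> 16);
        buf[cur + 2] = (uint8_t)(n >> 8);
        buf[cur + 3] = (uint8_t)n;
        count++;
        cur = next;
    }
    return count;
}
```

Because each length is written only after the following start code has been found, the scan never mistakes a freshly written length for a start code.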

```objc
// Forward declaration, because the callback is defined after createDecompSession
void decompressionSessionDecodeFrameCallback(void *decompressionOutputRefCon,
                                             void *sourceFrameRefCon,
                                             OSStatus status,
                                             VTDecodeInfoFlags infoFlags,
                                             CVImageBufferRef imageBuffer,
                                             CMTime presentationTimeStamp,
                                             CMTime presentationDuration);

-(void) createDecompSession
{
    // make sure to destroy the old VTD session
    if (_decompressionSession != NULL)
    {
        VTDecompressionSessionInvalidate(_decompressionSession);
        CFRelease(_decompressionSession);
        _decompressionSession = NULL;
    }

    VTDecompressionOutputCallbackRecord callBackRecord;
    callBackRecord.decompressionOutputCallback = decompressionSessionDecodeFrameCallback;

    // this is necessary if you need to make calls to Objective C "self" from within the callback method.
    callBackRecord.decompressionOutputRefCon = (__bridge void *)self;

    // you can set some desired attributes for the destination pixel buffer. I didn't use this but you may
    // if you need to set some attributes, be sure to uncomment the dictionary in VTDecompressionSessionCreate
    /*NSDictionary *destinationImageBufferAttributes = [NSDictionary dictionaryWithObjectsAndKeys:
        [NSNumber numberWithBool:YES],
        (id)kCVPixelBufferOpenGLESCompatibilityKey,
        nil];*/

    OSStatus status = VTDecompressionSessionCreate(NULL, _formatDesc, NULL,
                                                   NULL, // (__bridge CFDictionaryRef)(destinationImageBufferAttributes)
                                                   &callBackRecord, &_decompressionSession);
    NSLog(@"Video Decompression Session Create: \t %@", (status == noErr) ? @"successful!" : @"failed...");
    if (status != noErr) NSLog(@"\t\t VTD ERROR type: %d", (int)status);
}

void decompressionSessionDecodeFrameCallback(void *decompressionOutputRefCon,
                                             void *sourceFrameRefCon,
                                             OSStatus status,
                                             VTDecodeInfoFlags infoFlags,
                                             CVImageBufferRef imageBuffer,
                                             CMTime presentationTimeStamp,
                                             CMTime presentationDuration)
{
    if (status != noErr)
    {
        NSError *error = [NSError errorWithDomain:NSOSStatusErrorDomain code:status userInfo:nil];
        NSLog(@"Decompression error: %@", error);
    }
    else
    {
        NSLog(@"Decompressed successfully");
    }
}

- (void) render:(CMSampleBufferRef)sampleBuffer
{
    /*
    // Alternative: decode with the VTDecompressionSession instead of the display layer.
    // sampleBuffer is owned by the caller, so don't release it here.
    VTDecodeFrameFlags flags = kVTDecodeFrame_EnableAsynchronousDecompression;
    VTDecodeInfoFlags flagOut;
    VTDecompressionSessionDecodeFrame(_decompressionSession, sampleBuffer, flags,
                                      NULL, &flagOut);
    */

    // if you're using AVSampleBufferDisplayLayer, you only need to use this line of code
    if (_videoLayer) {
        NSLog(@"Success ****");
        [_videoLayer enqueueSampleBuffer:sampleBuffer];
    }
}
```

This article is meant to help developers use VideoToolbox, which was made available in iOS 8 and gives direct access to hardware video encoding and decoding.

Prior to this, video encoding and decoding were done using third-party frameworks, which were slower and had higher latency.

By- Ashish Chaudhary

Principal Software Engineer (iOS)

Mobile Expert

April 30, 2017
