In the rapidly evolving landscape of Generative AI, Retrieval-Augmented Generation (RAG) models have emerged as powerful tools, combining the strengths of large language models with external knowledge bases. However, as the size of these knowledge bases grows, ensuring efficient and rapid retrieval becomes paramount. This is where Hierarchical Navigable Small World (HNSW) indexes come into play. In this blog post, we'll explore how HNSW indexes can significantly improve the performance of RAG-based applications using PostgreSQL and PgVector, backed by a practical experiment involving a dataset with one million rows.

Understanding RAG and Its Performance Challenges

Retrieval-Augmented Generation applications integrate large language models with external databases or knowledge bases. Instead of relying solely on the pre-trained knowledge embedded within the model, RAG models fetch relevant information from external sources in real time, enhancing the accuracy and relevance of generated responses.

However, as the size of the knowledge base grows, retrieval latency can become a bottleneck. Efficiently searching through millions of embeddings to find the most relevant pieces of information requires optimized indexing and search algorithms.

Introducing HNSW Indexes

Hierarchical Navigable Small World (HNSW indexes) is an advanced graph-based indexing method designed for approximate nearest neighbor searches. Unlike traditional indexing methods, HNSW indexes in PostgreSQL create a multi-layered graph structure that allows for rapid traversal and efficient similarity searches, making it particularly suited for high-dimensional data like embeddings used in RAG models.

By leveraging HNSW indexes, databases like PostgreSQL—enhanced with extensions like PgVector—can perform similarity searches much faster, drastically reducing retrieval times in RAG applications.

Setting Up the Experiment

To quantify the performance improvements brought by HNSW indexes, we conducted an experiment using PostgreSQL and PgVector on a substantial dataset. Here's a detailed walkthrough of the process.

Dataset Overview

We utilized the test.csv file from the MeDAL Dataset, which comprises 1 million rows. The MeDAL (Medical Abbreviation Disambiguation) Dataset is a large-scale medical text dataset specifically curated for the task of abbreviation disambiguation in the medical domain. Each row contains textual content that we aim to embed and store in the PostgreSQL database for retrieval.

Creating the PostgreSQL Table

First, we need to set up a PostgreSQL table optimized for storing embeddings. Using PgVector, a PostgreSQL extension for vector similarity searches in RAG Generative AI optimization, we define a table structure that accommodates our data.

CREATE TABLE file_embeddings (

id SERIAL PRIMARY KEY,

embeddings vector(384),

content TEXT NOT NULL

);

Explanation:

- id: A unique identifier for each row, auto-incremented.

- embeddings: A vector column with 384 dimensions to store the generated embeddings.

- content: The textual content from which embeddings are derived.

This structure ensures efficient storage and retrieval of both the textual data and their corresponding embeddings.

Generating Embeddings

To generate embeddings for the textual content, we employed the "all-MiniLM-L12-v2" model from the SentenceTransformer Python package.

Note: Generating embeddings is a time-intensive task. On a MacBook Air M2 with 24GB RAM, it took approximately 12 hours to insert all the rows. While a multi-threaded approach could have expedited this process, the experiment was allowed to run overnight.

Analyzing PostgreSQL Table Size

Understanding the storage footprint of our data is crucial for performance tuning. We conducted several queries to ascertain the size of the table and its individual columns.

Total Number of Rows

SELECT COUNT(*) FROM file_embeddings;| count |

| 1000000 |

Total Table Size

SELECT pg_size_pretty(pg_relation_size('file_embeddings')) AS data_size;| data_size |

| 1064 MB |

Insight: The entire table occupies approximately 1.064 GB, which is manageable but sets the stage for optimization.

Size Breakdown by Column

SELECT

pg_size_pretty(SUM(pg_column_size(id))) AS total_id_size,

pg_size_pretty(SUM(pg_column_size(embeddings))) AS total_embeddings_size,

pg_size_pretty(SUM(pg_column_size(content))) AS total_content_size

FROM

file_embeddings;

| total_id_size | total_embeddings_size | total_content_size |

| 3906 kB | 1469 MB | 811 MB |

Interpretation:

- id: Approximately 3.906 MB

- embeddings: Approximately 1.469 GB

- content: Approximately 811 MB

The embeddings column is the most storage-intensive, underscoring the importance of optimizing its retrieval.

Note on Table Size vs. Column Sizes:

You might notice that the Total Table Size (pg_relation_size) reported as 1.064 GB is less than the sum of the individual column sizes (~2.3 GB). This discrepancy is due to PostgreSQL's TOAST (The Oversized-Attribute Storage Technique) mechanism.

What is TOAST?

TOAST is PostgreSQL's method for handling large data fields that exceed a certain size threshold. Instead of storing all the data directly within the main table, TOAST compresses and moves large column values (like our embeddings and content columns) into a separate storage area called a TOAST table. This not only optimizes storage by compressing data but also ensures that the main table remains efficient for operations that don't require accessing the large columns.

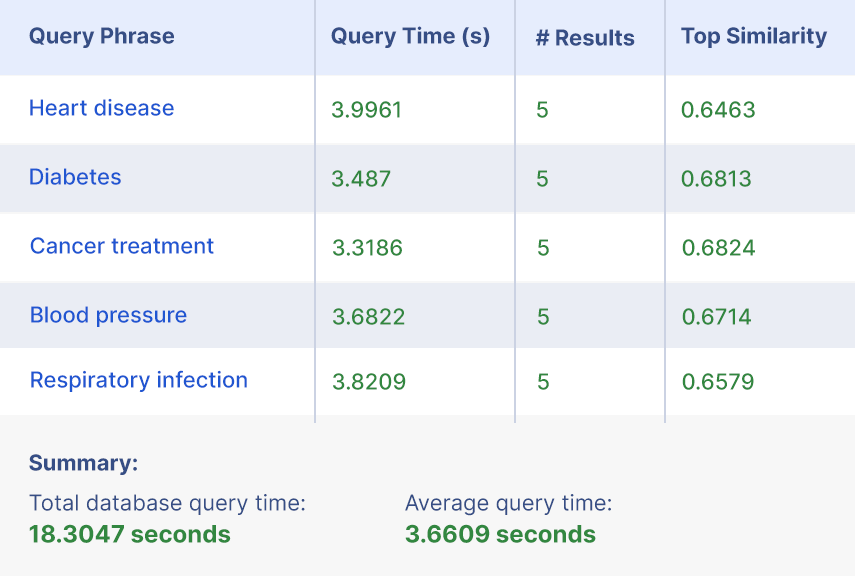

Performance Before Indexing

To establish a performance baseline, we executed a set of basic similarity search queries without any indexing. These queries simulate the typical retrieval operations in a RAG-based application.

Sample Queries and Results:

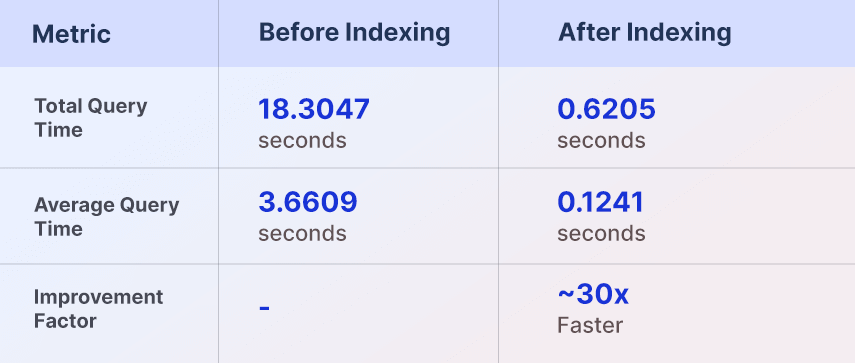

Summary:

Total database query time: 18.3047 seconds

Average query time: 3.6609 seconds

Analysis:

- Total Query Time: Approximately 18.3 seconds for five queries.

- Average Query Time: Around 3.66 seconds per query.

These results highlight the need for optimization, especially when scaling up to more extensive datasets or higher query frequencies in production environments.

Implementing HNSW Indexes

To enhance query performance, we introduced an HNSW index on the embeddings column. This index facilitates rapid approximate nearest neighbor searches, significantly reducing retrieval times.

CREATE INDEX ON file_embeddings USING hnsw (embeddings vector_cosine_ops);Details:

- Index Type: HNSW (Hierarchical Navigable Small World)

- Operator Class: vector_cosine_ops specifies the use of cosine similarity for vector operations.

Time Taken: Building the HNSW index on 1 million rows took approximately 33 minutes. While this is a considerable upfront cost, the trade-off is justified by the substantial performance gains during query operations.

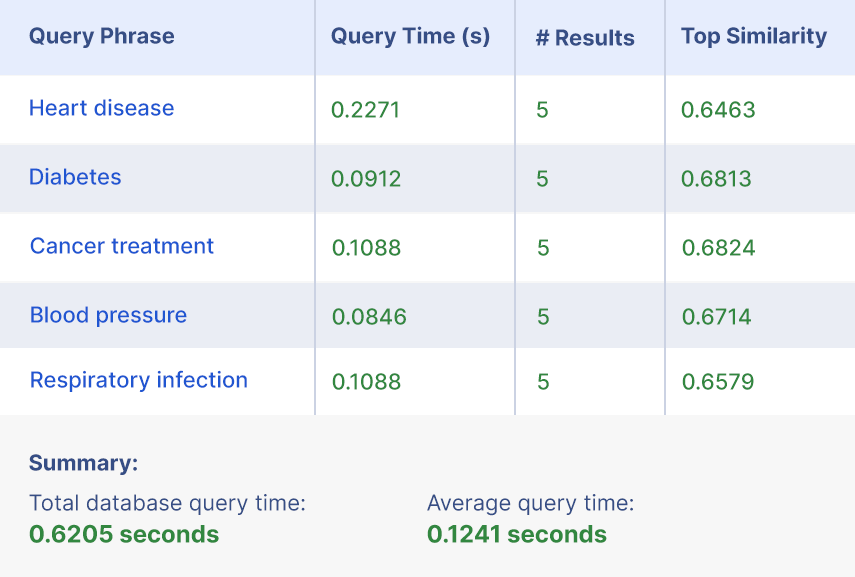

Performance After Indexing

Post-indexing, we reran the same set of similarity search queries to assess the improvements.

Optimized Queries and Results:

Summary:

Total database query time: 0.6205 seconds

Average query time: 0.1241 seconds

Comparison:

| Metric | Before Indexing | After Indexing |

| Total Query Time | 18.3047 seconds | 0.6205 seconds |

| Average Query Time | 3.6609 seconds | 0.1241 seconds |

| Improvement Factor | - | ~30x faster |

Key Observations:

- Total Query Time decreased from 18.3 seconds to 0.62 seconds.

- Average Query Time per query dropped from 3.66 seconds to 0.124 seconds.

- This represents an approximate 30-fold improvement in query performance.

Conclusion

The experiment clearly demonstrates the transformative impact of HNSW indexes on RAG-based generative AI applications. By integrating HNSW indexes within PostgreSQL using PgVector, we achieved a dramatic reduction in similarity search times, from several seconds per query to mere milliseconds. This enhancement not only accelerates real-time data retrieval but also scales seamlessly with growing datasets, ensuring that RAG models remain responsive and efficient even as the underlying knowledge bases expand.

For developers and data scientists working with large-scale generative AI systems, implementing HNSW indexes offers a straightforward yet highly effective strategy to optimize performance. As the demand for real-time, accurate, and scalable AI-driven applications continues to surge, leveraging advanced indexing techniques like HNSW will be pivotal in meeting these challenges head-on.

November 13, 2024

November 13, 2024