Understanding Tokens, Costs, and Optimization Strategies for 2025

Large language models are now deeply embedded in business software, so understanding how they are priced matters to developers, product managers, and organizations. Unlike traditional licensing, LLM APIs work on a consumption model built around a key unit: the token. This guide explains LLM API costs, from basic token concepts to advanced optimization methods, so you can budget for them with confidence.

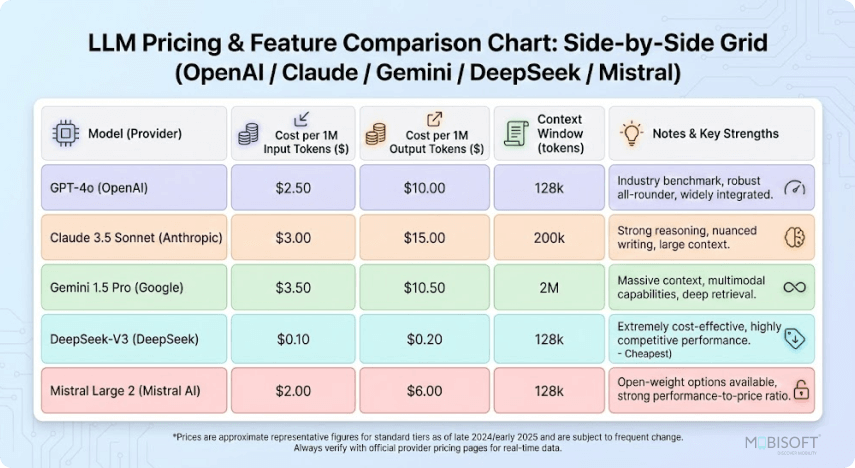

The LLM API landscape in 2025 includes key providers like OpenAI, Anthropic's Claude, Google's Gemini, and xAI's Grok. Each has distinct pricing, capabilities, and use cases. By the end of this guide, you'll know how to calculate costs accurately, choose the right model, and apply strategies that can reduce your AI spending by up to 90%.

What is a Token?

A token is the fundamental unit that LLMs use to process text, and it is the basis of LLM API pricing. Rather than reading character by character or word by word, language models break text into smaller chunks called tokens. All LLM processing and billing is measured in these units.

How Tokenization Works

When you send text to an LLM, the model's tokenizer splits it into tokens using an algorithm such as Byte-Pair Encoding. Different models use different tokenizers, so the same text can produce different token counts, and therefore different costs, depending on your provider. As a general rule:

- 1,000 tokens approximately equal 750 words in English

- One token roughly corresponds to 4 characters of English text

- A typical page of text (500 words) uses approximately 667 tokens

- Non-English languages often require more tokens per word

- Code and special characters may tokenize differently from natural language

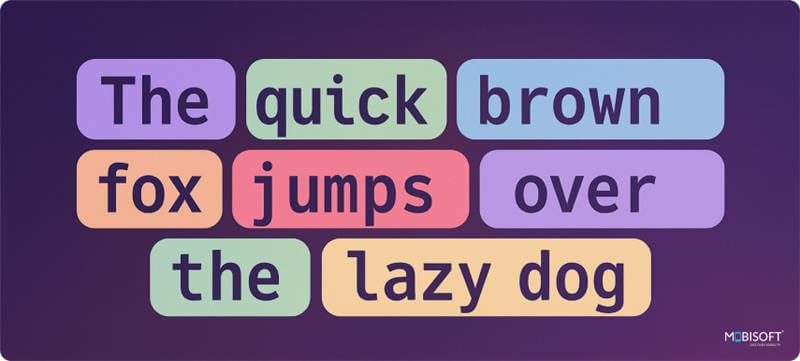

For example, the sentence "The quick brown fox jumps over the lazy dog" might be tokenized as:

"The", " quick", " brown", " fox", " jumps", " over", " the", " lazy", " dog"

resulting in 9 tokens. Notice how spaces are often attached to the following word as part of the same token.
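
The rules of thumb above can be turned into a quick estimator. The sketch below is a rough heuristic only, not a real tokenizer; the function name `estimate_tokens` and the choice to average the character-based and word-based estimates are assumptions made for illustration.

```python
def estimate_tokens(text: str) -> int:
    """Rough English-only token estimate from the rules of thumb above.

    Assumption: average the character-based (~4 chars per token) and
    word-based (~750 words per 1,000 tokens) estimates.
    This is a heuristic, not a real tokenizer.
    """
    by_chars = len(text) / 4             # ~4 characters per token
    by_words = len(text.split()) / 0.75  # ~0.75 words per token
    return round((by_chars + by_words) / 2)


sentence = "The quick brown fox jumps over the lazy dog"
print(estimate_tokens(sentence))
```

Expect the estimate to differ from the count a real tokenizer reports. For billing-grade numbers, use the provider's own tokenizer or the token counts returned in API responses.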

Why Tokens Matter for Pricing

Token-based billing ties your costs directly to the computing resources used. More tokens mean more processing power, more memory, and more time. The model suits developers because it offers fine-grained cost control: you pay only for what you use, which makes it feasible to scale from a small test to a company-wide deployment.

Understanding Input and Output Tokens

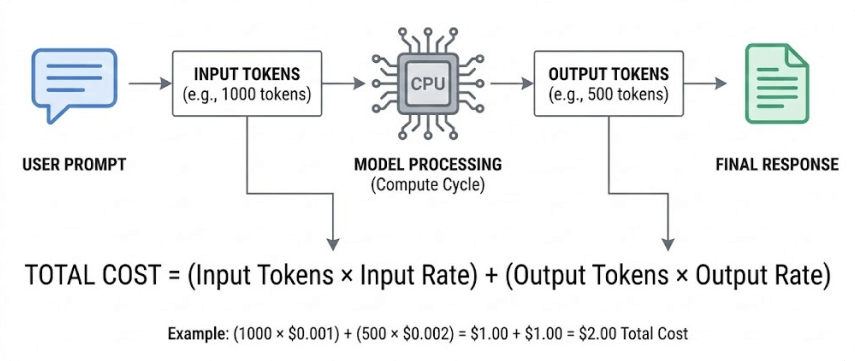

Providers distinguish between two types of tokens, each priced differently to reflect the computational resources required in LLM API pricing.

Input Tokens (Prompt Tokens)

Input tokens represent all the text you send to the model, including:

- System prompts and instructions

- User messages and queries

- Context documents and reference materials

- Conversation history in multi-turn chats

- Few-shot examples

- Tool and function definitions

Input tokens are generally less expensive than output tokens because the model only needs to process and understand them, not generate new content.

Output Tokens (Completion Tokens)

Output tokens are the text generated by the model in response to your input. These usually cost two to five times more than input tokens because generation is sequential: each new output token must be predicted one after the other, which requires the model to calculate a probability distribution across its entire vocabulary for every token it produces.

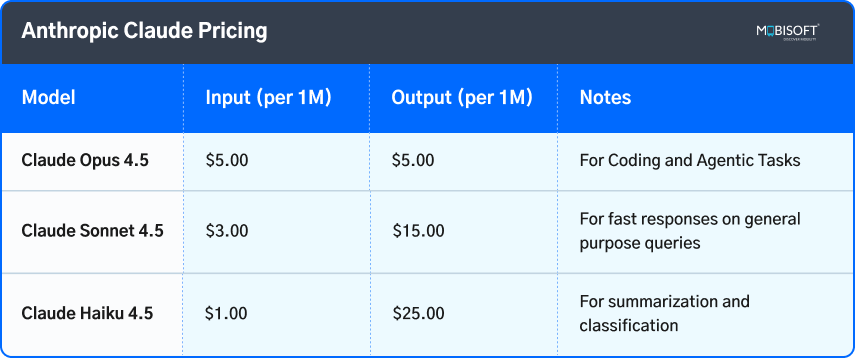

For example, with Claude Sonnet 4.5, input tokens cost $3 per million while output tokens cost $15 per million, a 5:1 ratio. This pricing asymmetry has significant implications for application design: applications that generate lengthy responses will incur higher costs than those producing short, focused outputs.
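
To see the effect of the input/output asymmetry, the following sketch prices two requests with the same prompt but different response lengths. The function name `request_cost` and the token counts are illustrative assumptions; the rates are the Claude Sonnet 4.5 figures quoted above.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_rate: float, output_rate: float) -> float:
    """Cost of one request in USD; rates are USD per million tokens."""
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


# Rates quoted above for Claude Sonnet 4.5: $3 input, $15 output per million.
# The token counts are made-up examples.
short_answer = request_cost(2_000, 200, 3.0, 15.0)
long_answer = request_cost(2_000, 2_000, 3.0, 15.0)
print(f"short: ${short_answer:.4f}, long: ${long_answer:.4f}")
```

With the same 2,000-token prompt, the longer response costs four times as much in total, because output dominates the bill once responses grow.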

Thinking Tokens (Reasoning Models)

Modern reasoning models like OpenAI's o3 and Claude with extended thinking introduce a third category: thinking tokens. These represent the model's internal reasoning process before producing a final answer. Reasoning can consume 10 to 30 times more tokens than the visible output, significantly increasing costs for complex tasks. Thinking tokens are commonly billed as output tokens, though some providers charge them at different rates.
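
A rough way to budget for reasoning is to scale the visible output by an assumed multiplier. The sketch below assumes thinking tokens are billed at the output rate, which you should verify for your provider; the function name, the multiplier, and the token counts are hypothetical, and the rates reuse the $3/$15 figures from the previous section purely as an example.

```python
def reasoning_request_cost(input_tokens: int, visible_output_tokens: int,
                           thinking_multiplier: float,
                           input_rate: float, output_rate: float) -> float:
    """Estimate request cost in USD when hidden reasoning is billed as output.

    Assumption: thinking tokens = visible output x multiplier, charged at
    the output rate. Rates are USD per million tokens.
    """
    thinking_tokens = visible_output_tokens * thinking_multiplier
    billed_output = visible_output_tokens + thinking_tokens
    return (input_tokens * input_rate + billed_output * output_rate) / 1_000_000


without_thinking = reasoning_request_cost(500, 300, 0, 3.0, 15.0)
with_thinking = reasoning_request_cost(500, 300, 10, 3.0, 15.0)
print(f"no reasoning: ${without_thinking:.4f}, 10x reasoning: ${with_thinking:.4f}")
```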

Standard API Calls vs. Batch Processing

LLM providers offer different processing modes to accommodate various use cases and budget requirements.

Standard (On-Demand) API Calls

Standard API calls are synchronous requests where you send a prompt and receive an immediate response. This mode is ideal for:

- Real-time applications requiring immediate responses

- Interactive chatbots and conversational AI

- User-facing features where latency matters

- Applications needing streaming responses

Standard calls are priced at the full listed rate and typically have higher rate limits to support production workloads.

Batch API Processing

Batch processing allows you to submit large volumes of requests asynchronously, with results typically returned within 24 hours. The primary benefit is cost: most providers offer a 50% discount on both input and output tokens for batch requests, which makes batch a budget-friendly option for high-volume workflows.

Batch processing is ideal for:

- Large-scale data processing and analysis

- Document summarization at scale

- Content generation for marketing or SEO

- Evaluation and testing pipelines

- Any workflow where immediate results are not required

For instance, with Anthropic's Claude API, using the Batch API reduces Claude Sonnet 4.5 pricing from $3/$15 per million tokens (input/output) to $1.50/$7.50. This cuts costs in half for suitable workloads.
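
The batch discount is a flat multiplier on both rates, as this small sketch shows. The helper name `batch_rates` and the default discount constant are illustrative assumptions; confirm the actual discount with your provider.

```python
BATCH_DISCOUNT = 0.50  # 50% off, the typical figure quoted above


def batch_rates(input_rate: float, output_rate: float,
                discount: float = BATCH_DISCOUNT) -> tuple[float, float]:
    """Return (input, output) rates in USD per million tokens after the discount."""
    return input_rate * (1 - discount), output_rate * (1 - discount)


# Claude Sonnet 4.5 list rates from the example above.
print(batch_rates(3.0, 15.0))
```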

Cached Input Tokens and Prompt Caching

Prompt caching is one of the most powerful cost optimization techniques available, potentially reducing LLM API cost by up to 90% for suitable applications.

What is Prompt Caching?

When an LLM processes a prompt, it computes internal states known as key-value (K-V) pairs, which represent the model's processed form of your input. Prompt caching saves these states so that later requests starting with the same prefix can skip recomputing them and reuse the stored result, reducing token cost.

Imagine you keep asking questions about one document. Without caching, the model reads and processes the whole document every time. With caching, the model reuses its stored states and only needs to process your new question.

How Prompt Caching Works

The caching process involves three key concepts:

- Cache Write: When you first send a prompt, the provider stores the computed K-V pairs. This initial write may cost more than standard input tokens (typically 1.25x to 2x, depending on the provider and cache duration).

- Cache Hit: Subsequent requests with an identical prefix retrieve the cached states instead of recomputing them. Cache hits are dramatically cheaper, typically costing 10% of a standard input token (a 90% discount).

- Cache Miss: If the prefix doesn't match any cached content (due to expiration or modification), the request is processed as normal and may create a new cache entry.

Enabling and Using Prompt Caching

Implementation varies by provider:

- OpenAI: Caching is automatic for prompts exceeding 1,024 tokens. Simply structure your prompts with static content at the beginning; no configuration is needed.

- Anthropic (Claude): Requires explicit cache_control markers on content blocks to designate cache breakpoints. Offers both 5-minute (default) and 1-hour cache durations.

- Google (Gemini): Supports both implicit and explicit caching; explicit caches have a configurable TTL (one hour by default).

- xAI (Grok): Automatic caching enabled for all requests without user configuration.
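
As an illustration of explicit caching, the sketch below builds a request body in the shape Anthropic documents for its Messages API, with a `cache_control` field on the static system block. It only constructs the payload and does not call the API; the helper name, the model alias, and the `max_tokens` value are placeholder assumptions, so check field names against the current API reference before relying on them.

```python
import json


def build_cached_request(reference_doc: str, question: str) -> dict:
    """Build a Messages-style request body with one cache breakpoint.

    Assumptions: the model alias and max_tokens are placeholders chosen
    for illustration only.
    """
    return {
        "model": "claude-sonnet-4-5",  # placeholder model alias
        "max_tokens": 512,
        "system": [
            {
                "type": "text",
                "text": reference_doc,  # static content goes first
                # Marks the end of the cacheable prefix.
                "cache_control": {"type": "ephemeral"},
            }
        ],
        # Variable content (the user's question) comes after the breakpoint.
        "messages": [{"role": "user", "content": question}],
    }


body = build_cached_request("<long static document>", "What is the notice period?")
print(json.dumps(body, indent=2))
```

Keeping the document in the system block and the question in the final message means the cacheable prefix stays byte-for-byte identical across queries.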

Best Practices for Prompt Caching

- Structure prompts strategically: Place static content (system instructions, reference documents, and few-shot examples) at the beginning of your prompt, with variable content (user queries) at the end.

- Maintain consistency: Cache matching requires exact prefix matches. Any change, even whitespace, causes a cache miss.

- Batch-related queries: Process multiple questions about the same document in quick succession to maximize cache utilization within the TTL window.

- Monitor cache metrics: Track cache hit rates through API response fields to verify caching is working effectively.
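
For the monitoring step, a hit rate can be computed from the usage data that responses return. The sketch below assumes Anthropic-style usage fields (`input_tokens`, `cache_creation_input_tokens`, `cache_read_input_tokens`), where `input_tokens` counts only uncached tokens; other providers expose different field names, and the sample records are made up.

```python
def cache_hit_rate(usages: list[dict]) -> float:
    """Share of all input tokens that were served from cache.

    Assumption: each dict mirrors Anthropic-style usage fields, where
    `input_tokens` excludes cached tokens.
    """
    read = sum(u.get("cache_read_input_tokens", 0) for u in usages)
    written = sum(u.get("cache_creation_input_tokens", 0) for u in usages)
    uncached = sum(u.get("input_tokens", 0) for u in usages)
    total = read + written + uncached
    return read / total if total else 0.0


# Made-up usage records: one cache write followed by two cache hits.
usages = [
    {"input_tokens": 120, "cache_creation_input_tokens": 50_000, "cache_read_input_tokens": 0},
    {"input_tokens": 95, "cache_creation_input_tokens": 0, "cache_read_input_tokens": 50_000},
    {"input_tokens": 110, "cache_creation_input_tokens": 0, "cache_read_input_tokens": 50_000},
]
print(f"{cache_hit_rate(usages):.1%}")
```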

Prompt Caching Pricing Example

Consider a document Q&A application using Claude Sonnet 4.5 with a 50,000-token reference document:

- Without caching: 50,000 tokens × $3/million = $0.15 per query

- First query (cache write at 1.25x): 50,000 × $3.75/million = $0.1875

- Subsequent queries (cache hit at 0.1x): 50,000 × $0.30/million = $0.015

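- Break-even arrives by the second query: two cached queries cost $0.2025 in total versus $0.30 uncached, and every later cache hit is 90% cheaper on the cached portion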
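
The arithmetic in this example can be extended to any number of queries. The sketch below covers only the 50,000-token document portion and assumes every query after the first hits the cache (no expiry); the constant and function names are illustrative.

```python
DOC_TOKENS = 50_000
BASE_RATE = 3.00               # USD per million input tokens
WRITE_RATE = BASE_RATE * 1.25  # cache write premium
READ_RATE = BASE_RATE * 0.10   # cache hit price


def cumulative_cost(queries: int, cached: bool) -> float:
    """Total document-portion cost in USD for a number of queries.

    Assumption: with caching, the first query writes the cache and all
    later queries hit it.
    """
    if queries <= 0:
        return 0.0
    if not cached:
        return queries * DOC_TOKENS * BASE_RATE / 1_000_000
    first = DOC_TOKENS * WRITE_RATE / 1_000_000
    rest = (queries - 1) * DOC_TOKENS * READ_RATE / 1_000_000
    return first + rest


for n in (1, 2, 10):
    print(n, round(cumulative_cost(n, False), 4), round(cumulative_cost(n, True), 4))
```

A single query is slightly more expensive with caching because of the write premium, so caching only pays off when the same prefix is reused within the cache lifetime.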

Pricing for Image Inputs

Modern multimodal LLMs can process images as part of their input. The pricing is typically calculated by converting images to equivalent token counts.

How Image Input Pricing Works

When you send an image to a vision-capable model, the image is processed and converted into a token-equivalent count based on its resolution and detail level. Higher resolution images consume more tokens. For example:

- A typical 1024×1024 image consumes approximately 700 to 1,300 tokens, depending on the model

- Google Gemini charges 1,290 tokens for a 1024×1024 image

- OpenAI models vary based on the "detail" parameter (low vs. high)

Image Input Pricing by Provider

- OpenAI (GPT-4o, GPT-4o-mini): Images are charged at standard text token rates. Low detail images use fewer tokens (around 85 tokens), while high detail images scale with resolution.

- Anthropic (Claude): Images are converted to tokens and charged at input token rates. The token count depends on image dimensions.

- Google (Gemini): Approximately 1,290 tokens per 1024×1024 image, charged at the model's input rate.

- xAI (Grok): Vision models support image inputs with tokens charged based on image complexity.
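
Because images are billed as input tokens, their cost follows the same formula as text. In the sketch below, 1,290 tokens per image is the Gemini figure quoted above, while the $1.00 per million input rate and the image volume are placeholders rather than published prices; the function name is also an assumption.

```python
def image_input_cost(num_images: int, tokens_per_image: int,
                     input_rate: float) -> float:
    """Cost in USD of sending images; input_rate is USD per million tokens."""
    return num_images * tokens_per_image * input_rate / 1_000_000


# 1,290 tokens per 1024x1024 image (figure quoted above).
# The $1.00/M rate is a placeholder, not a real published price.
monthly_images = 10_000
print(f"${image_input_cost(monthly_images, 1_290, 1.00):.2f}")
```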

Pricing for Image Outputs

Image generation APIs create visual content from text prompts, with pricing structures that differ significantly from text-based models.

OpenAI Image Generation (GPT Image)

OpenAI offers multiple image generation models with per-image pricing:

- GPT Image 1: $0.01 (low quality), $0.04 (medium), $0.17 (high) for square images

- GPT Image 1 Mini: The most affordable option for high-volume generation with $0.005 to $0.052 per image.

Google Imagen

Google prices Gemini image generation per output token, while Imagen models have their own pricing tiers:

- Gemini 2.5 Flash Image: $30 per million output tokens (approximately $0.039 per image)

- Gemini 3 Pro Image: $120 per million output tokens (approximately $0.134 per high-resolution image)

- Imagen 4.0 models available with varying pricing tiers

API Pricing Comparison by Provider

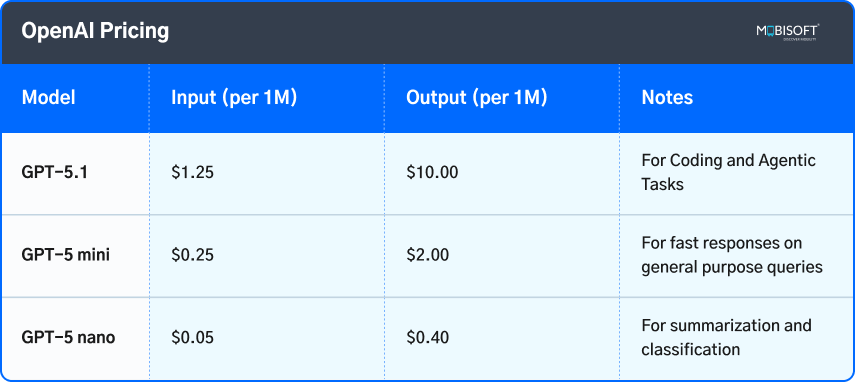

The following tables summarize current pricing for major LLM providers as of November 2025. All prices are per million tokens unless otherwise noted.

OpenAI Pricing

Official Pricing Page: https://openai.com/api/pricing

Anthropic Claude Pricing

Official Pricing Page: https://claude.com/pricing#api

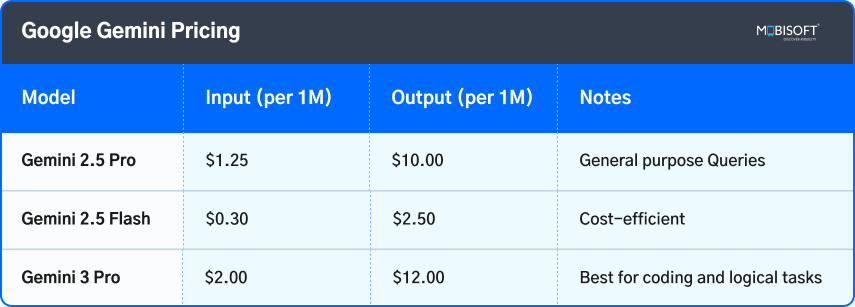

Google Gemini Pricing

Official Pricing Page: https://ai.google.dev/gemini-api/docs/pricing

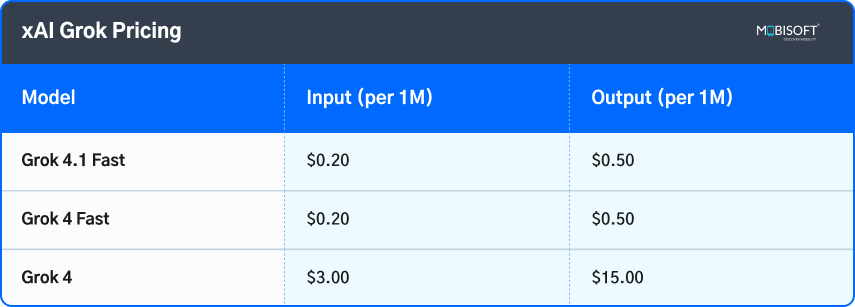

xAI Grok Pricing

Official Pricing Page: https://x.ai/api

Tips for Optimizing LLM API Costs

Implementing effective cost optimization strategies can reduce your LLM API cost by 50% to 90% without sacrificing quality. Here are proven techniques used by production applications.

Choose the Right Model for Each Task

Not every task requires your most capable (and expensive) model. Implement model routing to direct requests to appropriate tiers:

- Use small/mini models for classification, simple Q&A, and routing decisions

- Reserve flagship models for complex reasoning, creative tasks, and high-stakes outputs

- Consider a tiered approach: route 70% of queries to cheap models, escalating only when needed

- Benchmark smaller models regularly: they improve rapidly and may eventually handle tasks that previously required larger ones
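
A model router can start as a few explicit rules. The sketch below is a minimal illustration; the tier names, task labels, and token threshold are all hypothetical and would need tuning against your own quality benchmarks.

```python
def choose_model(task_type: str, prompt_tokens: int) -> str:
    """Pick a model tier for a request.

    Assumption: tier names, task labels, and the 4,000-token threshold
    are hypothetical examples, not provider recommendations.
    """
    simple_tasks = {"classification", "routing", "simple_qa"}
    demanding_tasks = {"complex_reasoning", "creative", "high_stakes"}
    if task_type in simple_tasks and prompt_tokens < 4_000:
        return "small"
    if task_type in demanding_tasks:
        return "flagship"
    return "mid"


print(choose_model("classification", 300))
print(choose_model("complex_reasoning", 300))
```

In practice the rules are often paired with an escalation path: if the small model's answer fails a validation check, the request is retried on a larger tier.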

Optimize Your Prompts

Every unnecessary token in your prompt costs money. Implement these prompt optimization techniques:

- Remove redundant instructions and verbose explanations

- Use concise system prompts, compressing without losing essential context

- Trim conversation history to include only relevant context

- Use abbreviations or shorthand where the model can still understand

- Implement context summarization for long conversations instead of sending the full history
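
Trimming conversation history can be done with a simple token budget. The sketch below keeps the most recent messages that fit; the function name, the message shape, and the default counter (the roughly 4 characters per token heuristic) are assumptions, and a real tokenizer would give more accurate counts.

```python
def trim_history(messages: list[dict], budget_tokens: int,
                 count_tokens=lambda text: max(1, len(text) // 4)) -> list[dict]:
    """Keep the most recent messages that fit within budget_tokens.

    Assumption: each message is a dict with a "content" string, and the
    default counter uses the ~4 characters per token heuristic.
    """
    kept, used = [], 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message["content"])
        if used + cost > budget_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))         # restore chronological order


history = [
    {"role": "user", "content": "a" * 400},      # ~100 tokens
    {"role": "assistant", "content": "b" * 200},  # ~50 tokens
    {"role": "user", "content": "c" * 40},        # ~10 tokens
]
print(len(trim_history(history, budget_tokens=70)))
```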

Control Output Length

Output tokens are significantly more expensive than input tokens. Control them by:

- Setting appropriate max_tokens limits in API calls

- Instructing the model to be concise in your system prompt

- Requesting structured outputs (JSON) instead of verbose prose where applicable

- Using few-shot examples that demonstrate the desired response length

Leverage Caching Aggressively

Prompt caching offers up to 90% savings on input tokens:

- Structure prompts with static content at the beginning

- Batch queries about the same document or context together

- Maintain consistent formatting to ensure cache hits

- Monitor cache hit rates and optimize accordingly

Use Batch Processing for Non-Urgent Work

The Batch API provides a 50% discount for asynchronous processing:

- Queue content generation, summarization, and analysis tasks for batch processing

- Schedule batch jobs during off-peak hours

- Design workflows that can tolerate 24-hour turnaround times

Implement Semantic Caching

Beyond provider-level caching, implement your own application-level cache:

- Cache responses to frequently asked questions

- Use embedding similarity to find and reuse semantically similar query results

- Store and retrieve common outputs without making API calls
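
The idea behind a semantic cache can be shown with a toy example. In the sketch below, `embed` is a bag-of-words stand-in for a real embedding model, and the `SemanticCache` class, its 0.8 similarity threshold, and the sample queries are all illustrative assumptions rather than a production design.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a stand-in for a real embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold  # hypothetical similarity cutoff
        self.entries: list[tuple[Counter, str]] = []

    def get(self, query: str):
        """Return a cached response for a similar query, or None."""
        vector = embed(query)
        best = max(self.entries, key=lambda e: cosine(vector, e[0]), default=None)
        if best is not None and cosine(vector, best[0]) >= self.threshold:
            return best[1]
        return None

    def put(self, query: str, response: str) -> None:
        self.entries.append((embed(query), response))


cache = SemanticCache()
cache.put("how do i reset my password", "Use the 'Forgot password' link.")
print(cache.get("how do i reset my password please"))  # similar query: reused
print(cache.get("what is your refund policy"))          # unrelated: miss
```

A threshold that is too low returns wrong answers to merely similar questions, so it is worth validating the cutoff on real traffic before trusting cache hits.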

Monitor and Set Budgets

Proactive cost management prevents surprise bills:

- Set up usage alerts and spending limits in provider dashboards

- Keep an eye on token counts for each feature, user, or workflow

- Add rate limiting and user quotas right in your app

- Monitor usage patterns each week and change your approach as needed
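
Per-feature tracking can begin with a small in-process accumulator. The sketch below is one possible shape; the `UsageTracker` class, the 80% alert threshold, the feature names, and the token volumes are hypothetical, and the rates reuse the $3/$15 per million figures from earlier as an example.

```python
from collections import defaultdict


class UsageTracker:
    """Accumulate spend per feature and flag when a budget is nearly used.

    Assumption: rates are USD per million tokens and the budget is monthly.
    """

    def __init__(self, input_rate: float, output_rate: float, budget_usd: float):
        self.input_rate = input_rate
        self.output_rate = output_rate
        self.budget_usd = budget_usd
        self.spend = defaultdict(float)

    def record(self, feature: str, input_tokens: int, output_tokens: int) -> None:
        cost = (input_tokens * self.input_rate
                + output_tokens * self.output_rate) / 1_000_000
        self.spend[feature] += cost

    def total(self) -> float:
        return sum(self.spend.values())

    def near_budget(self, alert_fraction: float = 0.8) -> bool:
        """True once total spend reaches the alert fraction of the budget."""
        return self.total() >= self.budget_usd * alert_fraction


tracker = UsageTracker(3.0, 15.0, budget_usd=100.0)
tracker.record("search", 2_000_000, 200_000)         # $6 input + $3 output
tracker.record("summaries", 10_000_000, 3_000_000)   # $30 input + $45 output
print(round(tracker.total(), 2), tracker.near_budget())
```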

Consider Fine-Tuning for High-Volume Use Cases

For high-volume applications, fine-tuning can lower costs:

- Fine-tuned models work with shorter prompts because you no longer need few-shot examples

- Domain-specific tuning can reduce prompt length by 50% or more

- ROI typically becomes positive after 5+ million tokens of usage

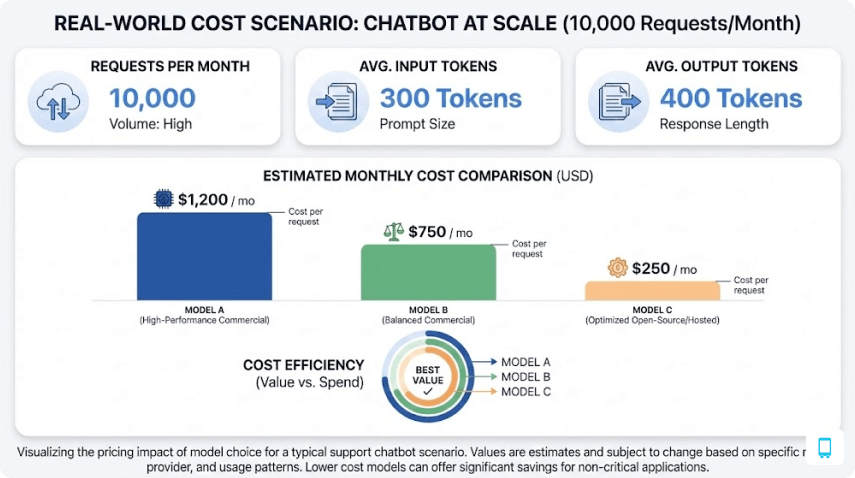

Real-World Cost Calculation Examples

Understanding theoretical pricing is important, but seeing how costs accumulate in real scenarios provides practical insight for budget planning. The following examples demonstrate typical usage patterns and their associated costs.

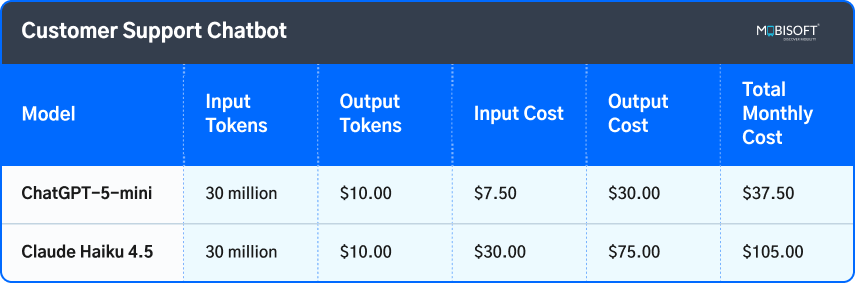

Example 1: Customer Support Chatbot

Consider a customer support chatbot handling 50,000 questions each month. Each interaction uses a system prompt of about 500 tokens, a user query averaging 100 tokens, and a response of around 300 tokens. That adds up to 30 million input tokens and 15 million output tokens per month.
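
The monthly bill for this chatbot depends on the model chosen, which the example does not specify. The sketch below assumes Claude Sonnet 4.5 list rates ($3 input, $15 output per million tokens) purely for illustration and ignores caching and batch discounts.

```python
QUERIES = 50_000
INPUT_PER_QUERY = 500 + 100   # system prompt + user query
OUTPUT_PER_QUERY = 300
# Assumption: Claude Sonnet 4.5 list rates; swap in your own model's rates.
INPUT_RATE, OUTPUT_RATE = 3.00, 15.00  # USD per million tokens

input_tokens = QUERIES * INPUT_PER_QUERY     # 30,000,000
output_tokens = QUERIES * OUTPUT_PER_QUERY   # 15,000,000
monthly_cost = (input_tokens * INPUT_RATE + output_tokens * OUTPUT_RATE) / 1_000_000
print(f"${monthly_cost:,.2f} per month")
```

Under these assumptions, output accounts for most of the bill even though it is only a third of the tokens, which is why response length is the first lever to check. The 500-token system prompt is identical on every call, so it is also a natural candidate for prompt caching.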

Example 2: Document Analysis Pipeline

A legal technology company processes 1,000 contracts per month, each averaging 10,000 tokens. They extract key terms and generate summaries of approximately 500 tokens per document.

Standard Processing with Claude Sonnet 4.5:

- Input tokens: 1,000 × 10,000 = 10 million tokens at $3.00/M = $30.00

- Output tokens: 1,000 × 500 = 500,000 tokens at $15.00/M = $7.50

- Standard monthly cost: $37.50

With Batch Processing (50% discount):

- Batch monthly cost: $18.75 (saving $18.75)

With Prompt Caching (using shared analysis instructions):

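If 2,000 tokens of each prompt are shared system instructions that can be cached, and 80% of requests hit the cache, then 1.6 million input tokens are billed at $0.30/M instead of $3.00/M. That saves approximately $4.32 (ignoring the cache write premium), bringing the effective monthly cost to around $33.18 for standard processing. Combining caching with batch processing yields the maximum savings.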
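
The figures in this example can be reproduced with a few lines of arithmetic. The sketch below follows the example's own simplification of ignoring the cache write premium; the variable names are illustrative.

```python
CONTRACTS = 1_000
INPUT_RATE, OUTPUT_RATE = 3.00, 15.00  # USD per million tokens

input_cost = CONTRACTS * 10_000 * INPUT_RATE / 1_000_000   # 10M input tokens
output_cost = CONTRACTS * 500 * OUTPUT_RATE / 1_000_000    # 500K output tokens
standard = input_cost + output_cost
batch = standard * 0.5                                     # 50% batch discount

# 2,000 cacheable tokens per contract, 80% of requests hit the cache.
# Simplification: the cache write premium is ignored, as in the example.
cached_tokens = CONTRACTS * 2_000 * 0.80
cache_savings = cached_tokens * (INPUT_RATE - INPUT_RATE * 0.10) / 1_000_000
with_cache = standard - cache_savings

print(f"standard ${standard:.2f}, batch ${batch:.2f}, "
      f"cache savings ${cache_savings:.2f}, with cache ${with_cache:.2f}")
```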

Future Trends in LLM Pricing

The LLM API pricing landscape is changing quickly, driven by better technology, more competition, and shifting user demand. Understanding these trends helps organizations plan their future AI spending.

Continued Price Reductions

Historical data shows consistent price reductions across the industry. Industry analysts expect this trend to continue, with 20-30% annual price reductions becoming standard as:

- Hardware efficiency improves with specialized AI chips

- Model architectures become more efficient (Mixture-of-Experts, speculative decoding)

- Competition from open-source models pressures commercial pricing

- Infrastructure scale increases across all providers

Emergence of Reasoning Token Economics

As reasoning models like OpenAI's o3 and Claude with extended thinking become mainstream, new pricing dimensions are emerging. Thinking tokens can multiply output costs by 10-30× for complex tasks. Providers are developing various approaches to bill for reasoning:

- Separate thinking token rates (typically lower than output rates)

- Effort or budget controls that let users cap reasoning depth

- Tiered pricing based on problem complexity

Model Optimizer and Dynamic Pricing

Google's Vertex AI Model Optimizer represents an emerging paradigm where users specify desired outcomes (cost, quality, or balance) rather than specific models. The system automatically routes requests to appropriate models, applying dynamic pricing based on actual compute used. This approach may become more common as providers seek to optimize resource utilization while simplifying user decisions.

Choosing the Right Provider

Selecting an LLM provider involves balancing multiple factors beyond raw pricing. Here is a framework for making informed decisions.

Capability Requirements

Different providers excel in different areas:

- OpenAI: Leading in multimodal capabilities, reasoning models, and ecosystem integration

- Anthropic (Claude): Excels in safety, long-context handling, coding, and agentic workflows

- Google (Gemini): Strong in multimodal understanding, Google Cloud integration, and search grounding

- xAI (Grok): Optimized for reasoning, real-time information, and X platform integration

Total Cost of Ownership

Per-token pricing is only part of the equation. Consider:

- Integration complexity and developer time

- Availability of batch processing and caching features

- Rate limits and their impact on your throughput requirements

- Quality at your specific tasks (benchmark on your actual use cases)

- Support, SLAs, and enterprise features if needed

Multi-Provider Strategies

Many production systems use multiple providers to optimize for different scenarios:

- Use the cheapest model that meets quality requirements for each task type

- Maintain fallback providers for redundancy and uptime

- Route specific task types to providers with strengths in those areas

- Use abstraction layers (like LiteLLM or OpenRouter) to simplify multi-provider management

Conclusion

LLM API pricing in 2025 reflects a maturing market. Growing competition is pushing costs down even as the models themselves get more capable. Grasping the token-based billing model is key: the distinctions between input and output tokens, standard versus batch processing, and the savings from prompt caching. This understanding lets developers and businesses make informed decisions and balance what's possible with what's affordable.

The AI industry changes constantly, and staying current on pricing, updates, and new features is essential to maintaining cost-effective AI operations. The providers discussed here (OpenAI, Anthropic, Google, and xAI) all update their pricing and introduce new models often, so bookmark their official pricing pages and review them every quarter. This simple habit keeps your cost strategies current.

By applying the strategies from this guide, organizations can achieve cost reductions of 50% to 90% while maintaining, or even improving, the quality of their AI applications. The future of AI isn't just about raw capability. It's increasingly about efficient, sustainable deployment that delivers real value without straining your budget.

Key Takeaways

- Master token-based billing, where output tokens cost significantly more than inputs due to the intensive computation required for text generation.

- Strategically route tasks by complexity, using smaller models for simple jobs and reserving powerful models for reasoning and high-stakes creation.

- Implement prompt caching to slash input costs by reusing processed static content instead of recalculating it for every single request.

- Leverage batch processing for a fifty percent discount on workloads where you can tolerate a longer turnaround time.

- Control output length actively through API parameters and clear instructions, as these tokens have the greatest impact on your final bill.

- Factor in higher token consumption for non-English languages to avoid unexpected budget overruns on international products.

- Look beyond per-token prices to evaluate critical operational factors like rate limits, regional latency, and debugging tools.

- Continuously monitor usage and benchmark new models, as the landscape evolves with frequent price changes and capability improvements.

FAQs

How do we accurately forecast our monthly LLM API budget when usage is so variable?

Start by instrumenting your application to log token counts for every single call, separating inputs from outputs. Analyze historical data to find usage patterns per user or feature. Then, build a simple model based on active users and core actions. It is never perfect, but this approach gets you much closer than just guessing. Always include a 15-20 percent buffer for unexpected growth.
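
A forecast of this kind can be as simple as the sketch below. The function name, parameter names, user counts, and per-action token averages are hypothetical; the rates reuse the $3/$15 per million figures from earlier as an example, and the 20% buffer matches the upper end of the range suggested above.

```python
def forecast_monthly_cost(active_users: int, actions_per_user: float,
                          input_tokens_per_action: float,
                          output_tokens_per_action: float,
                          input_rate: float, output_rate: float,
                          buffer: float = 0.20) -> float:
    """Forecast monthly spend in USD, including a growth buffer.

    Assumption: per-action token averages come from your own logs;
    rates are USD per million tokens.
    """
    actions = active_users * actions_per_user
    per_action = (input_tokens_per_action * input_rate
                  + output_tokens_per_action * output_rate)
    base = actions * per_action / 1_000_000
    return base * (1 + buffer)


# Hypothetical inputs: 2,000 users, 40 actions each, 800 in / 250 out tokens.
estimate = forecast_monthly_cost(2_000, 40, 800, 250, 3.0, 15.0)
print(f"${estimate:,.2f}")
```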

We operate in European languages. How does tokenization impact our cost structure compared to English?

It often creates a significant cost increase. Non-English languages, especially those with complex grammar or non-Latin scripts, can require two to three times more tokens per word. This directly inflates your bill. You must benchmark your actual text in the target language against English equivalents. Factor this multiplier into your initial pricing calculations to avoid budget surprises later.

When does it become financially sensible to fine-tune a model instead of using clever prompting?

Fine-tuning becomes a compelling option when you have a high-volume, specialized task. The break-even point typically arrives after you have spent several thousand dollars on API calls for that specific use case. If you are constantly repeating the same lengthy instructions or examples in your prompt, fine-tuning can strip those out, leading to much cheaper and faster requests over time.

What are the less obvious pitfalls when trying to control output length with max_tokens?

Setting a hard limit can sometimes truncate a crucial thought, rendering the entire response useless. The model does not know it is being cut off. A more effective technique is to explicitly instruct the model for brevity within the prompt itself. Ask for bullet points or a three-sentence summary. This maintains coherence while still conserving expensive output tokens.

Prompt caching sounds ideal, but what commonly causes cache misses in practice?

The biggest culprit is minor, unpredictable changes in your prompt. A single extra space, a different punctuation mark, or even a swapped synonym can break the match. Inconsistent user session data or timestamps woven into the prefix will also invalidate the cache. Achieving a high hit rate demands rigorous prompt templating and separating dynamic variables from your static instructions.

Beyond the per-token price, what hidden factors should we compare between providers?

You must evaluate their rate limits and throttling policies, as these can bottleneck your application during peak traffic. Also, assess the latency for your specific region, as slower response times can degrade user experience. Finally, consider the maturity of their debugging tools and the clarity of their usage analytics. These operational factors greatly influence the total LLM API cost of development and maintenance.

How do 'thinking tokens' in reasoning models change how we design an application?

They introduce a new cost variable that is much less predictable. You might send a short query, but pay for thousands of internal reasoning tokens. This makes it essential to implement cost tracking at the user action level, not just by request count. It also encourages designing workflows that use reasoning models selectively, for only the most complex problems that truly justify the extra computational depth.

December 4, 2025
