Most web applications don’t fail because features are missing. They fail because early architectural decisions were made for functionality, not scale. In scalable web application development, the real challenge is anticipating growth before traffic exposes weakness. At low traffic, almost any system looks competent, but every shortcut resurfaces at enterprise scale: first as latency, then as instability, and finally as costly rewrites that nobody budgeted for.

Today's traffic patterns compound the issue. They mix sustained enterprise loads with unpredictable spikes. Choosing a development partner, therefore, becomes less about frameworks and more about foundational philosophy for web application scalability. You need a team that architects for the failure modes specific to scale.

The infrastructure trade-offs explored here separate applications that grow from those that collapse. This matters because downtime during peak traffic doesn't just pause transactions. It permanently damages brand trust and competitive position. Getting this architecture wrong has a lasting cost within any serious digital transformation strategy.

To turn architectural foresight into execution, explore how enterprise web development solutions are designed to support scalability, security, and long-term business growth.

Traffic Has Changed. Most Architectures Haven’t.

The nature of traffic has fundamentally diverged from the architectural patterns still in common use. Contemporary systems must accommodate a persistent baseline load while also absorbing sudden, unpredictable surges from global events or commercial initiatives, all within the strict guardrails of geographic data compliance that modern scalable web architecture must support.

Yet many prevailing architectures remain anchored to an outdated premise of linear growth. This creates a profound mismatch. When confronted with real-world volatility, these systems reveal their constraints. Database connection pools run dry, monolithic servers become single points of failure, and latency spirals. The cost here is operational and tangible.

Moving forward requires intentionality. The solution lies in foundational principles like stateless design and deliberate horizontal scaling, concepts that must be embedded from the outset. Treating scalability as a future retrofit is a significant strategic risk. Building for modern traffic means prioritizing resilient architecture as a core business requirement, not a technical footnote.

Aligning modern traffic demands with business goals is easier with custom software development services that balance performance, resilience, and operational efficiency.

Why Vertical Scaling Always Breaks First

Scaling vertically by upgrading a single server is the instinctive first move. It seems simpler, yet it hits a hard physical limit in memory and processing cores. Enterprise traffic also demands geographic distribution, data residency compliance, and inherent fault tolerance. A single server cannot provide any of these, however large it is.

The Horizontal Mandate

True scalability requires horizontal scaling across many servers, a core principle of scalable web application development. This mandates a stateless application design, distributed session management, and coordinated deployments. It is a fundamental architectural shift.

Strategic Cost Implications

The cost model changes as well. Vertical scaling gets disproportionately expensive, because each step up requires a larger, premium-priced server. Proper horizontal architecture aims for cost that grows no faster than traffic, and ideally more slowly. When a development service defaults to vertical scaling, it prioritises short-term simplicity over long-term, resilient scale. This is a critical differentiator for enterprises.

When scale requires speed and flexibility, leveraging outsourced software development helps extend capability without sacrificing architectural discipline.

Horizontal Scaling Is Not an Optimization - It’s a Design Mandate

Choosing horizontal scaling is not merely an infrastructure preference. It represents a profound architectural philosophy within scalable web application development that shapes every subsequent decision. This approach fundamentally redefines how systems are built to endure.

Architectural Non-Negotiables

To scale horizontally, applications must be stateless by design in any serious scalable web architecture. User sessions cannot reside locally; they require externalized management in dedicated stores like Redis. Deployments themselves must become coordinated, repeatable events across dozens of instances. These are not enhancements. They are prerequisites for survival under distributed loads and sustained web application scalability.
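
As a rough illustration of externalized sessions, the sketch below shows two application instances serving the same user without holding any per-user state themselves. The `SessionStore` interface, the `InMemorySessionStore` stand-in, and the `AppInstance` class are hypothetical names invented for this example; in production the store would be a networked service such as Redis rather than a Python dictionary.

```python
from typing import Dict, List, Optional, Protocol


class SessionStore(Protocol):
    """Anything that can persist session data outside the app process."""

    def get(self, session_id: str) -> Optional[Dict[str, str]]: ...

    def set(self, session_id: str, data: Dict[str, str]) -> None: ...


class InMemorySessionStore:
    """Stand-in for an external store such as Redis (assumption, for illustration only)."""

    def __init__(self) -> None:
        self._data: Dict[str, Dict[str, str]] = {}

    def get(self, session_id: str) -> Optional[Dict[str, str]]:
        found = self._data.get(session_id)
        return dict(found) if found is not None else None

    def set(self, session_id: str, data: Dict[str, str]) -> None:
        self._data[session_id] = dict(data)


class AppInstance:
    """One stateless application instance: it keeps no per-user state locally."""

    def __init__(self, name: str, store: SessionStore) -> None:
        self.name = name
        self.store = store

    def add_to_cart(self, session_id: str, item: str) -> None:
        # Read, modify, and write back through the shared store on every request.
        session = self.store.get(session_id) or {}
        cart = session.get("cart", "")
        session["cart"] = f"{cart},{item}" if cart else item
        self.store.set(session_id, session)

    def view_cart(self, session_id: str) -> List[str]:
        session = self.store.get(session_id) or {}
        cart = session.get("cart", "")
        return cart.split(",") if cart else []


# Two instances behind a load balancer share one external store.
shared_store = InMemorySessionStore()
instance_a = AppInstance("a", shared_store)
instance_b = AppInstance("b", shared_store)

instance_a.add_to_cart("session-123", "keyboard")
instance_b.add_to_cart("session-123", "monitor")  # a different instance handles the next request
cart_seen_by_a = instance_a.view_cart("session-123")
```

Because neither instance owns the session, either one can be added, removed, or restarted without the user losing a cart. A real store would also need expiry and protection against concurrent read-modify-write races, which this sketch leaves out.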

Strategic Cost Implications

Vertical scaling follows a steep cost trajectory, where each capacity increase demands a significantly more expensive server. In contrast, a proper horizontal architecture aims for cost that grows no faster than traffic, and ideally more slowly. You add modest, commoditized units of capacity, not premium hardware. Teams defaulting to vertical scaling often prioritize short-term simplicity. Teams designing horizontally are investing in long-term economic and operational resilience.

A Core Commitment

Ultimately, this is about foresight. It is a commitment to building systems that can withstand uncertainty by design, not by frantic adjustment later. The architecture you begin with is the one you scale with. Making horizontal scaling a core mandate from the outset is perhaps the most decisive choice for an application’s future and long-term business scalability strategy.

Scalability becomes sustainable when guided by clear digital transformation strategies that connect architecture decisions with long-term business innovation.

Why Slow Enterprise Applications Quietly Destroy Value

Users abandon slow tools, even internal ones. A response time of around three seconds is a commonly cited limit of user patience, and enterprise apps often breach it because of complex permission checks, deep database joins, and legacy integrations. This is not a minor inconvenience: at scale, latency directly costs revenue.

The Performance Budget

Architecting for speed requires a performance budget from day one, not optimization as an afterthought. This means defining Core Web Vitals targets during initial sprints and monitoring them as core metrics.

Architecture for Speed

The solution is layered. Implement edge computing for dynamic logic, not just static files. Use intelligent caching strategies at multiple levels. Shift non-critical processes to asynchronous queues. A development partner must treat this as a foundational requirement, not a final polish.

Performance Is a Budget, Not a Post-Launch Fix

High-performing systems are fast by design, not by after-the-fact optimization. This requires a fundamental shift in how performance is planned and measured from the very beginning.

That requires:

- Explicit performance budgets defined during the initial sprint, setting clear limits for key metrics.

- Core Web Vitals treated as primary success criteria, monitored as rigorously as feature completion.

- Continuous measurement and reporting, replacing reactive tuning with proactive governance.
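
One way to make the budget in the list above enforceable is to encode it as data and check measurements against it in the build pipeline. The sketch below is illustrative only: the `Budget` class and `check_budgets` function are hypothetical names, and the limits are the "good" thresholds Google publishes for Core Web Vitals (LCP 2.5 s, INP 200 ms, CLS 0.1). A team would substitute its own agreed limits.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class Budget:
    metric: str
    limit: float
    unit: str


# Assumed limits: Google's published "good" thresholds for Core Web Vitals.
DEFAULT_BUDGETS = (
    Budget("LCP", 2.5, "s"),   # Largest Contentful Paint
    Budget("INP", 200, "ms"),  # Interaction to Next Paint
    Budget("CLS", 0.1, ""),    # Cumulative Layout Shift (unitless)
)


def check_budgets(
    measured: Dict[str, float],
    budgets: Sequence[Budget] = DEFAULT_BUDGETS,
) -> List[str]:
    """Return one human-readable violation per metric that is missing or over budget."""
    violations = []
    for budget in budgets:
        value = measured.get(budget.metric)
        if value is None:
            violations.append(f"{budget.metric}: no measurement reported")
        elif value > budget.limit:
            violations.append(
                f"{budget.metric}: {value}{budget.unit} exceeds budget of "
                f"{budget.limit}{budget.unit}"
            )
    return violations


# A build step could fail the pipeline whenever this list is non-empty.
within_budget = check_budgets({"LCP": 2.1, "INP": 180, "CLS": 0.05})
over_budget = check_budgets({"LCP": 3.4, "INP": 180})
```

Treating a missing measurement as a violation is a deliberate choice here: a metric nobody reports is a budget nobody is keeping.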

Speed comes from architecture in cloud-native web applications:

- Running dynamic logic at the edge, closer to users, not just serving static files from a CDN.

- Implementing intelligent, multi-layer caching strategies for both data and presentation.

- Designing asynchronous pathways for non-critical processes like notifications or data aggregation.
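
The asynchronous pathway in the last item can be sketched with Python's standard `asyncio.Queue`. This is a minimal, single-process illustration with hypothetical function names (`place_order`, `notification_worker`); a production system would typically use a durable message broker so queued work survives a restart.

```python
import asyncio
from typing import List, Tuple


async def notification_worker(queue: asyncio.Queue, sent: List[str]) -> None:
    """Drain non-critical work in the background."""
    while True:
        message = await queue.get()
        try:
            await asyncio.sleep(0.01)  # simulates a slow email or push provider
            sent.append(message)
        finally:
            queue.task_done()


async def place_order(order_id: str, queue: asyncio.Queue) -> str:
    """Critical path: confirm the order, then enqueue the receipt instead of sending it inline."""
    confirmation = f"order {order_id} confirmed"
    queue.put_nowait(f"email receipt for {order_id}")
    return confirmation


async def main() -> Tuple[str, int, List[str]]:
    queue: asyncio.Queue = asyncio.Queue()
    sent: List[str] = []
    worker = asyncio.create_task(notification_worker(queue, sent))

    response = await place_order("A-1", queue)
    sent_at_response_time = len(sent)  # the user already has a response at this point

    await queue.join()  # only for the demo: wait until background work finishes
    worker.cancel()
    return response, sent_at_response_time, sent


response, sent_at_response_time, sent = asyncio.run(main())
```

The user-facing response is produced before the notification is delivered, so a slow provider delays the email rather than the checkout.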

A team that treats performance as final polish is planning for post-launch failure. By embedding these budgets and architectural choices early, you ensure speed is a core deliverable, not an expensive retrofit.

Much of that performance depends on infrastructure, and cloud computing services for scalable applications enable speed and elasticity across modern environments.

The Database Is Where Most Scalable Systems Break

There is a fundamental asymmetry that undermines many applications. Your application server can spin up thousands of threads to handle concurrent users. Your database, however, has a hard limit on concurrent connections, often between 100 and 500. This invisible ceiling is where high-traffic systems falter.

The N+1 Query Trap

The problem is magnified by inefficient code. The N+1 query pattern, where one query fetches a list and a loop then issues a further database call for each item, performs acceptably with small datasets. At enterprise scale, it can generate thousands of superfluous queries, crippling the database. At that point, query design is an architectural concern, not a tuning detail.
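
The difference is easy to see in a small sketch using Python's built-in `sqlite3` module. The `customers` and `orders` tables and their rows are invented for this example; the point is only the number of round trips each approach makes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
    INSERT INTO orders VALUES (1, 1, 10.0), (2, 1, 5.0), (3, 2, 7.5);
    """
)

query_count = 0


def run(sql, params=()):
    """Execute a query and count it, so the two approaches can be compared."""
    global query_count
    query_count += 1
    return conn.execute(sql, params).fetchall()


def totals_n_plus_one():
    """One query for the list, then one more query per customer."""
    totals = {}
    for customer_id, name in run("SELECT id, name FROM customers ORDER BY id"):
        rows = run(
            "SELECT COALESCE(SUM(total), 0) FROM orders WHERE customer_id = ?",
            (customer_id,),
        )
        totals[name] = rows[0][0]
    return totals


def totals_single_query():
    """The same result from a single aggregated JOIN."""
    rows = run(
        """
        SELECT c.name, COALESCE(SUM(o.total), 0)
        FROM customers c
        LEFT JOIN orders o ON o.customer_id = c.id
        GROUP BY c.id, c.name
        """
    )
    return dict(rows)


query_count = 0
slow = totals_n_plus_one()
n_plus_one_queries = query_count  # 1 + number of customers

query_count = 0
fast = totals_single_query()
single_queries = query_count
```

With three customers the loop issues four queries and the JOIN issues one. With fifty thousand customers the loop issues fifty thousand and one, while the JOIN still issues one.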

Architectural Mitigations

Solving this requires deliberate database architecture. Strategic connection pooling is essential, acting as a buffer between server demand and database limits. Beyond that, you must distribute the load. Read replicas offload query traffic, while a write-through cache serves frequently read data without touching the database. The mark of a seasoned development team is how they approach this during design. They should articulate clear strategies for pool sizing, query optimization, and geographic distribution of database instances to reduce latency.
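
To make the buffering role of a pool concrete, here is a minimal sketch built on the standard library's `queue.Queue`. The `ConnectionPool` class is hypothetical and uses in-memory SQLite connections as placeholders; real deployments normally rely on the pooling provided by their database driver or a dedicated proxy.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """A fixed-size pool: callers wait briefly for a connection instead of opening new ones."""

    def __init__(self, size: int, timeout_seconds: float) -> None:
        self._timeout = timeout_seconds
        self._idle: queue.Queue = queue.Queue(maxsize=size)
        for _ in range(size):
            # Placeholder connections; a real pool would connect to the production database.
            self._idle.put(sqlite3.connect(":memory:", check_same_thread=False))

    @contextmanager
    def connection(self):
        try:
            conn = self._idle.get(timeout=self._timeout)
        except queue.Empty:
            # Fail fast rather than pile more load onto a saturated database.
            raise TimeoutError("no database connection available") from None
        try:
            yield conn
        finally:
            self._idle.put(conn)  # always return the connection to the pool


pool = ConnectionPool(size=2, timeout_seconds=0.05)

# Hold both connections, then show that a third caller is refused after the timeout.
with pool.connection() as first, pool.connection() as second:
    try:
        with pool.connection():
            third_acquired = True
    except TimeoutError:
        third_acquired = False

# Once connections are returned, new callers succeed again.
with pool.connection() as conn:
    value = conn.execute("SELECT 1").fetchone()[0]
```

The pool size is the number the database actually sees, no matter how many application threads are waiting. Sizing it is therefore a capacity decision, not a default to accept.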

If legacy platforms struggle under load, applying application modernization best practices helps rebuild data layers for performance and scale.

Microservices Don’t Eliminate Complexity - They Redistribute It

Microservices promise independent scaling, a compelling vision in scalable web application development. Yet enterprise applications rarely function on stateless logic alone. Shopping carts, user sessions, and multi-step workflows require state. This creates a paradox. How do you maintain coherence when a single user's journey spans dozens of independent, geographically dispersed services?

The Stateful Reality

The stateless service is a scaling dream, but business logic is inherently stateful. The challenge shifts from avoiding state to distributing it intelligently across a scalable web architecture. You need strategies for session storage, data caching, and transaction tracking that work across service boundaries.

Consistency and Compromise

This leads to the critical debate around consistency models. Eventual consistency works for many features, like updating a user profile. But enterprise domains like financial transactions or inventory management often demand strong consistency. Your development partner must articulate a clear strategy for both, using patterns like event sourcing for auditability or sagas for distributed transactions. A vague answer here reveals a lack of production experience with truly distributed systems that affect web application scalability.
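
The saga idea mentioned above can be outlined in a few lines: each step carries a compensating action, and a failure triggers the compensations for every step that already succeeded, in reverse order. The `run_saga` function and the order steps below are hypothetical and run in one process; a real saga coordinates separate services and must persist its progress.

```python
from typing import Callable, List, Tuple

# (step name, action, compensating action)
Step = Tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step]) -> List[str]:
    """Run steps in order; on failure, undo completed steps in reverse order."""
    log: List[str] = []
    completed: List[Tuple[str, Callable[[], None]]] = []
    for name, action, compensate in steps:
        try:
            action()
        except Exception:
            log.append(f"failed:{name}")
            for done_name, undo in reversed(completed):
                undo()
                log.append(f"compensated:{done_name}")
            break
        log.append(f"done:{name}")
        completed.append((name, compensate))
    return log


# Illustrative state that would normally live in separate services.
state = {"stock": 5, "shipped": False}


def reserve_inventory() -> None:
    state["stock"] -= 1


def release_inventory() -> None:
    state["stock"] += 1


def charge_payment() -> None:
    raise RuntimeError("card declined")  # simulated failure


def refund_payment() -> None:
    pass


def ship_order() -> None:
    state["shipped"] = True


def cancel_shipment() -> None:
    state["shipped"] = False


saga_log = run_saga(
    [
        ("reserve_inventory", reserve_inventory, release_inventory),
        ("charge_payment", charge_payment, refund_payment),
        ("ship_order", ship_order, cancel_shipment),
    ]
)
```

In this run the payment step fails, so the inventory reservation is released and shipping never starts. The system ends in a consistent state without a distributed lock or a cross-service transaction.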

Managing distributed sessions effectively often depends on implementing Redis for high-performance web applications to keep data access fast and consistent across services.

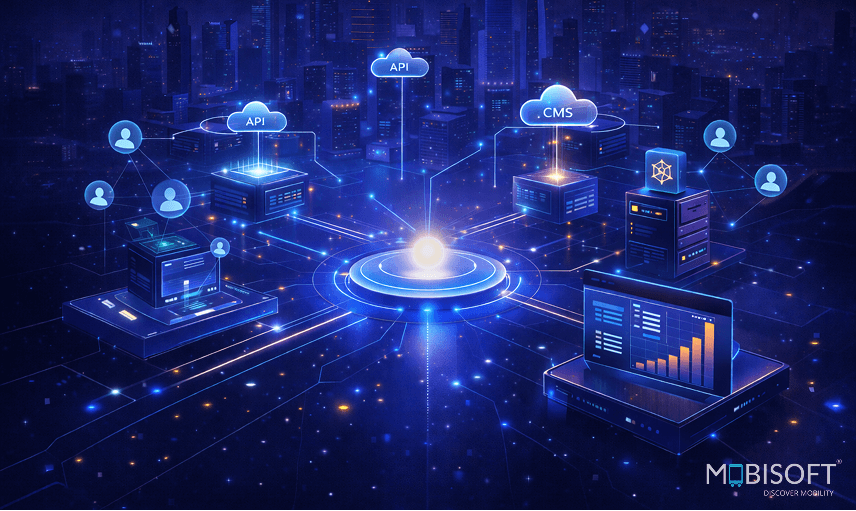

Integration Is the Most Fragile Layer - and the Most Ignored

Enterprise systems connect to a vast ecosystem of external services. This integration layer, while necessary, becomes the primary source of latency and systemic fragility if not architected with intent.

- External API calls are not instant. Each can add 100-500 ms of latency, and these delays add up when calls run sequentially within a single request.

- Cascading failures occur when a slow third-party service ties up your application threads, so the slowdown backs up into your own systems.

- Implement the circuit breaker pattern. It fails fast when an integration is unresponsive, protecting your application's stability.

- Use the bulkhead pattern to isolate integration points. A failure in your payment service should not impact user search functionality.

- Every integration requires a defined timeout and a fallback mechanism, even if it's a degraded user experience.

- Observability must track external dependencies. Monitor p95 and p99 latency for all APIs to detect degradation early.

- Respect downstream rate limits in your design. Your scaling should not trigger throttling from a partner's API.

- Stale data is sometimes acceptable. A cached, slightly old response is often better than a fresh timeout error for users.
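
The circuit breaker and fallback items in the list above can be sketched together. The `CircuitBreaker` class below is a simplified, single-threaded illustration with hypothetical names and thresholds. Production code would usually use an established resilience library and add per-call timeouts and thread safety.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Stop calling a failing dependency for a while and serve a fallback instead."""

    def __init__(
        self,
        failure_threshold: int = 3,
        reset_after_seconds: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after_seconds = reset_after_seconds
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, operation: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after_seconds:
                return fallback()  # open: fail fast without touching the dependency
            # Otherwise half-open: let one trial call through below.
        try:
            result = operation()
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open (or re-open) the circuit
            return fallback()
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result


vendor_calls = {"count": 0}


def flaky_vendor_price() -> str:
    vendor_calls["count"] += 1
    raise ConnectionError("vendor timed out")  # simulated outage


def cached_price() -> str:
    return "cached price"  # slightly stale, but better than an error


breaker = CircuitBreaker(failure_threshold=2, reset_after_seconds=60.0)
results = [breaker.call(flaky_vendor_price, cached_price) for _ in range(5)]
```

Of the five requests, only the first two reach the vendor. The remaining three are answered from the fallback immediately, which keeps application threads free while the dependency recovers.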

Choosing a development partner hinges on their approach to these points. Their default patterns must assume external systems will fail, building resilience in from the start for high-performance web applications.

Elasticity Without Guardrails Is Financial Risk

While auto-scaling responds to unpredictable traffic, implementing it without strategic boundaries transforms a technical feature into a significant financial liability. Pure reactive scaling ignores the ecosystem of dependencies and hard limits that govern real-world systems.

True elasticity considers:

- Platform Constraints: The finite concurrent connections of your database and the quotas of third-party APIs.

- Operational Realities: Licensing costs that scale per-instance and the latency penalty of cold starts.

- Fiscal Discipline: Predictive scaling based on traffic patterns, and the imperative to scale down as aggressively as you scale up.
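
As a simple illustration of a guardrail, the function below caps the instance count by what the database can actually serve rather than by traffic alone. The function name, its parameters, and all the figures in the example calls are assumptions made for this sketch, not recommendations.

```python
import math


def desired_instances(
    requests_per_second: float,
    rps_per_instance: float,
    db_max_connections: int,
    pool_size_per_instance: int,
    min_instances: int = 2,
    max_instances: int = 50,
) -> int:
    """Instances needed for the traffic, bounded by a cost cap and the database's connection limit."""
    needed = math.ceil(requests_per_second / rps_per_instance)
    # Each instance holds a connection pool, so the database limits how many can run.
    db_ceiling = db_max_connections // pool_size_per_instance
    upper = min(max_instances, db_ceiling)
    lower = min(min_instances, upper)
    return min(max(needed, lower), upper)


# Hypothetical figures: 100 requests/s per instance, 300 database connections, pools of 20.
normal_load = desired_instances(900, 100, 300, 20)
viral_spike = desired_instances(5000, 100, 300, 20)
quiet_night = desired_instances(50, 100, 300, 20)
```

During the spike the traffic alone would justify fifty instances, but the database can only support fifteen pools. Scaling past that point would add cost and connection errors rather than capacity, so the remaining demand has to be handled by caching, queuing, or throttling.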

Without these guardrails, auto-scaling can add application instances faster than the components behind them can follow, causing collapse under load. True resilience requires elasticity that is as cost-aware as it is performance-conscious.

Observability Is the Nervous System of Scaled Platforms

At high traffic, problems evolve faster than humans can diagnose. You cannot troubleshoot what you cannot see. This moves observability from a utility to the central nervous system of your application.

- Monitoring tells you something is wrong. Observability explains why it happened, especially under novel conditions.

- Billions of daily metrics and logs outstrip manual review. Automated, often AI-driven, analysis is needed to spot subtle anomalies and correlations before they cause outages.

- Implement distributed tracing to follow a single request across every microservice, database call, and external API.

- Define Service Level Objectives (SLOs) for user experience, not just server uptime. They guide development and investment.

- Alert fatigue paralyzes teams. Consolidate tools and focus alerts on symptoms that truly impact business outcomes.

- Structure logs for machines first. This enables automated parsing and rapid root cause identification during incidents.

- Correlate technical performance with business metrics. A payment service latency increase should link to a drop in conversion.
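
The "structure logs for machines first" item can be illustrated with Python's standard `logging` and `json` modules. The `JsonFormatter` class and the field names (`trace_id`, `latency_ms`, `service`) are hypothetical choices for this sketch; the principle is that every log line is a parseable record that carries a trace identifier.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Emit each record as a single JSON object instead of free-form text."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured fields passed via `extra={"context": {...}}` are merged in.
        payload.update(getattr(record, "context", {}))
        return json.dumps(payload, sort_keys=True)


stream = io.StringIO()  # stands in for stdout or a log shipper
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("checkout")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

logger.info(
    "payment authorized",
    extra={"context": {"trace_id": "abc123", "latency_ms": 182, "service": "payments"}},
)

# Downstream tooling can parse the line without regular expressions.
parsed = json.loads(stream.getvalue())
```

Because `trace_id` appears in every record, log lines from different services can be joined to follow one request, and `latency_ms` can be aggregated directly into the p95 and p99 figures that alerts depend on.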

Therefore, your development partner must treat observability as a first-class citizen from the initial design session, not a post-launch addition. It is the foundation of operational confidence at scale.

Scale Is Proven Under Stress, Not on a Roadmap

Ultimately, building for scale means pressure-testing your architecture against reality, not against a roadmap. The wrong database connection strategy will strangle your application during a flash sale. Poor state management will corrupt user sessions across your microservices. If your integrations lack timeouts, a single slow vendor API will become your system's bottleneck.

These are not hypothetical concerns. They are the documented failure modes of systems that prioritize features over foundations. The development partner you choose must demonstrate that they can architect for these specific breakdowns in a scalable web architecture. They should show you their patterns for circuit breakers, distributed caching, and predictive auto-scaling before they write a line of your business logic.

This approach defines the new standard and turns your infrastructure into a genuine competitive moat. When your platform remains stable and fast while others falter, that is the most tangible business advantage of all.

The Real Differentiator

Ultimately, any skilled team can deliver a set of features. The defining difference lies in building systems that not only function but also endure under genuine pressure.

This distinction transforms development from a technical task into a matter of architectural judgment and foundational philosophy. The right partner focuses on engineering resilience, designing for failure states, and embedding observability from the start.

At enterprise scale, where downtime erodes trust and latency costs revenue, that confidence becomes your most critical asset. It is the definitive feature that separates a functional application from a formidable platform.

Sustaining that advantage depends on strong direction, and IT consulting and strategy ensure that scalability decisions align with long-term business priorities.

What Leaders Should Take Away

- High-traffic systems require fundamentally different architecture

- Horizontal scaling is mandatory, not optional

- Performance must be budgeted from day one

- Databases, not servers, are the real scaling bottleneck

- Distributed state is unavoidable and must be designed intentionally

- Every integration must assume failure

- Elasticity must balance resilience with cost discipline

- Observability is essential to operational confidence

- “Good enough” architecture always charges interest later

Frequently Asked Questions

Is refactoring for scale after launch a viable strategy?

It is a common and expensive misconception. Retrofitting core architecture like distributed state or database partitioning is vastly more complex than building it in from the start. The process often introduces new bugs and requires major rework. Investing in scalable design initially is ultimately more efficient and less risky.

How can we truly assess a team's experience with scale?

Ask them about specific failure modes, not just successes. Have them describe how they handle a cascading database connection failure. Request details on their cache invalidation strategy during a global deployment. The depth of their answers on these gritty, operational details reveals far more than the size of a portfolio ever could.

Do performance rules apply to internal enterprise tools?

They do, but the stakes differ. For internal users, latency directly erodes productivity and fosters frustration, leading to costly workarounds or errors. While the impact isn't always a lost sale, it quantifiably increases operational costs and can hinder critical business processes.

What is the underestimated cost of microservices?

The complexity shifts rather than disappears. You gain deployment independence, but now face the significant overhead of distributed data management and observability. The cost of maintaining consistency, executing cross-service queries, and tracing requests can surprise teams who only considered the scaling benefits.

Can auto-scaling handle a true viral spike?

Reactive scaling often fails due to provisioning delays. An effective response combines predictive scaling using custom metrics with pre-warmed resource pools. Crucially, your architecture must also prepare downstream dependencies like databases for the load, or include intelligent throttling to maintain overall system stability.

How do we maintain speed with slow legacy integrations?

You must architect around the latency. This involves decoupling the user experience through asynchronous processing, optimistic UI updates, and serving cached data. The goal is to perform background synchronization without making the user wait for the legacy system's response.

February 12, 2026
