Using MCP servers can ground your AI agents in the context of the task at hand. The LLM receives the right context for your task, so it can produce results specific to your goals.

In this comprehensive guide, we'll demonstrate AI agent development by building a practical example: a code review agent that integrates with Claude Desktop using the Model Context Protocol (MCP). Through this hands-on MCP development tutorial, you'll learn how to create AI agents for software development that can automatically detect your project's programming language, load appropriate review checklists, and provide structured feedback.

By the end of this guide, you'll understand the fundamentals of building AI agents and have a fully functional code review tool that you can customize for your team's specific needs or adapt for entirely different use cases.

What we will build:

- A code review agent that works with Claude Desktop

- Automatic technology detection for Python, JavaScript, Java, Go, Rust, and TypeScript

- Customizable review checklists with security, quality, and performance checks

- Pattern-based code analysis using regular expressions

- Real-time progress tracking during reviews

Time required: 30-45 minutes

Skill level: Intermediate Python knowledge

Prerequisites:

- Python 3.11 or higher installed

- Claude Desktop application

- Basic command line familiarity

Understanding AI Agent Development Through a Code Review Implementation

Before we dive in, let's clarify exactly how this code review system works:

How the Code Review Process Works in Our AI Agent

Our implementation uses static code analysis, not AI-based code review. Here's what happens:

- Pattern Matching: Developers create a YAML checklist of regex patterns based on their requirements. The system uses this checklist to flag issues line by line (e.g., eval(, hardcoded passwords, console.log).

- File Validation: Checks if required files exist (e.g., requirements.txt, package.json)

- Static Analysis: No actual code execution - just text pattern matching

The AI Agent's Role: How Claude Orchestrates MCP Tools

Claude's role is limited to:

- Natural Language Interface: You can ask "review my Python code" instead of calling command-line tools

- Tool Orchestration: Claude decides which MCP server development tools to call based on your request

- Result Presentation: Claude formats and explains the findings in conversational language

What This Means for AI Agent Development

- Regex-driven: The regex patterns you define in YAML files do the actual code review

- Not AI-based: Claude doesn't analyze your code semantically or understand logic

- Pattern-based: You define what to look for (like "find all eval() calls")

- Customizable: You control exactly what gets checked by editing YAML checklists

- Deterministic: Same code always produces the same results (no AI variability)

Why Building AI Agents with MCP This Way Is Effective

This hybrid approach gives you:

- Control: You define the exact rules via YAML checklists

- Speed: Pattern matching is fast, no AI inference needed for scanning

- Consistency: Deterministic results every time

- Extensibility: Easy to add new checks without AI training

- Convenience: Natural language interface via Claude Desktop

Think of it as linting rules + Claude's conversational interface. You're essentially building an AI agent with MCP that runs as a customizable linter through natural conversation.

Table of Contents

- Understanding the Model Context Protocol

- Project Architecture

- Setting Up the Project

- Building the Technology Detector

- Building the Validation System

- Building the Progress Tracker

- Building the Checklist Engine

- Creating YAML Checklists

Continue to Part 2 for MCP server development, testing, and deployment.

Understanding the Model Context Protocol

Before we dive into AI Agent Development, let's understand what MCP is and why it matters.

What is MCP?

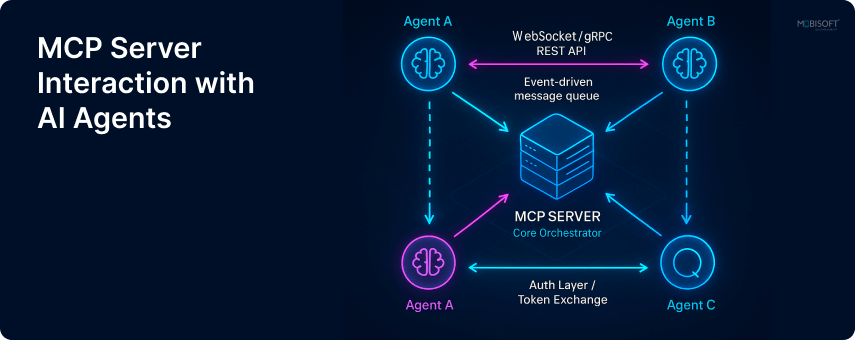

The Model Context Protocol is an open standard developed by Anthropic. It is used for smoothly connecting artificial intelligence agents with external data sources. In simple terms, it’s a bridge that lets your AI talk to external tools or data sources.

Why does MCP matter?

Without MCP, every integration between AI agents and your development tools would need custom code. MCP provides:

- A standard way to expose tools to artificial intelligence agents

- Secure, local execution of code analysis

- Bidirectional communication between Claude and your tools

- Extensibility for custom integrations

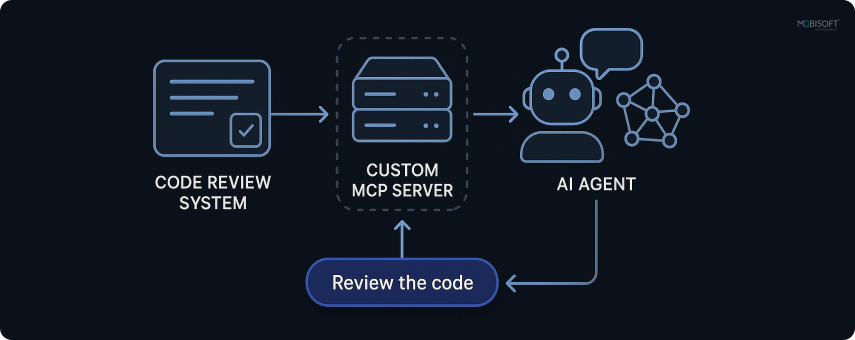

How it works

The flow is straightforward:

- You interact with Claude Desktop

- Claude identifies when it needs to use your MCP tools

- Claude sends a request to your MCP server

- Your MCP server executes the code review

- Results are sent back to Claude

- Claude presents the findings in natural language
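The request and response steps in this flow can be pictured with a small stand-in written in plain Python. MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method with a tool name and arguments. The sketch below is not the MCP SDK: `TOOLS`, `review_code`, and `handle_request` are hypothetical names used only for illustration, and a real server would let the SDK handle transport and message parsing.

```python
import json
from typing import Any, Callable, Dict


def review_code(project_path: str) -> str:
    """Hypothetical tool: a real server would run the checklist engine here."""
    return f"Reviewed {project_path}: 0 issues found"


# Registry mapping tool names to Python callables (illustrative only).
TOOLS: Dict[str, Callable[..., str]] = {"review_code": review_code}


def handle_request(raw: str) -> str:
    """Dispatch a JSON-RPC 2.0 'tools/call' request to a registered tool."""
    request: Dict[str, Any] = json.loads(raw)
    request_id = request.get("id")

    if request.get("method") != "tools/call":
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": request_id, "error": error})

    params = request.get("params", {})
    tool = TOOLS.get(params.get("name", ""))
    if tool is None:
        error = {"code": -32602, "message": "Unknown tool"}
        return json.dumps({"jsonrpc": "2.0", "id": request_id, "error": error})

    # Run the tool and wrap its text output in an MCP-style result.
    text = tool(**params.get("arguments", {}))
    result = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})
```

Claude never sees the Python function itself, only the tool's name, its declared inputs, and the text that comes back in the result.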

Project Architecture

Our code review agent consists of several components that work together:

mcp-code-reviewer/
├── main.py                    # MCP server with tool definitions
├── technology_detector.py     # Detects project programming language
├── checklist_engine.py        # Orchestrates the review process
├── validators/
│   ├── __init__.py
│   ├── base_validator.py      # Base class for all validators
│   ├── pattern_validator.py   # Regex-based code scanning
│   └── file_validator.py      # File existence checks
├── reporters/
│   ├── __init__.py
│   └── progress_tracker.py    # Tracks review progress
├── checklists/
│   ├── python.yaml            # Python review rules
│   ├── javascript.yaml        # JavaScript review rules
│   └── java.yaml              # Java review rules
└── pyproject.toml             # Project metadata and dependencies

Component Overview:

- Technology Detector identifies what programming language your project uses

- Checklist Engine loads the appropriate YAML checklist and coordinates execution

- Validators run the actual checks against your code

- Progress Tracker monitors execution and collects results

- MCP Server exposes everything as tools that Claude can use

Setting Up Your MCP Development Project

First, we need to create the project structure and install the required dependencies.

Step 1: Create the project directory

Open your terminal and run these commands:

# Create main project folder
mkdir mcp-code-reviewer
cd mcp-code-reviewer

# Create subdirectories for different components
mkdir checklists validators reporters

# Create __init__.py files for Python packages
touch validators/__init__.py
touch reporters/__init__.py

Step 2: Define project dependencies

Create a file called pyproject.toml in the project root:

[project]
name = "mcp-code-reviewer"
version = "0.1.0"
description = "MCP server for automated code review"
requires-python = ">=3.11"
dependencies = [
    "mcp[cli]>=1.16.0",
    "pyyaml>=6.0",
    "pathspec>=0.11.0",
]

[project.scripts]
mcp-code-reviewer = "main:mcp.run"

[build-system]
requires = ["hatchling"]
build-backend = "hatchling.build"

Dependency Explanation:

- mcp[cli]: The official MCP SDK from Anthropic, including CLI tools for MCP development

- pyyaml: Parses YAML files where we store our review checklists

- pathspec: Handles file pattern matching (similar to .gitignore patterns)

Important: The entry point mcp-code-reviewer = "main:mcp.run" tells Python to call the run() method on the MCP server object in main.py. This is crucial for the MCP server to work correctly.

Step 3: Install dependencies

We will use uv, a fast Python package manager. If you don't have it installed:

pip install uv

Now install the project dependencies:

uv sync

This creates a virtual environment and installs all required packages automatically.

Building the Technology Detector

The technology detector is the first component we need. It automatically identifies what programming language a project uses by looking for indicator files and counting source code files.

What it does:

The technology detector automatically identifies your project's programming language by analyzing indicator files and source code files.

How it works:

- Defines indicator files for each technology (e.g., package.json for JavaScript, pyproject.toml for Python)

- Scores each technology based on indicator files found (2 points per indicator)

- Counts source code files by extension and adds one point per 10 files, capped at 3 points

- Returns the highest-scoring technology with a confidence level

Key implementation concept:

# Define technology indicators
TECH_INDICATORS = {
    "python": {
        "files": ["requirements.txt", "setup.py", "pyproject.toml"],
        "extensions": [".py"],
        "frameworks": {"django": ["manage.py"], "flask": ["app.py"]}
    },
    "javascript": {
        "files": ["package.json", "yarn.lock"],
        "extensions": [".js", ".jsx"]
    }
}

# Scoring logic (excerpt from a TechnologyDetector method):
# each indicator file found adds 2 points, source files add up to 3 more
scores = {}
for tech, indicators in TECH_INDICATORS.items():
    score = 0  # start from zero for every technology
    for file in indicators["files"]:
        if self._file_exists(file):
            score += 2

    # Count source code files
    ext_count = self._count_extensions(indicators["extensions"])
    if ext_count > 0:
        score += min(ext_count / 10, 3)  # Cap at 3 points
    scores[tech] = score

Create the file:

Create technology_detector.py in your project root with the complete implementation, including the TechnologyDetector class with methods for:

- detect(): Main detection method

- _file_exists(): Checks for indicator files

- _count_extensions(): Counts source files

- _detect_frameworks(): Identifies frameworks by reading config files

Note: Full implementation available in the GitHub repository.
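To make the scoring idea concrete without the rest of the class, here is a minimal standalone sketch. It assumes the same 2-points-per-indicator and capped file-count rules described above; `score_technologies` and `detect_technology` are hypothetical function names, not the repository's API, and the indicator table is trimmed to two languages.

```python
from pathlib import Path
from typing import Dict, Optional

# Trimmed-down indicator table (assumption: mirrors the structure shown above).
TECH_INDICATORS = {
    "python": {
        "files": ["requirements.txt", "setup.py", "pyproject.toml"],
        "extensions": [".py"],
    },
    "javascript": {
        "files": ["package.json", "yarn.lock"],
        "extensions": [".js", ".jsx"],
    },
}


def score_technologies(project_path: Path) -> Dict[str, float]:
    """Return a score per technology for the given project directory."""
    scores: Dict[str, float] = {}
    for tech, indicators in TECH_INDICATORS.items():
        score = 0.0
        # Each indicator file that exists is worth 2 points.
        for name in indicators["files"]:
            if (project_path / name).exists():
                score += 2
        # Source files add one point per 10 files, capped at 3 points.
        ext_count = sum(
            1
            for path in project_path.rglob("*")
            if path.is_file() and path.suffix in indicators["extensions"]
        )
        score += min(ext_count / 10, 3)
        scores[tech] = score
    return scores


def detect_technology(project_path: Path) -> Optional[str]:
    """Return the highest-scoring technology, or None if nothing matched."""
    scores = score_technologies(project_path)
    best = max(scores, key=lambda tech: scores[tech])
    return best if scores[best] > 0 else None
```

Capping the file-count contribution keeps a large vendored folder of one language from outweighing an explicit indicator file of another.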

Building the Validation System

The validation system is the heart of our code review agent. We have three validator classes: a base class and two implementations.

Create a validators package

First, create validators/__init__.py:

from .base_validator import BaseValidator, ValidationResult
from .pattern_validator import PatternValidator
from .file_validator import FileValidator

__all__ = ['BaseValidator', 'ValidationResult', 'PatternValidator', 'FileValidator']

Base Validator

What it does:

Provides an abstract base class and a result container for all validators.

How it works:

- The ValidationResult class stores check outcomes (pass/fail, message, file location, line number)

- BaseValidator defines the abstract validate() method all validators must implement

- Provides helper method get_files_to_check() for scanning source files

Key implementation concept:

from abc import ABC, abstractmethod
from typing import Any, Dict, List


class ValidationResult:
    """Represents the result of a single validation check."""

    def __init__(self, check_id: str, passed: bool, message: str = "",
                 file_path: str = "", line_number: int = 0, severity: str = "info"):
        self.check_id = check_id
        self.passed = passed
        self.message = message
        self.file_path = file_path
        self.line_number = line_number
        self.severity = severity


class BaseValidator(ABC):
    @abstractmethod
    def validate(self, check: Dict[str, Any]) -> List[ValidationResult]:
        """Must be implemented by subclasses."""
        pass

Create the file:

Create validators/base_validator.py with the complete ValidationResult and BaseValidator classes.

Note: Full implementation available in the GitHub repository.
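The get_files_to_check() helper mentioned above is not shown in this article. As a rough idea of what such a helper might do, the sketch below walks the project tree, keeps files with the requested extensions, and skips common dependency and cache folders. The `SKIP_DIRS` set and the function signature are assumptions for illustration; the repository version may differ (for example, it may use pathspec for ignore patterns).

```python
from pathlib import Path
from typing import Iterable, List

# Directories that usually hold third-party or generated code (assumed defaults).
SKIP_DIRS = {".git", ".venv", "venv", "node_modules", "__pycache__"}


def get_files_to_check(project_path: Path, extensions: Iterable[str]) -> List[Path]:
    """Collect source files under project_path that have one of the given extensions."""
    wanted = set(extensions)
    files: List[Path] = []
    for path in project_path.rglob("*"):
        if not path.is_file() or path.suffix not in wanted:
            continue
        # Look only at the directory components, not the file name itself.
        parent_parts = path.relative_to(project_path).parts[:-1]
        if any(part in SKIP_DIRS for part in parent_parts):
            continue
        files.append(path)
    return sorted(files)
```

Returning a sorted list keeps the scan order stable, which supports the deterministic results the article promises.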

Pattern Validator

What it does:

Scans source code files line-by-line for regex pattern matches (security issues, code smells, etc.).

How it works:

- Receives check definition with regex patterns from YAML

- Gets appropriate source files based on file extensions

- Compiles regex patterns and scans each line

- Records failures with file path and line number when patterns match

Key implementation concept:

# Core pattern matching logic
for file_path in files:
    content = file_path.read_text(encoding='utf-8', errors='ignore')
    lines = content.split('\n')

    for pattern_str in patterns:
        pattern = re.compile(pattern_str, re.IGNORECASE | re.MULTILINE)

        for line_num, line in enumerate(lines, start=1):
            if pattern.search(line):
                results.append(ValidationResult(
                    check_id=check['id'],
                    passed=False,
                    message=f"Pattern match: {check['description']}",
                    file_path=str(file_path),
                    line_number=line_num,
                    severity=check.get('severity', 'info')
                ))

Create the file:

Create validators/pattern_validator.py with the PatternValidator class, including a helper method _get_extensions() to map technology names to file extensions.

Note: Full implementation available in the GitHub repository.
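The same line-by-line scan can be tried on a plain string, without any project files. This is a simplified sketch rather than the repository's PatternValidator: `scan_text` is a hypothetical helper, and it compiles each pattern once up front instead of once per file.

```python
import re
from typing import List, Tuple


def scan_text(text: str, patterns: List[str]) -> List[Tuple[int, str]]:
    """Return (line_number, pattern) pairs for every line that matches a pattern."""
    # Compile once so the work is not repeated for every line.
    compiled = [re.compile(p, re.IGNORECASE) for p in patterns]
    hits: List[Tuple[int, str]] = []
    for line_num, line in enumerate(text.splitlines(), start=1):
        for pattern in compiled:
            if pattern.search(line):
                hits.append((line_num, pattern.pattern))
    return hits


if __name__ == "__main__":
    sample = 'import os\npassword = "hunter2"\nresult = eval(user_input)\n'
    for line_num, pattern in scan_text(sample, [r"\beval\(", r"password\s*=\s*['\"]"]):
        print(f"line {line_num}: matched {pattern}")
```

Because the scan is pure text matching, it also flags matches inside comments and string literals. That is the trade-off of the pattern-based approach described earlier.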

File Validator

What it does:

Checks if required project files exist (like requirements.txt, package.json, test files).

How it works:

- Receives a check definition with a list of required files or glob patterns

- Checks if any of the specified files exist in the project

- Returns pass if found, fail with a missing file list if not

- Supports glob patterns for finding test files or other patterns

Key implementation concept:

# Core file existence check
files_to_check = check.get('files', [])
found = False

for filename in files_to_check:
    file_path = self.project_path / filename
    if file_path.exists():
        found = True
        break

if found:
    results.append(ValidationResult(check_id=check['id'], passed=True, ...))
else:
    results.append(ValidationResult(
        check_id=check['id'],
        passed=False,
        message=f"Missing required files: {', '.join(files_to_check)}",
        ...
    ))

Create the file:

Create validators/file_validator.py with the FileValidator class, including helper method _check_pattern_files() for glob pattern matching.

Note: Full implementation available in the GitHub repository.
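The glob-based variant is not shown above. One plausible way to implement it is with `Path.rglob`, as in the sketch below; `find_matching_files` is a hypothetical stand-in for the repository's _check_pattern_files(), whose real behavior may differ.

```python
from pathlib import Path
from typing import List


def find_matching_files(project_path: Path, globs: List[str]) -> List[Path]:
    """Return all files anywhere under project_path that match one of the glob patterns."""
    matches = set()
    for pattern in globs:
        # rglob searches the whole tree, so tests/ and nested packages are covered.
        matches.update(p for p in project_path.rglob(pattern) if p.is_file())
    return sorted(matches)
```

A check such as "test files exist" would then pass when the returned list is non-empty, for example with the patterns test_*.py and *_test.py.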

Building the Progress Tracker

The progress tracker monitors review execution and collects results.

First, create reporters/__init__.py:

from .progress_tracker import ProgressTracker

__all__ = ['ProgressTracker']

What it does:

Tracks real-time progress of code review execution, counting passed/failed/skipped checks and recording failure details.

How it works:

- Initializes counters for total, passed, failed, and skipped checks

- Updates the current check being processed

- Records each check outcome (pass/fail/skip)

- Collects detailed failure information (file, line, message, severity)

- Calculates percentage completion and formats reports

Key implementation concept:

from typing import Any, Dict


class ProgressTracker:
    def __init__(self):
        self.total_checks = 0
        self.passed = 0
        self.failed = 0
        self.skipped = 0
        self.in_progress = 0  # initialized here because record_pass() resets it
        self.current_check = None
        self.failures = []
        self.start_time = None
        self.end_time = None

    def record_pass(self):
        """Record a passed check."""
        self.passed += 1
        self.in_progress = 0

    def record_fail(self, failure_info: Dict[str, Any]):
        """Record a failed check."""
        self.failed += 1
        self.failures.append(failure_info)

Create the file:

Create reporters/progress_tracker.py with the complete ProgressTracker class, including methods for:

- start(), update_check(), complete()

- record_pass(), record_fail(), record_skip()

- get_progress(), get_summary()

- format_progress_bar(), format_status(), format_failures()

Note: Full implementation available in the GitHub repository.
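The percentage and progress-bar formatting are left to the repository. As an illustration of the arithmetic involved, the following sketch computes completion from the counters and renders a text bar. The function names, the `#`/`-` characters, and the default width are assumptions, not the repository's output format.

```python
def completion_percent(passed: int, failed: int, skipped: int, total: int) -> float:
    """Percentage of checks that have finished, whatever their outcome."""
    if total <= 0:
        return 0.0
    done = passed + failed + skipped
    # Clamp at 100 in case more outcomes were recorded than checks were planned.
    return min(100.0, 100.0 * done / total)


def format_progress_bar(percent: float, width: int = 20) -> str:
    """Render a fixed-width text bar such as [#####-----] 50%."""
    filled = int(width * percent / 100)
    return "[" + "#" * filled + "-" * (width - filled) + f"] {percent:.0f}%"
```

For example, with 3 passed, 1 failed, and 1 skipped out of 10 checks, `completion_percent` gives 50.0 and `format_progress_bar(50.0, width=10)` gives `[#####-----] 50%`.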

Building the Checklist Engine

The checklist engine coordinates everything, loading YAML checklists and orchestrating validator execution.

What it does:

Manages the complete code review process by loading YAML checklists, running validator checks, and monitoring progress.

How it works:

- Loads technology-specific YAML checklist from the checklists directory

- Counts total checks and initializes progress tracking

- Iterates through each category and each check item within it

- Determines the appropriate validator (PatternValidator or FileValidator) based on the check definition

- Executes validator and records results (pass/fail)

- Provides progress callbacks for real-time updates

- Returns a comprehensive summary with failures, duration, and statistics

Key implementation concept:

def review_code(self, technology: str, progress_callback=None) -> Dict[str, Any]:
    # Load checklist
    checklist = self.load_checklist(technology)

    # Start tracking
    total_checks = sum(len(category['items']) for category in checklist['categories'])
    self.progress_tracker.start(total_checks)

    # Execute checks
    for category in checklist['categories']:
        for check in category['items']:
            # Update progress
            self.progress_tracker.update_check(check['id'], check['description'])

            # Execute validation (choose appropriate validator)
            results = self._execute_check(check, technology)

            # Record results
            passed = all(r.passed for r in results)
            if passed:
                self.progress_tracker.record_pass()
            else:
                for result in results:
                    if not result.passed:
                        self.progress_tracker.record_fail({...})

    return self.progress_tracker.get_summary()

Create the file:

Create checklist_engine.py with the complete ChecklistEngine class, including:

- load_checklist(): Loads YAML files

- review_code(): Main orchestration method

- _execute_check(): Chooses and runs the appropriate validator

- get_progress(), get_summary(), format_report(): Result retrieval

Note: Full implementation available in the GitHub repository.
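How _execute_check() picks a validator is not spelled out here. One plausible rule, shown purely as an assumption, is to route on the shape of the check definition: a files key or a file-style validator name goes to the file validator, a patterns key goes to the pattern validator, and anything else is skipped. `choose_validator` and `FILE_STYLE_VALIDATORS` are hypothetical names.

```python
from typing import Any, Dict

# Validator names whose "patterns" are file globs rather than regexes (assumption).
FILE_STYLE_VALIDATORS = {"check_test_files"}


def choose_validator(check: Dict[str, Any]) -> str:
    """Return 'file', 'pattern', or 'skip' for a single checklist item."""
    if "files" in check:
        return "file"
    if check.get("validator") in FILE_STYLE_VALIDATORS:
        return "file"
    if check.get("patterns"):
        return "pattern"
    # Items such as a function-length threshold have no regex or file list to run.
    return "skip"
```

Under this rule, a check with only a threshold would be recorded as skipped rather than silently passed, which keeps the summary honest about what was actually verified.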

Creating YAML Checklists

Checklists define what to check for each programming language. Let's create a Python checklist as an example.

Create checklists/python.yaml:

technology: python
description: Code review checklist for Python projects

categories:
  - name: Security
    items:
      - id: SEC-001
        description: No hardcoded credentials or API keys
        validator: check_hardcoded_secrets
        severity: critical
        patterns:
          - "password\\s*=\\s*[\"'][^\"']+[\"']"
          - "api_key\\s*=\\s*[\"'][^\"']+[\"']"
          - "secret\\s*=\\s*[\"'][^\"']+[\"']"
          - "token\\s*=\\s*[\"'][^\"']+[\"']"

      - id: SEC-002
        description: SQL injection prevention (use parameterized queries)
        validator: check_sql_injection
        severity: critical
        patterns:
          - "execute\\([^)]*%[^)]*\\)"
          - "execute\\([^)]*\\+[^)]*\\)"

      - id: SEC-003
        description: No eval() or exec() usage
        validator: check_dangerous_functions
        severity: critical
        patterns:
          - "\\beval\\("
          - "\\bexec\\("

  - name: Code Quality
    items:
      - id: QA-001
        description: Functions should be under 50 lines
        validator: check_function_length
        severity: warning
        threshold: 50

      - id: QA-002
        description: No commented-out code blocks
        validator: check_commented_code
        severity: info
        patterns:
          - "^\\s*#.*\\b(def|class|if|for|while)\\b"

      - id: QA-003
        description: Proper exception handling (no bare except)
        validator: check_exception_handling
        severity: warning
        patterns:
          - "except\\s*:"

  - name: Best Practices
    items:
      - id: BP-001
        description: Use context managers for file operations
        validator: check_context_managers
        severity: warning
        patterns:
          - "open\\([^)]+\\)(?!\\s*as\\s+)"

      - id: BP-002
        description: Requirements file exists
        validator: check_requirements_file
        severity: info
        files: ['requirements.txt', 'pyproject.toml', 'Pipfile']

  - name: Testing
    items:
      - id: TEST-001
        description: Test files exist
        validator: check_test_files
        severity: info
        patterns:
          - 'test_*.py'
          - '*_test.py'

      - id: TEST-002
        description: No print statements (use logging)
        validator: check_print_statements
        severity: info
        patterns:
          - "\\bprint\\("

Important YAML Syntax Notes:

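- Inside double-quoted YAML strings the backslash is an escape character, so every backslash in a regex pattern must be doubled

- For example, \b becomes \\b in the YAML file

- The checklists here use double quotes for regex patterns; single-quoted YAML strings keep backslashes literal, so the doubling applies only to double-quoted strings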

- If you see parsing errors, check your escaping and quote usage
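Escaping mistakes usually surface only when a review runs, so it can help to sanity-check a checklist right after loading it. The sketch below takes the dictionary you would get from a YAML loader (for example `yaml.safe_load`) and reports missing keys and regexes that fail to compile. `lint_checklist`, the required-key list, and the `GLOB_VALIDATORS` set are assumptions for illustration, not part of the repository.

```python
import re
from typing import Any, Dict, List

REQUIRED_KEYS = ("id", "description", "severity")  # assumed minimum per item
# Items whose patterns are file globs, not regexes, are not compiled (assumption).
GLOB_VALIDATORS = {"check_test_files"}


def lint_checklist(checklist: Dict[str, Any]) -> List[str]:
    """Return a list of human-readable problems found in a loaded checklist."""
    problems: List[str] = []
    for category in checklist.get("categories", []):
        for item in category.get("items", []):
            check_id = item.get("id", "<missing id>")
            for key in REQUIRED_KEYS:
                if key not in item:
                    problems.append(f"{check_id}: missing '{key}'")
            if item.get("validator") in GLOB_VALIDATORS:
                continue
            for pattern in item.get("patterns", []):
                try:
                    re.compile(pattern)
                except re.error as exc:
                    problems.append(f"{check_id}: bad regex {pattern!r} ({exc})")
    return problems
```

An empty list means every item has the expected keys and every regex compiles. It does not prove the patterns match what you intended, so a quick run against a known-bad sample file is still worthwhile.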

Similarly, create checklists/javascript.yaml:

technology: javascript
description: Code review checklist for JavaScript/TypeScript projects

categories:
  - name: Security
    items:
      - id: SEC-001
        description: No hardcoded credentials or API keys
        validator: check_hardcoded_secrets
        severity: critical
        patterns:
          - "password\\s*[:=]\\s*[\"'][^\"']+[\"']"
          - "apiKey\\s*[:=]\\s*[\"'][^\"']+[\"']"

      - id: SEC-002
        description: No eval() usage
        validator: check_dangerous_functions
        severity: critical
        patterns:
          - "\\beval\\("

      - id: SEC-003
        description: Avoid innerHTML (XSS vulnerability)
        validator: check_xss_vulnerabilities
        severity: critical
        patterns:
          - "\\.innerHTML\\s*="

  - name: Code Quality
    items:
      - id: QA-001
        description: Use const/let instead of var
        validator: check_var_usage
        severity: warning
        patterns:
          - "\\bvar\\s+"

      - id: QA-002
        description: No console.log in production code
        validator: check_console_statements
        severity: info
        patterns:
          - "console\\.log\\("
          - "console\\.debug\\("

  - name: Best Practices
    items:
      - id: BP-001
        description: Package.json exists
        validator: check_package_json
        severity: critical
        files: ["package.json"]

      - id: BP-002
        description: ESLint configuration exists
        validator: check_eslint_config
        severity: info
        files: [".eslintrc", ".eslintrc.js", ".eslintrc.json"]

What's Next?

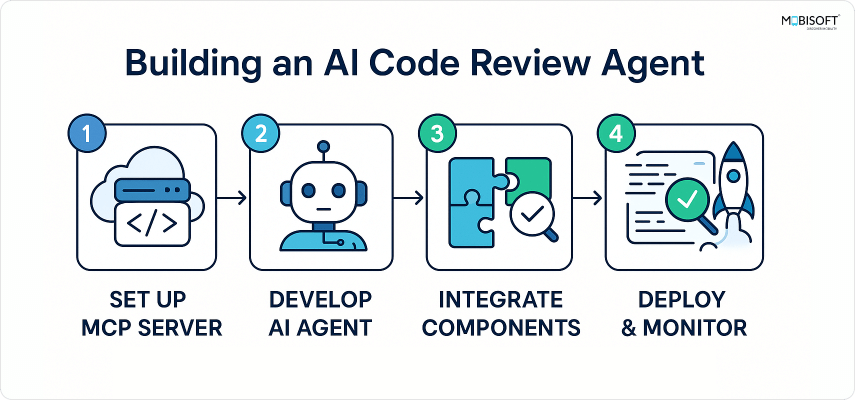

Congratulations! You've completed Part 1 and built all the core components of your AI agent using MCP development:

- Technology detector for automatic language identification

- Validation system with pattern and file validators

- Progress tracking for real-time feedback

- Checklist engine for orchestrating reviews

- YAML checklists for Python and JavaScript

In Part 2, we'll cover:

- Building the MCP server to expose these components as tools

- Configuring Claude Desktop to use your MCP server

- Testing with both MCP Inspector and Claude Desktop

- Troubleshooting common issues

- Extending the agent with additional features

Continue to Part 2: Integration, Testing & Deployment to complete your AI agent with MCP server development!

You can explore the complete source code and follow along with the project on GitHub. Check out the repository here: GitHub Repository Link

Questions or Feedback?

If you have any queries regarding this guide or wish to share improvements:

- Open a new issue in the GitHub repository

- Share your feedback on social media platforms

- Suggest refinements in the project

Happy coding and enjoy building AI agents!

October 16, 2025
