AI pilots perform perfectly in controlled demonstrations. They execute tasks and follow protocols as designed. Once deployed into real workflows, however, these same systems often start to fray. Decisions become inconsistent, and policies are misapplied. The issue is rarely the model's capability. Instead, it stems from the unmanaged information ecosystem surrounding it.

This gap is what context engineering for LLMs addresses. It is the essential discipline for moving from impressive prototypes to robust, production-grade AI infrastructure. It builds the reliable memory and attention systems that enterprise operations require. Without this layer, even the most powerful AI will quietly break under real-world pressure, preventing teams from delivering reliable AI agents at scale.

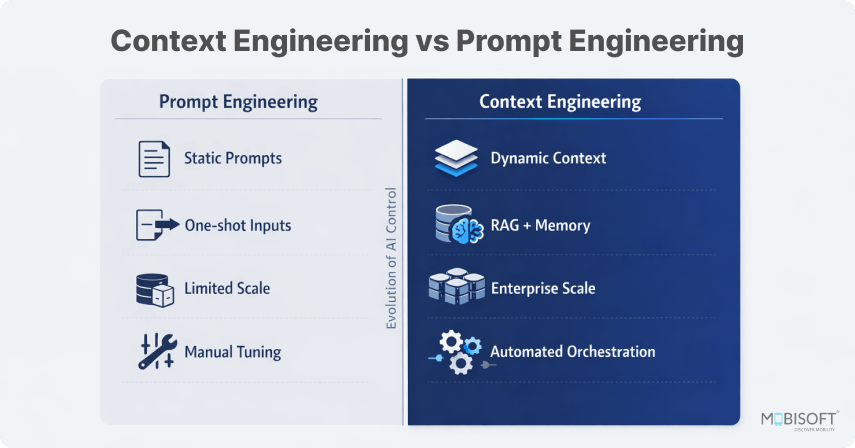

Why Prompt Engineering Fails in Production AI?

A perfectly crafted prompt can feel like a master key in a demonstration. In a live enterprise environment, that same prompt often stops working. The issue is one of scope. Prompt engineering optimizes a single interaction, but production AI must survive relentless system pressure. This local optimization breaks under the weight of real workflows, which is why context engineering vs prompt engineering becomes a real concern in enterprise systems.

Workflow Degradation at Scale

A prompt is static, but business processes are dynamic and expansive. As workflows grow in complexity, the initial instructions are diluted. They must compete with user requests, historical data, and new outputs. The clarity of the original design dissipates, leading to unpredictable behavior and reduced AI agent reliability.

Single-String Architecture

Prompts exist within one continuous stream of text. Enterprise systems, however, are multifaceted. They require separate threads for policy, memory, and operations within a scalable AI agent architecture. Forcing everything into a single string creates a fundamental mismatch. It conflates memory, instruction, and output until the model cannot distinguish its core task.

Instruction Burial & Memory Loss

Key directives get lost in the expanding context. Critical details from earlier in a conversation become inaccessible without proper LLM memory management and dynamic context management. The model then experiences task interference, applying logic from one domain to another. This manifests as inconsistency, not mere hallucination. It is a structural failure.

Consequently, businesses face tangible risks. Inconsistency leads to compliance gaps and erodes trust. Policy drift introduces operational chaos. Relying solely on prompt engineering is building on unstable ground. It cannot support the weight of a full-scale system.

To see how enterprises operationalize context engineering across multiple models, explore the MI Team – AI multi-LLM platform for enterprises for building and managing scalable AI agents.

What Context Means in Modern LLM Systems

We often discuss context as if it were a vessel, a simple container holding the conversation's history. This view is incomplete. In practice, context functions as the model's entire working memory, a dynamic and contested information environment shaped by LLM context engineering. Every new piece of data enters a crowded field where details compete for a finite attention budget.

Modern models face a constant tension. As context grows, the signal of what truly matters can be drowned out by noise inside the model context window optimization process. The model's ability to focus on critical instructions or recent facts diminishes because all information, urgent or trivial, exists with equal potential. However, existing within the context window is not the same as being accessible. Key details become lost in plain sight, buried beneath layers of dialogue or tangential data.

This unmanaged environment quietly sabotages reasoning. The model may have all the necessary data present, yet fails to retrieve the correct piece at the right moment without proper LLM context optimization. It expends its cognitive bandwidth parsing the entire information instead of acting on curated, relevant knowledge. Therefore, consistent reasoning quality depends not on how much information we provide, but on how effectively we govern that information ecosystem. Without this governance, the context becomes a liability.

The Four Context Layers That Control AI Behavior

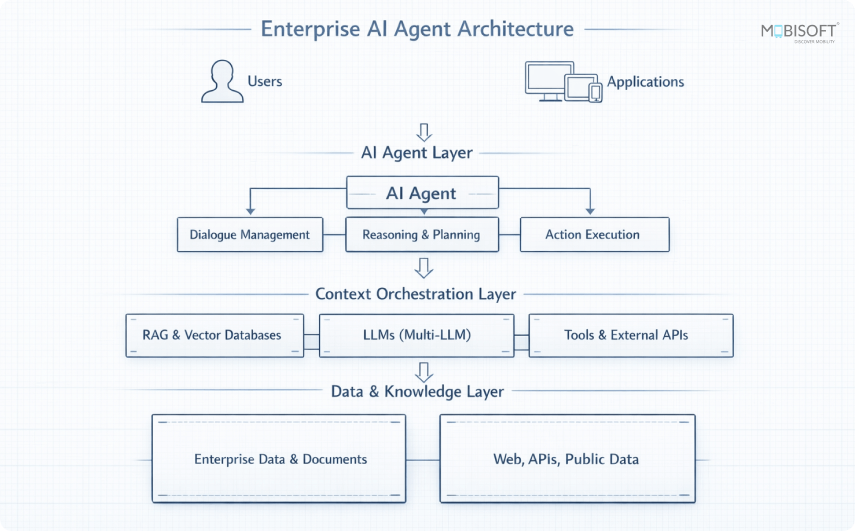

Building AI that performs consistently at an enterprise level requires more than a powerful model. It demands an architectural approach to information management aligned with context engineering for AI agents. Think of it as moving from a single, overloaded circuit to a structured electrical grid, with the following layers:

External Persistent Memory

- This foundational layer stores facts, user data, and policy rules in a dedicated database, not in temporary conversation text, often backed by vector databases for LLMs.

- It solves the critical failure of amnesia, where an agent forgets crucial information between sessions.

- For a business, this prevents costly inconsistencies and ensures every interaction respects historical context and established rules for enterprise AI agents.

Just-in-Time Context Selection

- Acting as a precision filter, this layer dynamically retrieves only the data relevant to the immediate task.

- It prevents the model from being overwhelmed by irrelevant information, a primary cause of distraction and degraded reasoning.

- The business outcome is focused, accurate responses, and the elimination of noise-induced errors.

Context Compression

- When long narratives or documents are necessary, this layer distills them into concise, semantic summaries.

- It directly fights instruction burial, where core directives get lost in verbose history.

- This maintains coherence over long interactions and ensures key narrative threads are preserved without wasting the model's attention budget.

Workflow Isolation

- This layer establishes strict boundaries between different processes, such as separate customer sessions or internal tools.

- It prevents context clash, where information from one domain bleeds into and corrupts another.

- Operationally, this guarantees compliance and purity of execution, stopping dangerous policy drift.

In essence, these layers form the new backbone of production AI through enterprise context engineering. They replace fragile, monolithic prompts with a resilient system.

For teams evolving from basic automation to intelligent agents, AI chatbot development services support building context-aware conversational systems for real workflows.

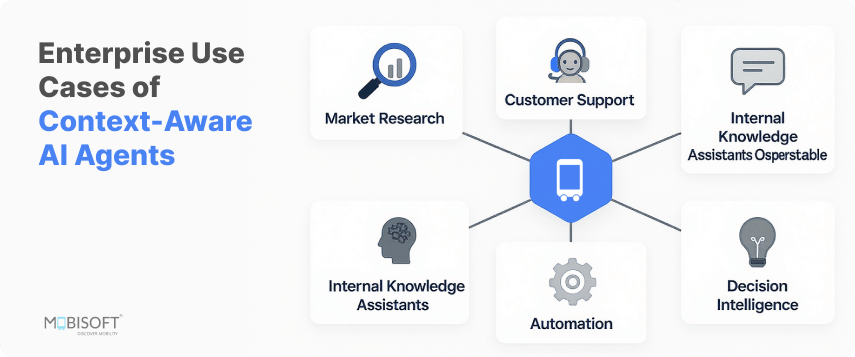

How Context Engineering Redesigns AI Agents

Adopting a context engineering mindset fundamentally alters how we design AI agents. The focus moves from crafting input strings to building integrated information systems through context engineering for AI agents. An agent becomes less a conversational interface and more a coordinator of memory, tools, and state. This change in perspective is what separates a simple chatbot from an autonomous operational unit.

Agents as Memory-Enabled Systems

The core upgrade is integrating persistent, searchable memory with proper LLM memory management. This allows the agent to learn from past interactions and apply those lessons to new situations. It transitions the system from having a goldfish-like attention span to developing a form of institutional knowledge. The agent’s actions become informed by history, not just the last few lines of dialogue.

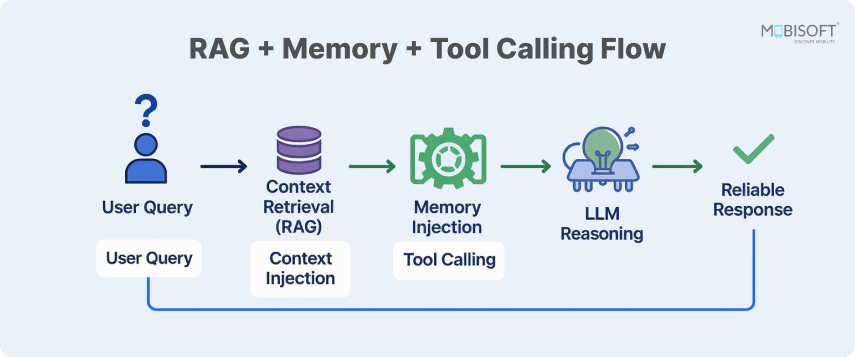

Structured Retrieval

Decision-making is moving from pure generation to retrieval-augmented reasoning using retrieval augmented generation (RAG). The agent actively queries its knowledge bases and memory before formulating a response. This process grounds its outputs in verified sources and relevant precedents, effectively reducing speculative answers. The loop of retrieval, analysis, and then generation becomes its operational heartbeat.

Tool Workflow Orchestration

With a managed context, the agent can reliably orchestrate complex tool sequences across AI agent workflows. It maintains the required state and parameters across multiple steps, such as querying a database, formatting results, and triggering an API. Context engineering provides the thread that ties these discrete actions into a coherent, executable procedure without dropping critical data along the way.

In this architecture, the prompt recedes into the background. It becomes a trigger or a configuration step, not the sole vessel of intelligence. The true substance of the system lies in its memory, retrieval processes, and stateful workflow management. This is why modern, reliable agents are better understood as context systems. Their output is a direct product of their engineered information environment.

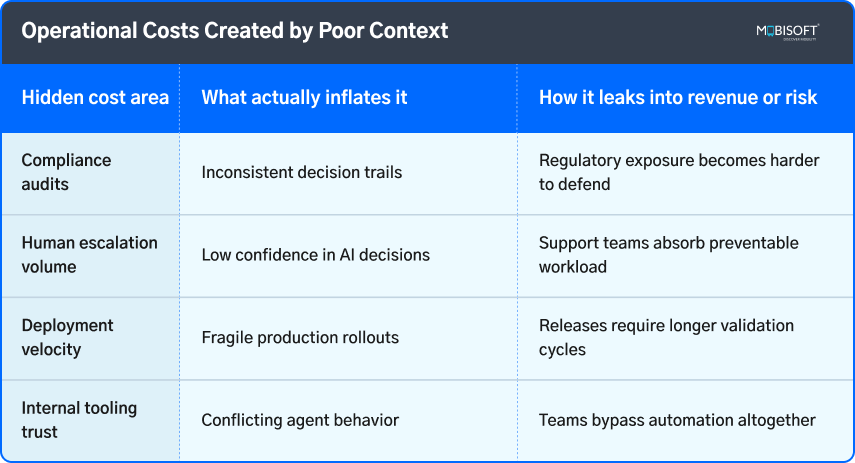

Context Failures That Break Enterprise AI

Even with capable models, unengineered context introduces predictable points of failure in enterprise AI agents. These are not mere errors but systemic breakdowns in the AI's operating environment. Economic Times reported that 95% of executives reported at least one AI mishap in deployment. And only 2% of firms met responsible AI use standards in 2025. Recognizing these patterns is crucial for diagnosing reliability issues and preempting significant business risk.

Context Poisoning

- Malicious or malformed inputs corrupt the agent's working memory. This can intentionally bias its reasoning or accidentally inject false premises.

- The business consequence is compromised decision integrity, leading to incorrect actions sourced from bad data.

Context Distraction

- An overstuffed context window forces the model to process irrelevant information. Critical instructions are lost in the noise.

- This manifests as the agent delivering generic or off-topic responses, directly causing operational delays and quality inconsistency.

Context Confusion

- Mixing domains or tasks within a single context stream blends separate protocols. The agent applies policy from one workflow to another.

- The result is severe compliance risk, as actions in regulated areas may ignore required guardrails.

Context Clash

- Conflicting data points or instructions within the same context create logical paralysis. The model cannot resolve opposing directives.

- This leads to operational deadlock, indecisive outputs, and a complete breakdown in process continuity.

Each failure mode escalates technical debt into a tangible business impact. They generate wrong decisions, regulatory exposure, and process failure. Mitigation requires the architectural layers, like isolation, selective retrieval, and clean memory management. Without these, context itself becomes the primary threat to system reliability.

For structured rollout and controlled environments, MCP-based AI agent deployment enables reliable integration and operational governance.

Why Context Engineering Drives Reliable AI at Scale?

As per Forbes, only 5% of enterprise AI pilots achieve measurable P&L impact or scale across workflows. The remaining 95% produce little or no measurable financial return. Superior model access no longer guarantees a superior AI application. The enduring advantage now stems from a deeper layer of infrastructure. This is where context engineering for LLMs operates, turning powerful but unpredictable models into calibrated business systems.

Building Institutional Consistency

Output variation is a major operational cost. Context engineering directly manufactures repeatability. By architecting how information flows and is prioritized, it ensures the AI's reasoning aligns with institutional knowledge and rules every single time. This engineered consistency becomes a product feature that users rely on.

Enabling Governance by Design

Auditing a black-box model is difficult. Auditing a structured information environment is feasible. Context engineering creates the necessary control planes for oversight. It logs decisions, manages data lineage, and enforces policy at the point of retrieval. This makes safety and compliance verifiable attributes, not afterthoughts.

Creating Model-Agnostic Value

Locking into a single model vendor poses strategic risk. A well-designed context layer abstracts core business logic, such as memory, workflows, and rules, away from the underlying model. This allows the organization to adopt new models seamlessly. It protects the operational intelligence as the technology evolves.

The market is beginning to recognize this transition. It is moving from prompt crafters to the architects who can build these resilient cognitive environments. It becomes the core infrastructure for long-term competitive differentiation, turning a cost center into a stable platform for innovation.

A practical example of retrieval-driven context systems can be seen in AI in market research using RAG, where enterprise data is transformed into actionable insights.

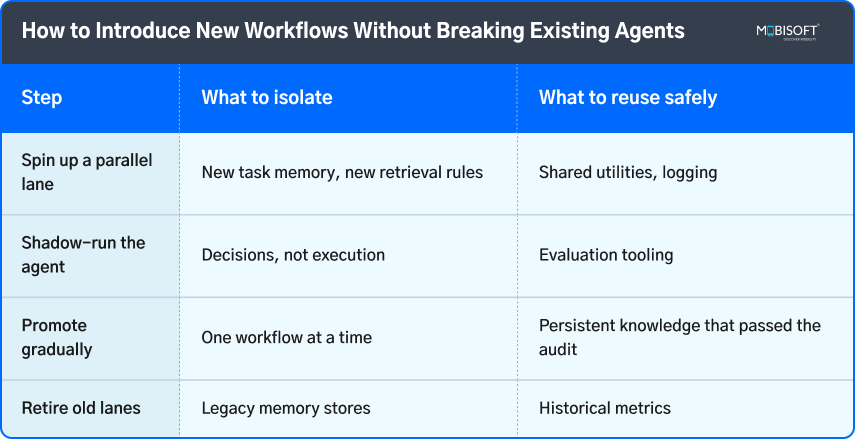

Before promoting new workflows, applying LLM evaluation strategies for reliable AI agents helps validate performance, safety, and consistency.

Context Is the Real AI Infrastructure

The journey from prototype to production reveals a clear distinction. Prompt engineering unlocked the potential for dialogue with AI, making the technology initially usable and accessible. Context engineering addresses the far harder problem of making it dependable. This is not a minor adjustment. It represents a fundamental change in perspective, from viewing AI as a tool for generating responses to treating it as a system that must manage its own cognitive environment through context engineering for LLMs.

or enterprises, this means redesigning AI integration with a system-first mindset. The focus must expand from model selection and prompt design to include the architecture of memory, the precision of retrieval, and the isolation of processes. This deeper infrastructure is what prevents breakdowns under real pressure. As models continue to evolve, this engineered layer of stability and governance will become the true differentiator, separating fragile experiments from robust, long-running AI operations that deliver consistent value and support reliable AI agents at scale.

To extend this architecture into production, explore AI agent frameworks and SDKs for enterprise automation for building scalable agent workflows.

Key Takeaways

- Prompt engineering builds conversations, but it often breaks under real workflow pressure. It is a local optimization.

- Context is not a container. It is the model's dynamic and competitive working memory environment.

- Unmanaged context causes silent failures. Information becomes inaccessible, leading to distraction and reasoning decay.

- Reliable systems require four control layers. These manage memory, selection, compression, and isolation of workflows.

- This approach changes fundamental agent architecture. Agents become context systems powered by retrieval and state management.

- Specific failure modes like poisoning or clash create direct business risk. They cause compliance gaps and operational drift.

- Context engineering is now a critical infrastructure layer. It provides the consistency and governance enterprises require.

- The field changes focus from model capability to information environment design. This builds the foundation for long-term, trustworthy AI operations guided by LLM context engineering.

FAQs

What differentiates a context window from an engineered working memory system?

A context window is a fixed input limit. An engineered working memory is an active architecture. It manages what information enters that window through selection, compression, and retrieval. This results in a reliable, performance-critical subsystem that directs the model's focus intentionally.

How does context engineering incorporate Retrieval-Augmented Generation (RAG)?

RAG serves as a core technique within the broader context of the engineering framework. It specifically enables the just-in-time context selection layer. Context engineering defines the full system, including persistent memory and workflow isolation, using RAG as one component for dynamic information retrieval.

Is this discipline only applicable to conversational AI interfaces?

No. It is fundamental for any autonomous agent executing sequential decisions. This includes systems performing data analysis, orchestrating APIs, or managing multi-step processes. Any agent requiring state awareness across actions depends on engineered context to maintain operational integrity.

What organizational role is responsible for context engineering?

This function typically resides at the intersection of ML engineering, data infrastructure, and platform DevOps. It requires a systems architecture mindset focused on information flow and state management, moving beyond pure model tuning to encompass the entire runtime environment.

Can context engineering eliminate factual inaccuracies in model outputs?

It directly mitigates inaccuracies arising from poor data access or instruction loss. It ensures the model grounds its responses in the provided sources. It cannot correct flaws inherent in the model's base training or its reasoning on perfectly retrieved data.

Which metrics indicate successful context engineering implementation?

Operational indicators, not just accuracy, measure success. Key metrics include reduced variance in output quality, increased adherence to business rules, decreased need for human intervention or audits, and greater consistency across independent agent sessions over time.

January 22, 2026

January 22, 2026