Artificial intelligence has rapidly evolved from an academic pursuit to a core business tool. Companies now deeply want to integrate models like ChatGPT into their own secure systems. This desire stems from a non-negotiable need for data privacy, ensuring sensitive information remains entirely on internal networks. Yet the monumental size and computational demands of these models present a massive deployment hurdle. They demand resources often beyond what's readily available.

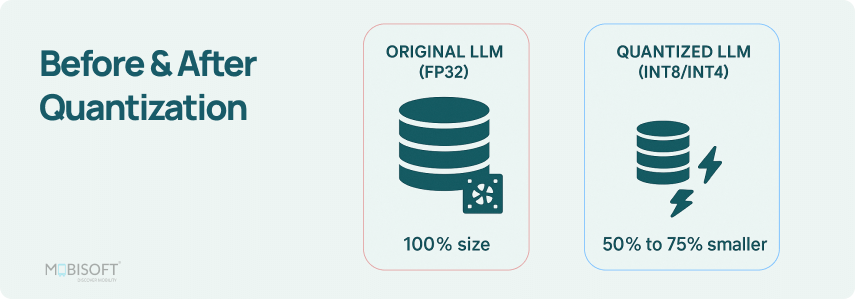

Enter quantization, a powerful technique that reduces the numerical precision of model parameters. This decreases memory usage and can improve inference speed. This article explores quantization in the context of LLMs: tracing its history, detailing the main types and formats, examining size benefits, understanding perplexity as a performance metric, discussing accuracy loss, and highlighting how quantization makes private LLM hosting viable.

Historical Context of Quantization

Origins of Quantization

Quantization as a concept has deep roots in digital signal processing (DSP) and data compression techniques. Initially, it aimed to convert continuous signals into a format suitable for computer storage and processing. The earliest examples of quantization can be traced back to the development of pulse-code modulation (PCM) in the 1930s, in which analog signals were represented in digital form by sampling and quantizing their amplitudes. As noted in the literature, "Quantization forms the core of essentially all lossy compression algorithms."

Evolution in AI

Then deep learning arrived. The 2010s saw neural networks growing not just in complexity but in sheer size, and their computational and memory demands grew with them. Researchers revisited those old DSP concepts. Pioneering work by researchers such as Courbariaux and Rastegari around 2016 showed something remarkable: low-precision weights could be used inside neural networks, making them smaller and faster without completely breaking their performance.

Adoption in LLMs

The transition from traditional models to LLMs highlighted the growing need for quantization. When models like Llama grew to billions of parameters, the theoretical became an urgent necessity. Quantization was no longer just an interesting optimization. It was the only realistic way to deploy these behemoths on accessible hardware.

Current Trends

The field is advancing at a very fast pace right now. We're seeing techniques like post-training quantization gain traction, promising not just smaller models, but smarter, more accurate quantized models. The focus has fully shifted to preserving quality, not just compressing size. It’s a fascinating evolution to watch.

Types of Quantization

Quantization techniques can be broadly categorized into several types, each with its unique methodologies and applications in optimizing LLMs. This includes widely used LLM quantization techniques applied across modern architectures.

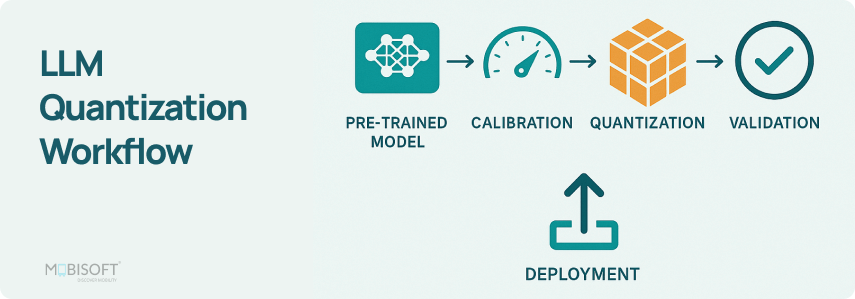

Post-Training Quantization (PTQ)

Post-training quantization is often the first method teams try. It happens after the model is fully trained. You simply convert those high-precision weights, like 32-bit floats, down to a more efficient 8-bit integer format. The great advantage here is speed. You can have a quantized model ready for deployment without the lengthy and expensive retraining process. The trade-off is usually a small accuracy dip, commonly cited as two to five percent. This is perfectly acceptable for a great many applications, especially for teams using quantization mainly to optimize inference.
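
To make the conversion concrete, here is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python. The function names and the sample weights are illustrative assumptions, not taken from any particular library; production toolkits add calibration data, per-channel scales, and packed storage on top of this idea.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the stored integers back to approximate float values."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.82, -1.27, 0.05, 0.4, -0.33]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, scale, max_error)
```

Each weight is now stored as one byte plus a shared scale, and the rounding error per weight is bounded by half the scale.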

Quantization-Aware Training (QAT)

Quantization-Aware Training takes a more thorough approach. QAT bakes the quantization process directly into the training loop. The model learns to adapt to the lower precision from the very beginning. It's a bit like training an athlete with ankle weights, so they feel incredibly light once removed. This method generally protects accuracy much better than PTQ, making it worth the extra effort for performance-critical deployments where quantization in neural networks must preserve performance.
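
The core mechanism is often described as "fake quantization": the forward pass rounds weights to the low-precision grid so the loss sees the rounding error, while the backward pass treats the rounding as if it were the identity (a straight-through estimator). The sketch below shows just those two pieces for a single scalar weight. The function names are hypothetical, and real frameworks implement this per tensor inside the autograd graph.

```python
def fake_quantize(weight, scale, qmin=-128, qmax=127):
    """Forward pass: quantize then immediately dequantize, staying in float."""
    q = max(qmin, min(qmax, round(weight / scale)))
    return q * scale


def straight_through_grad(weight, scale, upstream_grad, qmin=-128, qmax=127):
    """Backward pass: pass the gradient through the rounding unchanged,
    and zero it where the weight was clamped to the integer range."""
    inside_range = qmin <= weight / scale <= qmax
    return upstream_grad if inside_range else 0.0


# Illustrative values only.
seen_by_loss = fake_quantize(0.3, scale=0.25)
grad_in_range = straight_through_grad(0.3, 0.25, upstream_grad=2.0)
grad_clamped = straight_through_grad(100.0, 0.25, upstream_grad=2.0)
print(seen_by_loss, grad_in_range, grad_clamped)
```

The optimizer keeps updating a full-precision copy of the weight, while every forward pass evaluates the rounded version that will actually be deployed.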

Comprehensive Overview of Quantization Formats

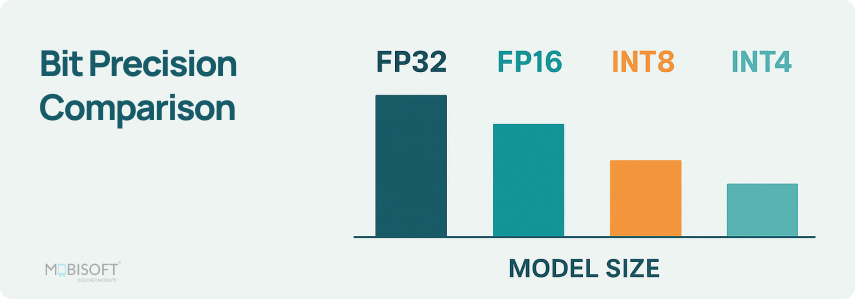

The choice of quantization format significantly impacts model performance, memory usage, and computational efficiency. Here's an in-depth look at the most important formats used in quantization in machine learning workflows:

INT8 (8-bit Integer)

INT8 quantization is the trusted workhorse for production systems. It cuts the model size by seventy-five percent compared to FP32, typically with less than a one percent accuracy drop. For example, a 70B parameter model that needs 280GB of memory in FP32 shrinks to a much more manageable 70GB. The hardware support across different vendors is also excellent.

Key characteristics:

- Memory reduction: 4x compared to FP32, 2x compared to FP16

- Minimal accuracy loss (typically <1%)

- Excellent hardware support across NVIDIA, AMD, and Intel GPUs

- Industry standard for production deployments in Large Language Model quantization
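
The memory figures above follow from simple arithmetic: parameter count times bits per weight. A small sketch, assuming decimal gigabytes and counting weights only (no KV cache, activations, or quantization metadata); the helper name is made up for this example.

```python
BITS_PER_WEIGHT = {"FP32": 32, "FP16": 16, "INT8": 8, "INT4": 4}


def weight_memory_gb(num_params, fmt):
    """Approximate storage for the weights alone, in decimal GB."""
    return num_params * BITS_PER_WEIGHT[fmt] / 8 / 1e9


for fmt in BITS_PER_WEIGHT:
    print(f"70B in {fmt}: {weight_memory_gb(70e9, fmt):.0f} GB")
```

Real deployments need headroom beyond these numbers, which is why a 4-bit 70B model is usually quoted as a range rather than a single value.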

FP16 (16-bit Floating Point)

FP16, or 16-bit floating point, offers a nice middle ground. It provides a two times reduction in memory and is a common baseline for inference. Modern GPUs handle these calculations natively with their Tensor Cores, which gives you a nice speed boost almost for free.

INT4 (4-bit Integer)

INT4 quantization provides aggressive 8x memory reduction compared to FP32, making large models accessible on consumer hardware. However, this comes with greater accuracy trade-offs. Several methods have emerged to optimize INT4 quantization, supporting the broader goals of AI model optimization techniques used in deployment.

GPTQ (Generative Pre-trained Transformer Quantization)

Uses layer-wise quantization with approximate second-order information to minimize output error. GPTQ processes weights in batches, calculating mean squared error (MSE) and updating weights to minimize it. The method is optimized for GPU inference and can achieve 3.25-4.5x speed improvements on various NVIDIA GPUs.

AWQ (Activation-aware Weight Quantization)

Unlike GPTQ, AWQ assumes not all weights are equally important. AWQ identifies ~1% of salient weight channels using activation statistics, then scales these channels up before applying uniform low-bit quantization to all weights. Scaling up the salient channels can reduce the quantization error.
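
A toy example can show why scaling helps. In the sketch below, one channel has a small weight but a large activation, so its rounding error dominates the output. Multiplying that weight by a factor `s` and dividing the matching activation by `s` leaves the exact result unchanged, but the rounding error on that channel is now divided by `s`. The numbers and the fixed `s = 2.0` are assumptions chosen for illustration; the actual method searches for scales using calibration data, and for any single weight the rounding can land either way.

```python
def quantize_row(weights, bits=4):
    """Symmetric round-to-nearest quantization with one scale for the row."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit
    step = max(abs(w) for w in weights) / qmax
    return [max(-qmax, min(qmax, round(w / step))) * step for w in weights]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# Channel 0: small weight, large activation, so it is the "salient" channel.
weights = [0.1, 1.0, -0.8, 0.45]
activations = [10.0, 0.1, 0.1, 0.1]
reference = dot(weights, activations)

# Plain round-to-nearest quantization.
plain_error = abs(dot(quantize_row(weights), activations) - reference)

# AWQ-style: scale the salient weight up and its activation down by s.
s = 2.0
scaled_weights = [weights[0] * s] + weights[1:]
scaled_activations = [activations[0] / s] + activations[1:]
awq_error = abs(dot(quantize_row(scaled_weights), scaled_activations) - reference)

print(plain_error, awq_error)
```

The trick works here because the largest weight in the row is unchanged, so the quantization step stays the same while the salient channel's error contribution shrinks.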

Performance comparison:

- AWQ typically maintains higher accuracy than GPTQ at the same bit width

- Memory usage for a 7B model: ~3.5GB (vs. 14GB in FP16)

- A 70B model requires only ~35-45GB with INT4 (vs. 140GB in FP16)

FP4 (4-bit Floating Point) and NF4 (4-bit NormalFloat)

FP4 represents a newer approach that uses floating-point representation at 4-bit precision. Compared to integer quantization, FP4 handles bell-shaped and long-tail distributions more flexibly, which is particularly advantageous for neural network weights.

NF4 (NormalFloat 4-bit)

NF4 uses information-theoretically optimal quantization, normalizing weights to fall within a specific range for more efficient representation. Key features include:

- Assuming weights follow a Gaussian distribution

- Using lookup tables for efficient dequantization

- Being integrated with bitsandbytes library for easy deployment

- Enabling fine-tuning of 65B models on a single 48GB GPU when used with QLoRA

- Combining with Double Quantization (DQ) for additional 0.4 bits per parameter savings.
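
The lookup-table idea can be sketched in a few lines. The code below builds a 16-entry codebook from quantiles of a standard normal distribution, stores each weight as a 4-bit index plus one absmax scale per block, and dequantizes by table lookup. This is a simplified illustration under stated assumptions: the codebook here is not the actual NF4 table shipped in bitsandbytes (which, among other differences, includes an exact zero), and the function names are hypothetical.

```python
from statistics import NormalDist


def build_codebook(levels=16):
    """Illustrative codebook: normal quantiles rescaled to [-1, 1]."""
    normal = NormalDist()
    quantiles = [normal.inv_cdf((i + 0.5) / levels) for i in range(levels)]
    largest = max(abs(q) for q in quantiles)
    return [q / largest for q in quantiles]


def quantize_block(weights, codebook):
    """Store each weight as the index of the nearest codebook entry."""
    absmax = max(abs(w) for w in weights) or 1.0
    indices = [
        min(range(len(codebook)), key=lambda i: abs(codebook[i] - w / absmax))
        for w in weights
    ]
    return indices, absmax


def dequantize_block(indices, absmax, codebook):
    """Dequantization is a table lookup followed by one multiply."""
    return [codebook[i] * absmax for i in indices]


codebook = build_codebook()
block = [0.02, -0.5, 0.13, 0.31, -0.07]  # hypothetical weights
indices, absmax = quantize_block(block, codebook)
restored = dequantize_block(indices, absmax, codebook)
print(indices, restored)
```

Because the codebook entries are denser near zero, where normally distributed weights cluster, the same 16 levels spend their precision where most weights actually live.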

Recent developments

NVIDIA's Blackwell architecture, powering the RTX PRO 6000 and B200, now integrates native FP4 tensor core support. This hardware advancement hints at potentially doubling performance compared to FP8 operations. Real-world benchmarks so far show a more modest gain, with FP4 achieving around 1.32 times the speed of FP8 on the same setup, all while preserving competitive accuracy levels. It’s a promising step for efficient inference.

FP8 (8-bit Floating Point)

FP8 has emerged as a sweet spot for many production deployments, offering near-lossless accuracy with a 2x memory reduction relative to FP16. Modern data center GPUs (H100, H200, B200) include native FP8 tensor cores that deliver up to 2x performance improvement compared to FP16. Many recent models are trained with FP8 support built in, enabling near-lossless quantization.

Real-world impact:

- Meta's Llama 3.3-70B with FP8: 99%+ quality recovery, 30% latency reduction, 50% throughput improvement

- Llama 3.2-90B with FP8: 10% latency reduction using half the number of GPUs

FP6 (6-bit Floating Point) and MXFP6

FP6 (6-bit floating point) represents an emerging middle ground between FP4 and FP8. Recent research with microscaling (MX) formats shows MXFP6 can offer better accuracy than MXFP4 while maintaining similar computational efficiency on certain hardware architectures.

Studies on large models (70B+ parameters) show mixed-precision strategies combining MXFP4 and MXFP6 can provide better accuracy than pure MXFP4 without sacrificing computational efficiency, making it increasingly relevant for AI model optimization techniques.

Sub-4-bit Quantization (2-bit and 3-bit)

While experimental, sub-4-bit quantization represents the frontier of extreme compression:

- 3-bit quantization: Can achieve 10-11x compression with acceptable accuracy for certain use cases

- 2-bit quantization: Achieves 16x compression but with significant accuracy degradation

- HQQ (Half-Quadratic Quantization): A calibration-free method that can quantize models to 2-bit in minutes (vs. hours for other methods)

Research shows that 70B models are less affected by aggressive quantization than smaller models: extremely low-bit quantization of 70B models remains somewhat usable, whereas 8B models at similar bit widths approach random-noise levels.

Mixed-Precision and Format-Specific Strategies

Advanced deployments often use mixed-precision approaches:

- Per-layer quantization: Different layers use different precisions based on sensitivity analysis

- Per-channel quantization: Different channels within layers can use different scales

- GGUF format: Optimized for CPU and Apple Silicon, supporting flexible per-layer quantization (Q4_K_M, Q5_K_M variants)

- EXL2 format: Optimized for low-latency inference with customizable bit allocations
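
The benefit of per-channel scales is easy to see when channels have very different ranges. In this sketch (made-up values, INT8 for simplicity), a single per-tensor scale is dictated by the large channel and wipes out most of the detail in the small one, while a separate scale per channel preserves it.

```python
def quantize_dequantize(values, scale, qmax=127):
    """Round to the integer grid defined by `scale`, then map back to float."""
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]


def mean_abs_error(original, restored):
    return sum(abs(a - b) for a, b in zip(original, restored)) / len(original)


# Two hypothetical output channels with very different magnitudes.
channels = [
    [0.011, -0.02, 0.007, 0.015],
    [1.9, -2.54, 0.8, 1.1],
]

# Per-tensor: one scale derived from the global maximum.
tensor_scale = max(abs(v) for row in channels for v in row) / 127
per_tensor = [quantize_dequantize(row, tensor_scale) for row in channels]

# Per-channel: each row gets a scale from its own maximum.
per_channel = [
    quantize_dequantize(row, max(abs(v) for v in row) / 127) for row in channels
]

small_channel_error_tensor = mean_abs_error(channels[0], per_tensor[0])
small_channel_error_channel = mean_abs_error(channels[0], per_channel[0])
print(small_channel_error_tensor, small_channel_error_channel)
```

The cost is one extra scale value per channel, which is negligible next to the weights themselves.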

Bit-width Considerations

Choosing the appropriate bit-width is a core part of the quantization strategy. This decision directly shapes both model performance and resource demands. Reducing the bit-width dramatically shrinks model size, sure, but it often introduces quantization noise that can degrade accuracy. That’s why finding the right equilibrium between size and performance is absolutely vital for successful deployment using LLM quantization techniques.

General guidelines:

- 8-bit (INT8/FP8): Production-ready, minimal accuracy loss, 4x memory reduction vs. FP32 (2x vs. FP16)

- 4-bit (INT4/NF4/FP4): Aggressive compression, 8x memory reduction vs. FP32 (4x vs. FP16), acceptable accuracy for most use cases

- 3-bit: Experimental, 10x+ compression, noticeable quality degradation

- 2-bit: Research-level, extreme compression, significant accuracy loss

Size Benefits of Quantization

Quantization offers significant size benefits for deploying Large Language Models, particularly in scenarios where computational resources are limited, and model compression in deep learning is essential.

Memory Reduction

Quantization can achieve remarkable memory savings. Consider the following real-world examples:

Example 1: Llama 3.1 70B

- Original FP16 size: ~140GB (model weights only)

- With overhead and KV cache: ~178GB total

- INT8 quantization: ~70GB (50% reduction)

- INT4 (AWQ) quantization: ~35-45GB (68-75% reduction)

INT4 enables deployment on two NVIDIA RTX 5090 32GB GPUs or a single RTX PRO 6000 96GB.

Example 2: Llama 3.1 405B

- Original FP16 size: ~810GB

- FP8 quantization: ~405GB (50% reduction)

- FP8 reduces the requirement from 16x H100 80GB to 8x H100 80GB

- INT4 quantization: ~202GB (75% reduction)

Real-world Applications and Cost Savings

Organizations are leveraging quantized models across various applications using modern AI model quantization approaches:

- Customer Service Chatbots: Certain open-source small language models can generate responses with quality competitive to OpenAI LLMs, provide comparable yet more reliable latency performance, and reduce costs by up to 29%.

- Enterprise Private Hosting: A startup running millions of daily chatbot interactions can save 50–70% by switching from per-token cloud pricing to GPU-leased instances.

  - Infrastructure: 4x RTX 5090 or 1x RTX PRO 6000 Blackwell configuration.

  - Enables: Complete data privacy, no external API dependencies.

  - ROI: 9-12 months for hardware investment vs. API costs.
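
A break-even estimate like the one above comes down to dividing the hardware cost by the monthly savings. The sketch below uses purely hypothetical figures to show the calculation; substitute your own hardware quote, API bill, and running costs (power, hosting, maintenance).

```python
def break_even_months(hardware_cost, monthly_api_cost, monthly_running_cost):
    """Months until self-hosting pays for itself, or None if it never does."""
    monthly_savings = monthly_api_cost - monthly_running_cost
    if monthly_savings <= 0:
        return None
    return hardware_cost / monthly_savings


# Hypothetical numbers, for illustration only.
months = break_even_months(
    hardware_cost=40_000,
    monthly_api_cost=5_000,
    monthly_running_cost=1_000,
)
print(months)
```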

Storage and Transfer Efficiency

Reduced model sizes not only aid in memory management but also facilitate easier storage and transfer, with benefits tied to model compression in deep learning:

- Model distribution: INT4 quantized 70B model (~40GB) can be downloaded in 1-2 hours vs. 8-10 hours for the FP16 version

- Version control: Teams can maintain multiple model versions and variants without overwhelming storage systems

- Edge deployment: Quantized models enable deployment on edge devices and locations with limited bandwidth

- A/B testing: Faster iteration cycles when testing multiple model variants

- Disaster recovery: Reduced backup storage requirements and faster recovery times

Perplexity as a Performance Metric

Perplexity is a crucial metric used to evaluate the performance of language models. It measures how well a probability model predicts a sample and is particularly important in the context of LLMs.

Understanding Perplexity

Perplexity essentially gauges the uncertainty in a language model’s predictions. A lower value signals stronger predictive performance, meaning the model operates with greater confidence. Mathematically, it’s the exponential of the average negative log-likelihood over a sequence of tokens. You can imagine it as the typical number of equally likely choices the model faces when guessing the next token. For instance, a perplexity of 20 implies the model is about as uncertain as if it had to select from twenty equally likely options.
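
As a minimal sketch of that definition, the function below computes perplexity from the probabilities a model assigned to the tokens that actually occurred. The input lists are invented for illustration; in practice these probabilities come from running the model over an evaluation corpus.

```python
import math


def perplexity(token_probs):
    """exp of the average negative log-likelihood of the observed tokens."""
    negative_log_likelihoods = [-math.log(p) for p in token_probs]
    return math.exp(sum(negative_log_likelihoods) / len(negative_log_likelihoods))


# A model that always spreads its belief evenly over 20 options.
uniform = perplexity([1 / 20] * 50)

# A model that is usually confident about the correct token.
confident = perplexity([0.9, 0.8, 0.95, 0.7])

print(uniform, confident)
```

The uniform case comes out at approximately 20, matching the "twenty equally likely options" intuition, while the confident model scores close to 1.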

Importance in Quantization

When evaluating quantized models, perplexity serves as a key indicator of how much performance has been preserved after quantization. A significant increase in perplexity post-quantization suggests that the model has lost considerable predictive power, which may not be acceptable for many applications. For this reason, perplexity is often used as a benchmark during the development and evaluation of LLM quantization techniques.

Examples of Perplexity Scores

Naturally, different models will show different perplexity scores depending on their training data and how they were quantized. A top-tier LLM might start with a perplexity of around 20. After applying quantization, that number could drift up to 25. This movement does signal a gentle decline in performance. Yet for countless real-world applications, this model remains perfectly capable and effective. It’s about finding the right tool for the job, not always chasing a perfect score.

Quantization impact on perplexity (typical ranges):

- FP32 baseline: 20.0

- FP16: 20.0-20.1 (negligible impact)

- INT8: 20.2-20.5 (minimal impact)

- INT4 (GPTQ): 21.0-22.0 (acceptable for most uses)

- INT4 (AWQ): 20.5-21.5 (better than GPTQ)

- NF4: 21.0-22.0 (similar to GPTQ)

- INT3: 23.0-25.0 (noticeable degradation)

- INT2: 28.0-35.0 (significant degradation)

Accuracy Loss in Quantized Models

While quantization offers substantial benefits, it is essential to understand the potential for accuracy loss as a trade-off.

Understanding Accuracy Loss

Accuracy loss refers to the decline in model performance caused by reduced precision in quantized parameters. The extent of this loss can vary widely depending on the quantization technique employed. Quantitative analysis shows that models utilizing QAT can achieve better accuracy recovery compared to those using PTQ.

Quantitative Analysis and Benchmarks

Research indicates that quantization approaches like PTQ can lead to accuracy drops of 2-5% in many scenarios, while QAT can mitigate this loss significantly, often keeping accuracy within 1-2% of the original model. For example, a BERT model quantized using QAT might only experience a 1% decrease in accuracy compared to its full-precision counterpart.

Detailed accuracy comparisons (Llama 3 8B on MMLU benchmark):

- FP16 baseline: 65.5% accuracy

- INT8: 65.3% accuracy (-0.2%, negligible)

- GPTQ INT4: 63.8% accuracy (-1.7%)

- AWQ INT4: 64.2% accuracy (-1.3%)

- NF4 (bitsandbytes): 63.9% accuracy (-1.6%)

- HQQ INT4: 64.0% accuracy (-1.5%)

- INT3: 60.5% accuracy (-5.0%)

Model size impact on quantization tolerance:

- 70B models are more resilient to quantization than smaller models

- A 70B model at 4-bit can outperform a 13B model at FP16 on many tasks

- Larger models have more redundancy, making them more “quantization-friendly.”

- Extremely low quantization (2-bit) of 70B models remains somewhat usable, while 8B models at 2-bit approach random performance.

Mitigation Strategies

To minimize the impact of quantization on accuracy, several strategies can be employed:

- Fine-Tuning: Fine-tuning the model after it has been quantized lets it recalibrate and adapt to its new lower-precision reality. This works wonders when paired with QLoRA, a method that makes fine-tuning remarkably parameter-efficient.

- Mixed Precision Training: Employing a combination of precision levels, such as using FP16 for certain layers while keeping others in higher precision, can enhance performance. Critical attention layers and output layers often benefit from higher precision.

- Regularization Techniques: Applying techniques like weight clipping or gradient scaling during training encourages a more robust model. This inherent stability helps the model maintain its accuracy much better throughout the quantization process. It’s a bit like preparing for a challenge in advance.

Viability of Private LLM Hosting Through Quantization

The hosting of LLMs poses significant challenges due to their extensive resource requirements. However, quantization has opened new avenues for private hosting, especially as organizations look for more secure AI setups.

Challenges of Hosting LLMs

Honestly, deploying LLMs has traditionally demanded immense resources. We are talking about stacks of high-memory GPUs and enormous storage solutions. For smaller teams or startups, that level of infrastructure isn't just daunting, it's often completely out of reach. The cost can be prohibitive, turning a powerful AI capability into a distant fantasy. This is the fundamental problem that quantization seeks to solve, particularly for teams that need secure internal deployment with strict data control.

Role of Quantization

Quantization alleviates many challenges associated with hosting LLMs by reducing memory and computational needs. With quantized models, organizations can run sophisticated language applications on less powerful hardware, making it feasible to host LLMs privately.

Future of Private Hosting

As quantization technology progresses, the shift toward private LLM hosting will almost certainly pick up speed. We're observing continuous innovations that refine model performance while steadily chopping away at resource demands. This dual progress makes it simpler for organizations to harness LLMs for their unique needs. Key developments point toward hardware-specific optimizations for newer formats like FP4 and FP6. Mixed-precision strategies are getting smarter and more nuanced. Automated quantization pipelines are streamlining what was once a complex implementation hurdle, a welcome reduction in engineering overhead. And we're seeing quantized LLMs begin to pop up on edge devices, even mobile and IoT platforms.

Conclusion

Quantization is a transformative approach for large language models. It delivers profound benefits in memory efficiency and makes deployment a feasible goal, especially as teams explore AI model quantization as a practical path to optimization. Grasping its history, the various methods, the tangible size advantages, and the implications for perplexity and accuracy allows teams to better navigate the trade-offs involved in quantization in LLM workflows. The technology will keep evolving, without a doubt. This progress means private LLM hosting will become increasingly accessible, effectively democratizing powerful AI applications for organizations regardless of their scale. It turns a formidable challenge into a practical reality.

The real-world cases and benchmarks we've examined show something important. Quantization isn't merely an academic exercise; it's a practical requirement for broadening access to large language models. Startups are reporting 84% cost savings, and established enterprises are creating independent AI systems. This is all possible because reducing model size with quantization allows cutting-edge models to operate on cost-effective hardware while still delivering solid performance.

Looking forward, quantization methods keep improving. Think about FP4, FP6, and mixed-precision approaches evolving steadily. Pair these with hardware advances like NVIDIA's Blackwell architecture, and we can expect even better efficiency. For organizations adopting quantization now, it’s a strategic move. They’ll be able to harness AI's complete capabilities without compromising on budget, privacy, or tailor-made solutions. That’s a compelling advantage.

Key Takeaways

- Quantization strategically trades numerical precision for massive gains in memory efficiency and inference speed, making it essential for modern LLM deployment.

- Selecting the correct format, like INT8 for stability or NF4 for fine-tuning, is crucial and depends heavily on your specific hardware and deployment requirements.

- While larger models generally withstand aggressive quantization better, always validate performance with task-specific metrics beyond just perplexity.

- Techniques like AWQ and GPTQ intelligently preserve critical weights to maintain higher accuracy at extremely low bit widths.

- Quantization-aware training prepares models for lower precision from the start, often yielding superior results compared to post-training methods.

- The technique makes private, on-premises deployment of large models financially viable by drastically reducing hardware requirements.

- Emerging formats like FP4 and FP6, supported by new hardware, promise even greater efficiency without significant quality loss.

- A successful deployment often involves mixed-precision strategies, using different bit widths for various parts of the model.

- Ultimately, quantization is no longer an optional optimization but a fundamental step for practical LLM deployment.

FAQs

What hardware considerations are most important when deploying a quantized model?

Your choice of GPU dictates the optimal format. NVIDIA's Blackwell architecture unlocks FP4's potential, while older cards excel with INT8. Memory bandwidth often becomes the true bottleneck, not just VRAM capacity. Align your quantization strategy with your hardware's native support for specific number formats to maximize throughput.

How does quantization impact fine-tuning and ongoing model adaptation?

Quantized models can be fine-tuned efficiently using methods like QLoRA, which keep most weights frozen. However, the process requires careful management of learning rates and gradient scaling. Post-quantization fine-tuning often becomes essential to recover accuracy, especially for task-specific deployments where precision is critical for performance.

Can quantized models be combined with other optimization techniques?

Absolutely. Quantization pairs effectively with pruning, knowledge distillation, and efficient attention mechanisms. This layered approach compounds benefits. For instance, a pruned model quantizes more effectively due to reduced parameter redundancy. The key is applying techniques in the correct order, with pruning before quantization, to maintain stability.

What are the often-overlooked trade-offs of 4-bit quantization?

While memory savings are dramatic, expect increased inference latency on some hardware due to dequantization overhead. The noise introduced can also subtly alter the model's "personality," sometimes reducing creativity in exchange for reliability. For complex reasoning tasks, this trade-off requires careful evaluation against your quality thresholds.

How do we validate that a quantized model is performing correctly in production?

Beyond perplexity, monitor task-specific accuracy metrics and latency distributions. Implement canary deployments to compare outputs against your FP16 baseline. Watch for drift in user satisfaction scores, as quantized models can sometimes produce drier, more deterministic responses that impact user experience even when technically accurate.

What emerging quantization trends should engineering teams watch?

Format hybridization is gaining momentum, like mixing FP8 for attention layers with INT4 for MLPs. Research into 1-bit and 2-bit methods is accelerating, though still experimental. The real frontier is dynamic quantization that adjusts precision per-token based on complexity, promising optimal efficiency without manual configuration.

December 3, 2025
