Enterprise operations have long relied on static interfaces. Dashboards present data but require interpretation. Forms demand structured input, creating friction. This dynamic is changing. A more natural modality is reasserting itself within enterprise AI strategy: through Voice AI, the human voice is returning to the foreground. This is not a step backward, but a deliberate advance toward a higher-bandwidth way of working in enterprise environments.

In 2026, speech interfaces are progressing from peripheral assistive features to central business infrastructure in the form of enterprise voice AI solutions. The compelling reason is operational throughput. Measured by the time it takes to complete a task, spoken interaction begins to outpace traditional dashboards, text-based chat, and form-heavy systems. It serves as a compression layer for complex intentions inside enterprise workflows.

For leaders evaluating this transition, the essential understanding is foundational. The value resides in velocity, not merely convenience. Early clarity on this point separates strategic integration of Voice AI solutions from mere experimentation.

To align Voice AI adoption with long-term business goals, many enterprises start with structured AI strategy consulting to define architecture, governance, and measurable execution outcomes.

Why Voice AI Is the Fastest Interface for Enterprise Workflows

Consider speed as the primary metric for a business interface. This is the essential reframe. Modern AI voice assistants are engineered for operational velocity, not conversational charm. Technical advancements have reached a critical point. Latency improvements, combined with streaming inference and real-time reasoning, now support production workloads. Voice functions as a compression layer for complex workflows. It condenses multi-step procedures into efficient, spoken commands.

This capability removes measurable friction in critical areas. In field operations, technicians issue updates and request parts hands-free. In support centers, agents resolve cases through natural dialogue while Voice AI for customer support documents the actions. Logistics and internal IT see similar gains. The spoken word bypasses cumbersome navigation. It connects intention to execution directly.

The narrative here is not about friendly assistants. It is about building a faster, more fluid connection to operational systems with Conversational AI for enterprises. Speed becomes the feature, and everything else follows from that principle.

How 2026 Enterprise Voice AI Solutions Actually Work

Legacy voice systems and modern agents share almost no DNA. The former are rigid pathways. The latter are adaptive partners built on speech recognition AI and contextual intelligence. Confusing them is a costly error. The difference lies in a foundational change from programmed response to contextual reasoning supported by natural language processing voice AI.

Persistent Context Memory

An AI agent in 2026 can recall. It remembers details from prior interactions, whether a user’s system access level or an unresolved ticket from last week. This continuity lets LLM-powered voice assistants sustain a seamless dialogue, so that each conversation builds on the last.

Backend Tool Execution

Critically, these agents execute by working with software tools. Once speech-to-text AI has transcribed a request, they can query a database, create a service ticket, or draft an email. The voice command becomes the trigger for a sequenced action in backend systems. Speech is the interface for orchestration, and text-to-speech AI pipelines speak the result back to the user.
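To make the idea of a voice command triggering a backend action concrete, here is a minimal sketch of a tool registry. It assumes the speech has already been transcribed and mapped to an intent name with parameters. The intent names, the `tool` decorator, and both stand-in functions are hypothetical and do not represent any particular vendor's API.

```python
from typing import Callable, Dict

# Hypothetical registry mapping intent names to backend actions.
TOOLS: Dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Register a function as a callable backend tool under an intent name."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("create_ticket")
def create_ticket(summary: str) -> str:
    # Stand-in for a real ticketing system call (assumption).
    return f"ticket created: {summary}"


@tool("draft_email")
def draft_email(to: str, subject: str) -> str:
    # Stand-in for a real mail service call (assumption).
    return f"draft to {to}: {subject}"


def execute(intent: str, **slots: str) -> str:
    """Dispatch a recognized intent to its registered tool."""
    if intent not in TOOLS:
        return f"no tool registered for intent '{intent}'"
    return TOOLS[intent](**slots)


if __name__ == "__main__":
    print(execute("create_ticket", summary="VPN down"))
```

Keeping the registry separate from the speech layer means new actions can be added without touching transcription or intent recognition.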

Unified Data Access

They operate with a unified view. An agent can navigate your CRM, ERP, and ticketing system as a single landscape during one conversation. It pulls customer history alongside inventory levels and logistics data to form a complete picture. This context stitching is what enables true decision support in Voice AI for enterprise operations.

Adaptive Reasoning Engine

The old model followed a decision tree. It was a predictable, often frustrating, maze of prompts. The new model operates in adaptive reasoning loops supported by real-time voice AI. It interprets intent, evaluates options, executes an action, and listens for correction. It is less a call router and more a collaborative engine enabled by generative voice AI. This fluidity is what makes it an agent, not a bot.
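The loop described above (interpret, evaluate, execute, listen for correction) can be sketched in a few lines. This is an illustration under stated assumptions, not a production design: a toy keyword matcher stands in for the intent model, and the intent names and the `ReasoningLoop` class are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Turn:
    utterance: str
    action: Optional[str] = None  # action executed on this turn, if any


@dataclass
class ReasoningLoop:
    history: List[Turn] = field(default_factory=list)

    def interpret(self, utterance: str) -> Optional[str]:
        # Toy keyword matcher standing in for an LLM intent model (assumption).
        text = utterance.lower()
        if "undo" in text or "cancel" in text:
            return "undo_last"
        if "reset" in text and "password" in text:
            return "reset_password"
        if "ticket" in text:
            return "log_ticket"
        return None

    def step(self, utterance: str) -> str:
        intent = self.interpret(utterance)
        if intent is None:
            # Unclear intent: ask instead of guessing.
            self.history.append(Turn(utterance))
            return "clarify: could you rephrase that?"
        if intent == "undo_last":
            # Listen for correction: revert the most recent executed action.
            last = next((t for t in reversed(self.history) if t.action), None)
            self.history.append(Turn(utterance))
            if last is None:
                return "nothing to undo"
            undone, last.action = last.action, None
            return f"reverted: {undone}"
        self.history.append(Turn(utterance, action=intent))
        return f"executed: {intent}"


if __name__ == "__main__":
    loop = ReasoningLoop()
    print(loop.step("Please reset my password"))
    print(loop.step("No, undo that"))
```

Unlike a decision tree, the loop has no fixed path: each utterance is interpreted against the conversation so far, and a correction can arrive at any turn.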

Teams designing production-grade voice systems often rely on proven AI solutions for enterprises to ensure model orchestration, security, and scalability across operational workflows.

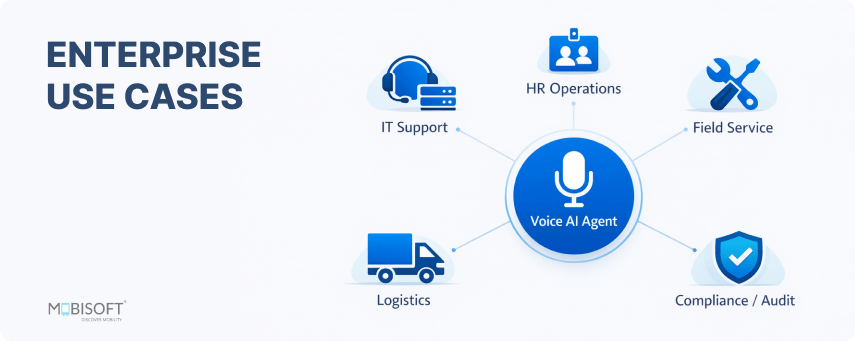

Enterprise Use Cases for Voice AI in Operations

Adoption patterns reveal a clear truth. The initial deployment gravity for speech agents is internal, not external. Customer-facing applications generate discussion, but internal Voice AI workflows generate results. This is where complexity is high, processes are defined, and the ROI of Voice AI for enterprise environments is unequivocal.

IT and HR Automation

IT and HR support are proving to be fertile ground for voice automation for business. Employees already articulate problems verbally. An agent that can authenticate a user, understand their issue, execute a password reset, or log a ticket in the system compresses resolution time from minutes to seconds. The gain is pure productivity enabled by enterprise voice AI solutions.

Field Service Voice AI

In logistics and field service, voice interfaces act as a force multiplier. A warehouse lead can coordinate inventory checks and updates while their hands and eyes are occupied. A technician can request schematics, report completion, and order follow-up parts through narration. The workflow remains uninterrupted.

Automated Audit Records

Another quiet area of scaling is regulated procedures that use AI-powered voice automation. Agents guide staff through sensitive protocols like safety checks, audit questions, and clinical steps. At the same time, they automatically generate timestamped records, supporting compliance and freeing staff to focus on the task.

For practical implementation patterns, this AI voice assistant integration guide explores real-world voice assistant use cases across enterprise workflows.

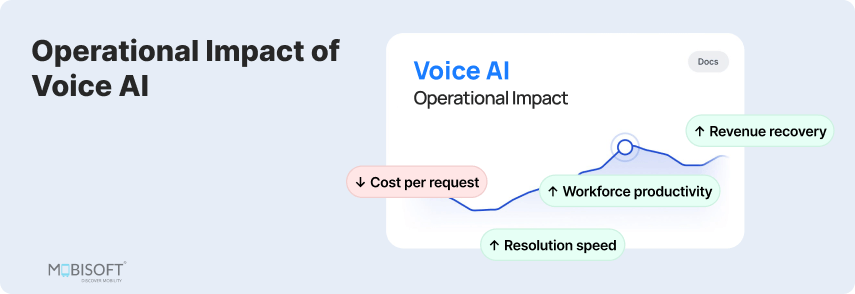

The Business Math Behind Enterprise Voice AI ROI

The investment case moves beyond vague promises of efficiency. It rests on specific, measurable financial mechanics. True ROI emerges not from labor displacement, but from intelligent labor multiplication powered by enterprise AI automation. The math focuses on three key pillars.

Lower Cost Per Request

Every service call, IT ticket, or field report has a baseline cost. Voice agents dramatically reduce this expense. They handle concurrent interactions, compress talk and resolution times, and operate continuously. Voice AI solutions therefore directly attack queue backlogs and provide after-hours coverage without a proportional cost increase.
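The "without a proportional cost increase" point follows from simple arithmetic: a fixed platform cost is spread over more requests as volume grows. The sketch below shows the calculation. All figures are hypothetical placeholders chosen for illustration, not data from any deployment, and the function name is an assumption.

```python
def cost_per_request(
    fixed_monthly_cost: float,
    variable_cost_per_request: float,
    monthly_requests: int,
) -> float:
    """Blended cost of one request: amortized fixed cost plus variable cost."""
    if monthly_requests <= 0:
        raise ValueError("monthly_requests must be positive")
    return fixed_monthly_cost / monthly_requests + variable_cost_per_request


if __name__ == "__main__":
    # Hypothetical figures: 10,000 fixed per month, 0.50 variable per request.
    for volume in (5_000, 20_000):
        print(volume, cost_per_request(10_000, 0.50, volume))
```

With these placeholder numbers, quadrupling volume from 5,000 to 20,000 requests lowers the blended cost from 2.50 to 1.00 per request, because only the variable portion scales with volume.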

Faster Revenue Cycles

Speed directly impacts revenue. In Voice AI for customer support, faster resolution reduces call abandonment and cart abandonment. In logistics, quicker issue resolution keeps shipments moving. Voice agents mitigate operational friction that silently bleeds revenue. They convert previously lost moments into completed transactions.

Scalable Efficiency Gains

The most significant figure is not the initial saving. It is the compounding return as workflows become increasingly autonomous with Conversational AI for enterprises. An agent handles the full process from intake to system update to resolution logging. As a result, the efficiency gain multiplies across the workflow chain, creating a scalable leverage point.

The initial pilot might justify itself through cost-per-interaction. The scaled deployment, however, validates itself through systemic throughput and reclaimed revenue. The financial narrative moves from simple cost avoidance to active value generation. This is how the business case quietly proves itself. The numbers cease to be projections and become embedded performance facts. Many organizations extend ROI further with AI customer support automation to streamline enterprise support workflows with intelligent voice and agent systems.

Inside a Reliable Voice AI Stack

Technical credibility here is non-negotiable. Reliability does not spring from a single advanced model. It is engineered through a deliberate, layered architecture. Each component has a distinct role, and the integration between them is what prevents failure.

- The foundation is a dual-component capture system. It consists of high-accuracy Automatic Speech Recognition (ASR) to convert audio to text, and a separate, dedicated reasoning engine to interpret intent. They are different systems for different tasks. This separation allows each to be optimized and updated independently.

- A memory layer then enters the process, providing conversational persistence and user-specific context. This is what turns a reactive query into a coherent dialogue.

- Next, the critical piece: the tool router and execution controller. This component functions as a disciplined orchestrator. It validates permissions, sequences actions against backend systems like CRM or ERP, and prevents uncontrolled automation chains.

- Perhaps the most practical architectural decision now is the hybrid edge-cloud model. Sensitive audio processing can occur on-site to ensure privacy and slash latency, while complex reasoning taps into cloud-scale models. This split delivers both speed and sophistication.

- Ultimately, reliability is a product of this intentional structure. It is the structural design that contains errors and ensures actions are accurate, authorized, and auditable.
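The tool router and execution controller described in this list can be sketched as follows. This is a simplified illustration, assuming role-based permissions and a fixed cap on chain length as the guard against uncontrolled automation. The `ToolRouter` class, the role names, and the action names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class ToolRouter:
    permissions: Dict[str, Set[str]]  # role -> tools that role may run
    max_chain_length: int = 3         # cap on actions per spoken request
    audit_log: List[str] = field(default_factory=list)

    def run_chain(self, role: str, actions: List[str]) -> List[str]:
        # Guard 1: refuse chains longer than the configured cap.
        if len(actions) > self.max_chain_length:
            self.audit_log.append(f"rejected chain of {len(actions)} for {role}")
            raise ValueError("action chain exceeds allowed length")
        # Guard 2: validate every action before executing any of them.
        allowed = self.permissions.get(role, set())
        denied = [a for a in actions if a not in allowed]
        if denied:
            self.audit_log.append(f"denied {denied} for {role}")
            raise PermissionError(f"{role} may not run: {', '.join(denied)}")
        results = []
        for action in actions:
            # A real controller would call the backend system here (assumption).
            self.audit_log.append(f"{role} ran {action}")
            results.append(f"{action}: ok")
        return results


if __name__ == "__main__":
    router = ToolRouter({"technician": {"order_part", "close_job"}})
    print(router.run_chain("technician", ["order_part", "close_job"]))
```

Validating the whole chain up front avoids a half-executed sequence when a later step turns out to be unauthorized, and the audit log records rejections as well as successes.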

The model choice matters, but the architecture matters more. It is what makes a prototype trustworthy. At scale, enterprises benefit from an enterprise AI agent platform that supports multi-LLM orchestration, secure tool routing, and end-to-end agent execution.

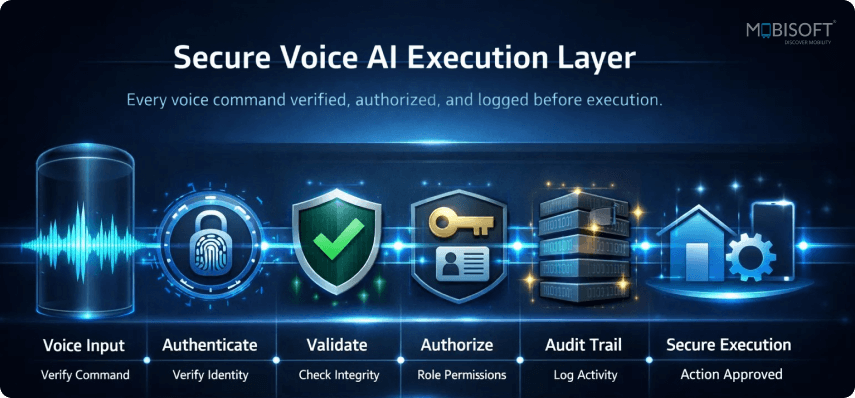

Security Risks of Voice Automation

Voice agents introduce execution-level risk, not merely data privacy concerns. A spoken command can initiate actions that bypass the deliberate friction of clicks, forms, and approvals. This creates a novel vulnerability vector that many enterprises have not yet mapped.

Bypassed Validation Risk

Traditional systems are built with procedural speed bumps. Menus, confirmation screens, and required fields all serve as implicit validation checks. Voice interfaces, designed for speed, intentionally strip these away. The very efficiency they create opens a potential pathway for unchecked instruction.

Silent Execution Errors

The failure modes are subtle. Misheard intent, a partially captured instruction, or an ambiguous command can trigger a perfectly executed but fundamentally wrong workflow. An agent might correctly hear "approve invoice 501" and execute it, without catching the user's hesitant tone or subsequent, verbalized doubt. The system works as designed, yet the outcome is flawed.

Mid-Dialogue Authentication

Mitigation requires new architectural guardrails. This necessitates conversational permission layers. These are systems that confirm high-stakes actions, re-verify user authority mid-dialogue, and maintain a clear audit trail of intent versus execution. An action-validation pipeline becomes non-optional, not for user experience, but for operational integrity. The goal is to preserve speed while installing invisible, verbal checkpoints. This is the balance that defines secure deployment. Many are exposed without realizing the scope. Proactive design is the only defense.
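A conversational permission layer of this kind might look like the sketch below. It assumes a fixed set of high-stakes actions and a simple yes/no confirmation. Real deployments would likely add re-authentication and richer intent matching. The `PermissionLayer` class and the action names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical set of actions that require spoken confirmation.
HIGH_STAKES = {"approve_invoice", "change_access"}


@dataclass
class PermissionLayer:
    pending: Optional[str] = None
    audit: List[Dict[str, str]] = field(default_factory=list)

    def _log(self, user: str, intent: str, outcome: str) -> None:
        # Record intent and outcome separately so they can be compared later.
        self.audit.append({"user": user, "intent": intent, "outcome": outcome})

    def request(self, user: str, action: str) -> str:
        if action in HIGH_STAKES:
            # Hold the action and ask for a verbal checkpoint first.
            self.pending = action
            self._log(user, action, "awaiting_confirmation")
            return f"Please confirm: {action}?"
        self._log(user, action, "executed")
        return f"done: {action}"

    def confirm(self, user: str, reply: str) -> str:
        if self.pending is None:
            return "nothing to confirm"
        action, self.pending = self.pending, None
        # Anything other than an explicit yes cancels the held action.
        if reply.strip().lower() in {"yes", "confirm"}:
            self._log(user, action, "executed")
            return f"done: {action}"
        self._log(user, action, "cancelled")
        return f"cancelled: {action}"


if __name__ == "__main__":
    layer = PermissionLayer()
    print(layer.request("ana", "approve_invoice"))
    print(layer.confirm("ana", "wait, no"))
```

Treating every reply except an explicit confirmation as a cancellation is a deliberately conservative choice: a hesitant or ambiguous answer stops the action rather than letting it through.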

Architectures like these are often accelerated through custom AI agent development that combines reasoning, memory, and tool execution frameworks for enterprise use.

From Voice Assistants to Action Engines

A clear market split is emerging, one that will define procurement and deployment for years. On one side, consumer-style voice tools focus on answering questions and completing simple tasks. On the other, enterprise voice AI solutions are built for more complex work such as authorized execution. This distinction is fundamental because firms are not buying assistants. They are investing in action engines that integrate directly with core systems like ERP, CRM, and logistics platforms. What defines this mission-critical category? It is a strict focus on reliability, auditability, and measurable workflow completion.

- These engines operate within strict governance boundaries. They confirm permissions, validate context, and leave a detailed audit trail of intent and action.

- This is reshaping expectations. Procurement will increasingly prioritize security certifications and performance SLAs over personality traits.

- Compliance will demand transparency into the decision chain, not just the final output.

- Pricing models will align with business value and transaction volume, not user seats.

The future of Voice AI belongs to voice as a disciplined, integrated execution layer. The era of the friendly but peripheral assistant is quietly closing. Moving from simple assistants to execution engines requires specialized AI chatbot and voice agent development focused on tool use, permissions, and real business actions.

The Future of Voice AI for Enterprise Operations

The integration of voice prioritizes velocity in environments where latency, whether in decision cycles or service resolution, carries a tangible cost. This transition positions speech not as another channel to manage, but as a foundational layer for execution enabled by Voice AI for enterprise operations. It connects human cognition directly to system action through Voice AI, removing procedural debris that slows operations.

The advantage for early adopters is already materializing. Voice allows tasks to be completed faster, eliminating friction and boosting focus. This is the quiet, compounding return on strategic foresight that enterprise voice AI solutions deliver. The organizations that build this capability now are not just adopting a technology. They are fundamentally resetting the speed at which their business can operate and respond. The future belongs to those who execute not just with precision, but with pace. Real-time Voice AI, understood and implemented as an engine, provides exactly that.

Key Takeaways on Voice-Based Enterprise Automation

- Voice agents are now an operational execution layer, not a communication channel. Their value is the speed that voice-based enterprise automation enables.

- Enterprises are deploying authoritative action engines that integrate directly with core systems like ERP and CRM using Voice AI integration services.

- Real adoption gravity is internal, within IT, HR, and logistics, where reducing procedural friction with Voice AI workflows delivers immediate and measurable ROI.

- The financial model compounds over time, evolving from cost savings to revenue recovery and multiplied workforce capacity through enterprise AI automation.

- Reliability is not about a single model; it is a product of deliberate architecture in enterprise voice AI development, featuring hybrid processing and strict orchestration.

- Voice introduces a unique execution risk, bypassing traditional digital friction. This demands new security frameworks built for spoken command in Voice AI software for enterprises.

- Modern agents are defined by memory and tool use, enabling them to perform tasks, which separates them from legacy bots and traditional AI voice assistants.

- Early integration builds a compounding advantage. It sets a faster operational tempo aligned with Voice AI trends 2026 that becomes deeply embedded and difficult for competitors to replicate.

Frequently Asked Questions

Is the ROI for speech agents primarily about replacing human workers?

Only to an extent. The most sustainable financial model focuses on labor multiplication, not displacement, supported by Voice AI solutions. Agents handle routine tasks, allowing your team to focus on complex problem-solving. This improves both throughput and job satisfaction. The true return compounds as the system manages entire workflows, from initiation to documentation, freeing significant operational capacity.

Which industries are adopting this technology most rapidly?

We see especially strong adoption of Voice AI in fields with complex, hands-on workflows. Manufacturing, logistics, and field service lead because voice integrates seamlessly into physical tasks. Healthcare uses it for procedural documentation. The common thread is an environment where traditional screens create dangerous or inefficient friction, and speech provides a natural solution.

What's the single biggest technical hurdle to reliability?

Ensuring clean intent interpretation in conversational AI for enterprises. Background noise, accents, or ambiguous phrasing can challenge the system. The solution isn't just better speech recognition. It's a robust reasoning layer that asks contextual, clarifying questions before executing commands. This validation loop is critical for preventing errors and is a key differentiator for enterprise-grade platforms.

Can a voice agent truly integrate with our legacy backend systems?

Yes, but through orchestration layers in enterprise voice AI solutions. The agent itself doesn't connect directly to your legacy ERP. Instead, it operates through a secure middleware or tool-use framework that translates spoken commands into the specific API calls or data queries your older systems require. This abstraction layer is essential for compatibility and security.
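The abstraction layer described here can be pictured as a thin adapter between recognized intents and the legacy system's own call conventions. The sketch below is illustrative only: `LegacyERP`, its `QRY_STOCK` method, and the `check_inventory` intent are invented stand-ins, not a real ERP interface.

```python
from typing import Any, Callable, Dict


class LegacyERP:
    """Hypothetical stand-in for an older system with its own call style."""

    def QRY_STOCK(self, item_code: str) -> int:
        # Fixed sample data in place of a real lookup (assumption).
        return {"A100": 12}.get(item_code, 0)


class Middleware:
    """Translates agent intents into the calls the legacy system expects."""

    def __init__(self, erp: LegacyERP) -> None:
        self._erp = erp
        self._routes: Dict[str, Callable[[Dict[str, str]], Dict[str, Any]]] = {
            "check_inventory": self._check_inventory,
        }

    def handle(self, intent: str, slots: Dict[str, str]) -> Dict[str, Any]:
        handler = self._routes.get(intent)
        if handler is None:
            raise KeyError(f"unsupported intent: {intent}")
        return handler(slots)

    def _check_inventory(self, slots: Dict[str, str]) -> Dict[str, Any]:
        # Normalize the spoken item code to the format the ERP requires.
        code = slots["item"].strip().upper()
        return {"item": code, "quantity": self._erp.QRY_STOCK(code)}


if __name__ == "__main__":
    mw = Middleware(LegacyERP())
    print(mw.handle("check_inventory", {"item": "a100"}))
```

Because the agent only ever sees intents and structured results, the legacy system can be replaced or upgraded by changing the adapter alone.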

How do we measure the success of a pilot beyond basic cost savings?

Look at process completion time and first-contact resolution rate when deploying Voice AI for customer support. The most telling metric is often the reduction in "swivel-chair" work, the manual toggling between applications that a user eliminates by narrating a task. This directly correlates with productivity gains and reduced cognitive load, which are leading indicators of successful adoption and scaling.
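Both metrics named above are straightforward to compute from pilot logs. The sketch below assumes each logged interaction records its completion time and the number of contacts needed to resolve it. The `Interaction` record and its field names are hypothetical, and the sample values are placeholders rather than real results.

```python
from dataclasses import dataclass
from statistics import median
from typing import List


@dataclass
class Interaction:
    seconds_to_complete: float
    contacts_needed: int  # 1 means resolved on first contact


def first_contact_resolution_rate(items: List[Interaction]) -> float:
    """Share of interactions resolved in a single contact."""
    if not items:
        raise ValueError("no interactions to measure")
    resolved_first = sum(1 for i in items if i.contacts_needed == 1)
    return resolved_first / len(items)


def median_completion_seconds(items: List[Interaction]) -> float:
    """Median time to complete a process, less sensitive to outliers than the mean."""
    return median(i.seconds_to_complete for i in items)


if __name__ == "__main__":
    # Placeholder sample, not measured data.
    sample = [
        Interaction(40, 1),
        Interaction(90, 2),
        Interaction(60, 1),
        Interaction(30, 1),
    ]
    print(first_contact_resolution_rate(sample))
    print(median_completion_seconds(sample))
```

Running the same calculation on a pre-pilot baseline and on the pilot period gives a like-for-like comparison.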

Are there specific workflows where voice interfaces are not recommended?

They are less suited for presenting large amounts of discrete data, like reviewing a full financial spreadsheet. Speech is ideal for initiating actions, reporting status, or querying specific facts within Voice AI workflows. The boundary lies between information delivery, which can be inefficient via audio, and operational command, which is where voice excels.

What is a "conversational permission layer" and why is it necessary?

It is a critical security protocol in Voice AI for enterprise systems. Before executing sensitive actions like approving payments or changing system access, the agent will verbally request confirmation or additional authentication within the dialogue flow. This builds essential digital friction back into the high-speed interface, creating an audit trail and preventing unauthorized execution.

How does this evolution impact vendor selection and pricing?

Expect pricing to move from per-seat licensing to value-based models, like cost-per-successful-transaction in Voice AI software for enterprises. You will need to evaluate vendors on their orchestration architecture and tool-use capabilities, not just speech recognition accuracy. The partnership is less about licensing software and more about integrating an execution layer into your operational core.

January 29, 2026
